Interested in web scraping Indeed job listings to get all the key information directly in a database? Learn how to create such a tool!

Note: Many websites restrict or ban scraping data from their pages. While you can use a reputable data collection solution like ScraperAPI to get around hurdles, be sure to follow best practices. Read every website’s terms, conditions, and restrictions before scraping their website. More from a legal standpoint here.

1. Setting up our environment

To start a web scraping project, there is one main required library:

- Selenium is used for automating web applications. It allows you to open a browser and perform tasks as a human being would, such as clicking buttons and searching for specific information on websites.

Additionally, we need a Driver to interact with our browser. To set up our environment, we first need to:

- Install Selenium: Run the following command in your command prompt or terminal:

pip install selenium

- Download the Driver: We need a driver so Selenium can interact with the browser. Check your Google Chrome version and download the right Chromedriver here. You need to unzip the driver and place it into a path you remember, since we will need this path later on!

Note: Google Chrome was used as the default browser. Just make sure to find the correct driver for your browser type and version. To understand the basics of Selenium and HTML, read this article.

2. Loading Libraries

Once we have all the required libraries installed in our environment, we start our code by loading all of them. Apart from selenium, we will need the pandas and time libraries, among others.

# Importing Selenium and all related modules

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.options import Options

#Importing the libraries to get the webdriver

import requests

import wget

import zipfile

import os

# Importing other required libraries

import time

import pandas as pd

from datetime import date, timedelta

import random


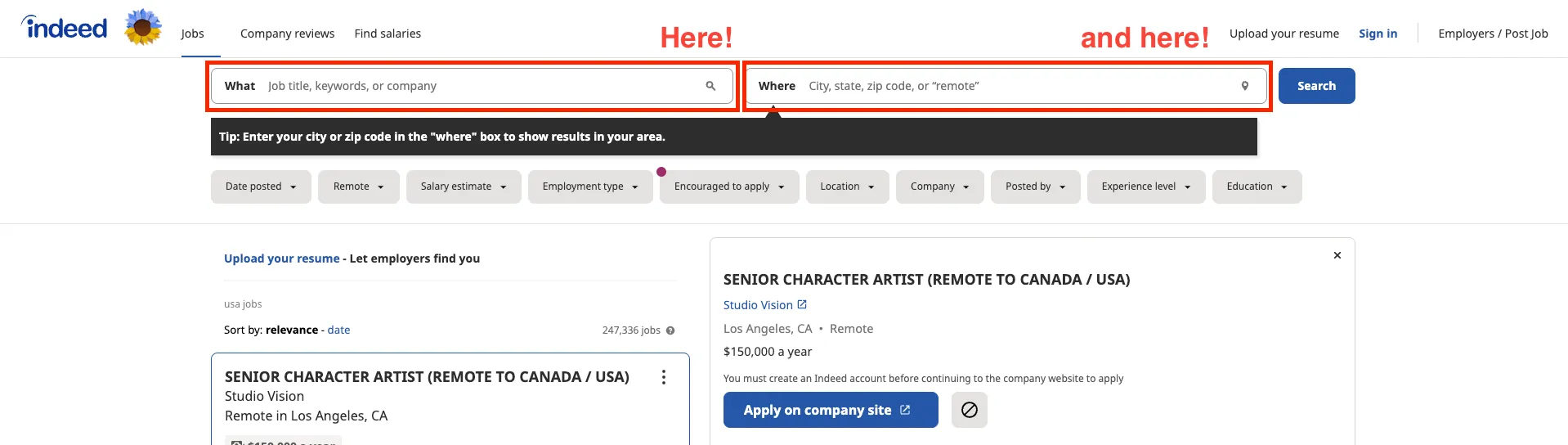

3. Understanding Indeed URLs and defining our job and location of interest

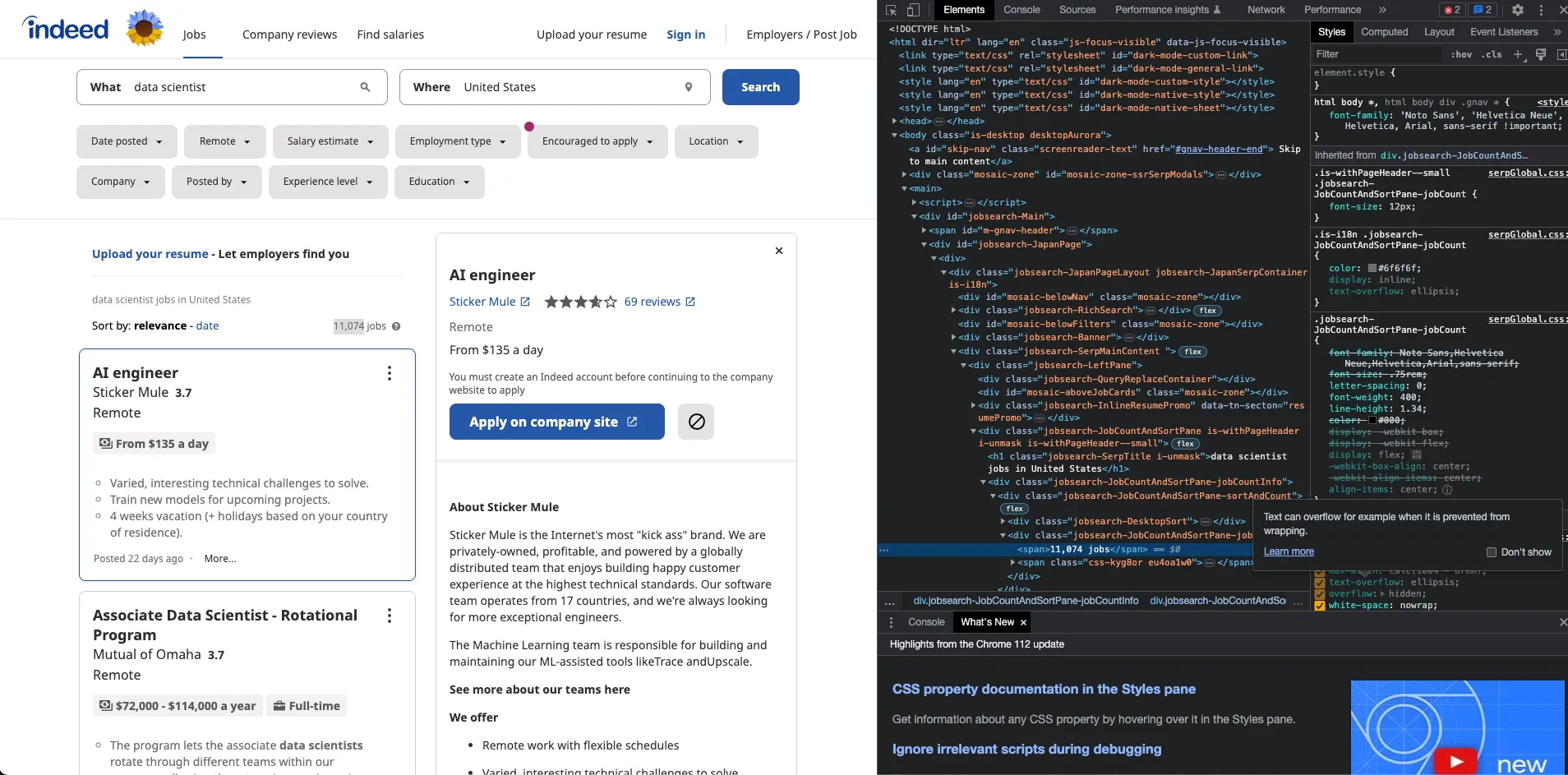

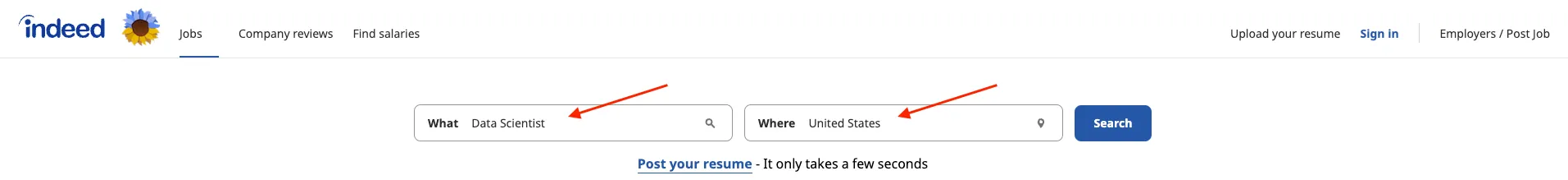

If we go to Indeed’s website, we can easily see two inputs that define which job we are looking for and in which location.

In this case, I will start looking for jobs as Data Scientist in the USA.

If we search for such a job and location, we can observe that both keywords are reflected in the corresponding URL. We have q=Data+Scientist&l=USA.

That’s why we can simply modify the URL directly to choose whatever job and location we want, which makes our life way easier! 😉

Are we sure about this…?

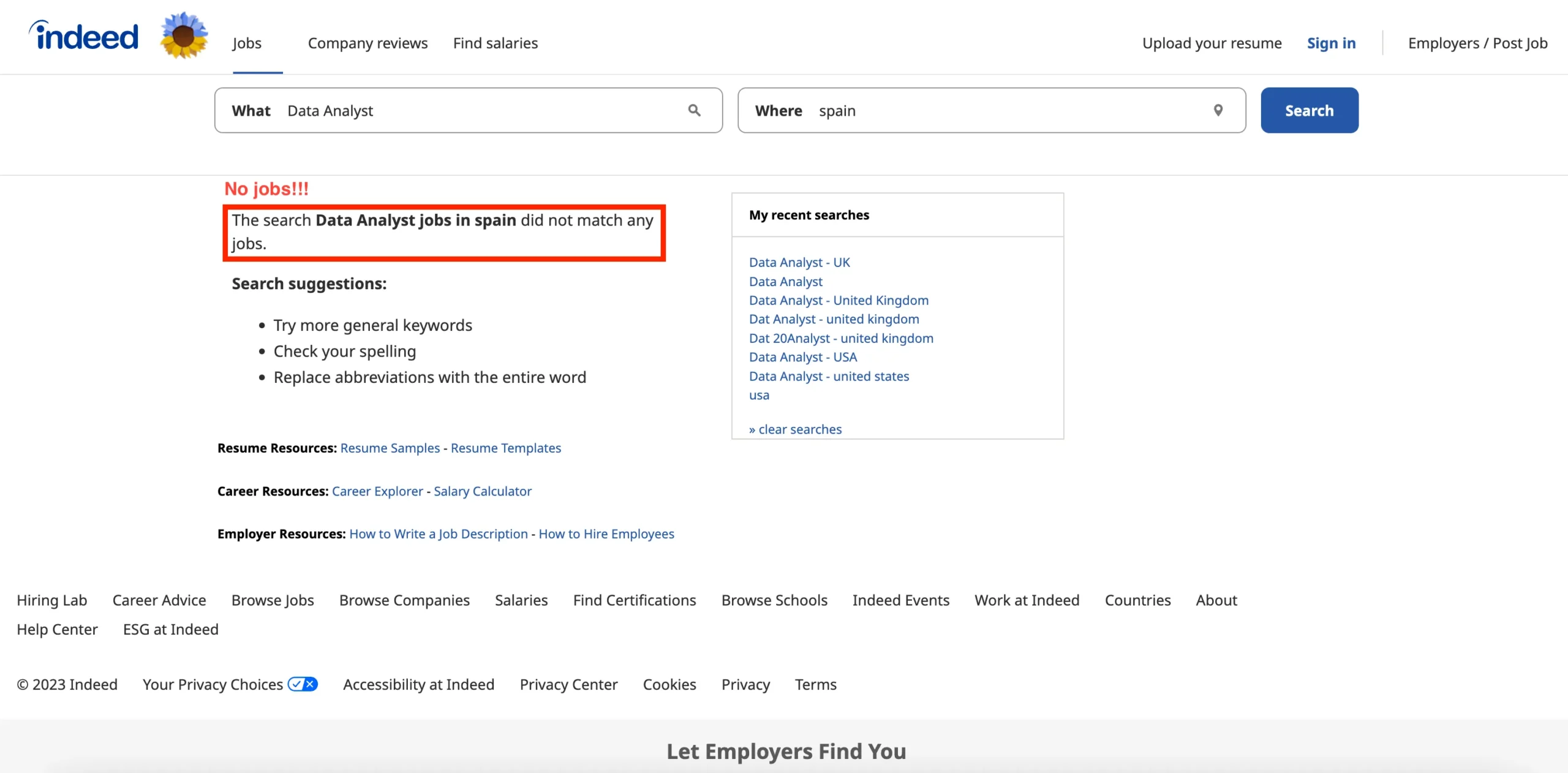

Indeed works with national pages, which means it presents a separate website with the job offers of each country. So if you try to check Spanish jobs on the USA website, the search will return no results, as you can observe below.

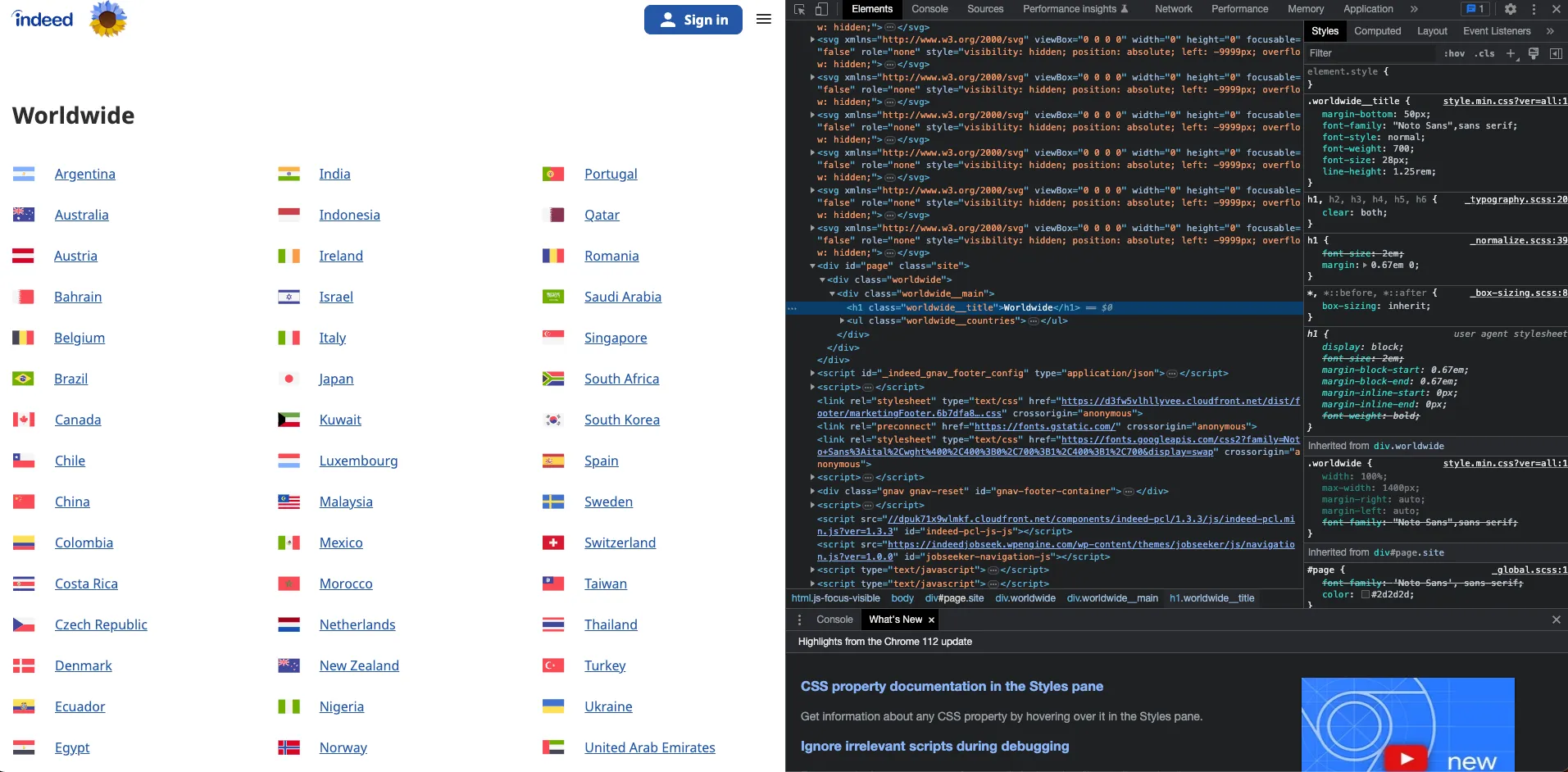

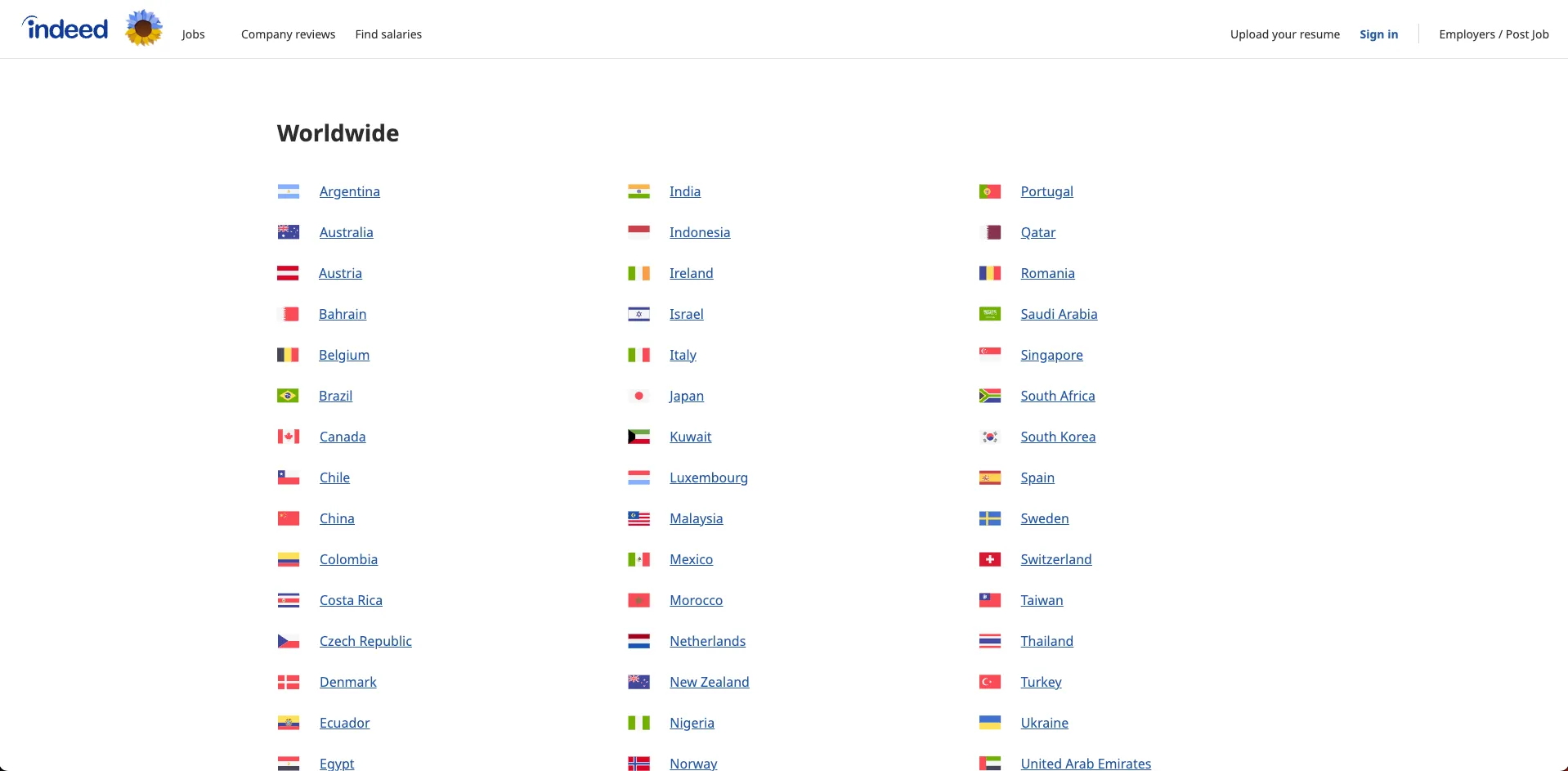

As we want to be able to switch countries if required, we need to address this. What is the fastest and easiest way to do it? Creating a dictionary with all countries and URLs. Our personalized URL depends on the country we want to look for. Indeed presents a website with all their available countries and their corresponding URLs.

Screenshot of Indeed worldwide UI. List of URLs by country.

Before starting to scrape, we still need to consider one last thing! Whenever we use more than one word, the corresponding search URL will separate each of the words using “+”. Thus, we can easily generate the URL we desire as follows:

- Defining a function that splits the input and adds a “+” between all different words.

- Defining what job and location we want to search for.

- Defining the corresponding URL

def url_string(string):

    # Splitting the input by spaces and joining the words back with "+"

    return "+".join(string.split(" "))

# Defining the job and the country

job_name = "Data Scientist"

country_name = "Argentina"

# Getting the corresponding URL for our country (country_link_dict is built in step 5)

url = country_link_dict[country_name]

# Adding + in case they are required

job_name_url = url_string(job_name)

country_name_url = url_string(country_name)

#Defining the URL to find the job and the country

url = url.split("/?hl")[0] + "/jobs?q={0}&l={1}".format(job_name_url,country_name_url)

# Opening the url we have just defined in our browser (the driver instance is created in step 4)

driver.get(url)
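The same URL can also be assembled with the standard library, which handles the “+” encoding and any special characters for us. This is only a sketch: build_search_url is a hypothetical helper, and the base URL in the usage line is an assumed example of what country_link_dict might contain.

```python
from urllib.parse import urlencode, urlsplit


def build_search_url(country_url, job_name, location):
    """Build an Indeed-style search URL from a country home page URL."""
    parts = urlsplit(country_url)
    # urlencode relies on quote_plus by default, so spaces become "+".
    query = urlencode({"q": job_name, "l": location})
    return f"{parts.scheme}://{parts.netloc}/jobs?{query}"


# Assumed example base URL, for illustration only.
print(build_search_url("https://ar.indeed.com/?hl=es", "Data Scientist", "Argentina"))
```

Compared with joining the words by hand, this version also escapes characters such as “&” or accents that could otherwise break the query string.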

4. Loading the driver and creating an instance

The basic idea here is to control a web browser with our Python code. To do so, we need to create a bridge between Python and our browser. That’s why we generated an instance of our web driver using the file we downloaded in step 1 — Remember the path!

# __________________Defining the Chrome Driver Instance

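# Creating a webdriver instance (Selenium 4 syntax: the driver path is passed through a Service object)

from selenium.webdriver.chrome.service import Service

options = Options()

#options.add_argument('--headless') -> You can activate this option if you do not want to watch the scraping process (no browser window is shown).

options.add_argument('--disable-gpu') # Last I checked this was necessary.

driver = webdriver.Chrome(service=Service("ChromeDriver_Path/chromedriver"), options=options)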

Once we have the instance, it is as easy as opening the job list URL using the driver.get() command. The previous code will open up a Chrome window with our Indeed webpage.

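⚠️ If you keep using this code for a long time, your browser will eventually update itself, and the driver then needs to be updated as well. To handle this, you can download the latest ChromeDriver directly from Python using the following code. Note that this download location only lists ChromeDriver releases up to version 114, so newer Chrome versions need a driver from another source.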

# get the latest chrome driver version number

url = 'https://chromedriver.storage.googleapis.com/LATEST_RELEASE'

response = requests.get(url)

version_number = response.text

# build the download url (the file name below is the macOS build; pick the archive that matches your operating system)

download_url = "https://chromedriver.storage.googleapis.com/" + version_number +"/chromedriver_mac64.zip"

# download the zip file using the url built above

latest_driver_zip = wget.download(download_url,'chromedriver.zip')

# extract the zip file

with zipfile.ZipFile(latest_driver_zip, 'r') as zip_ref:

    zip_ref.extractall("ChromeDriver_Path") # you can specify the destination folder path here

# delete the zip file downloaded above

os.remove(latest_driver_zip)
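The download URL above hardcodes the macOS archive. A minimal sketch of how the archive name could be picked from the operating system instead is shown below. The helper name driver_zip_name is hypothetical, and the mapping only covers the three common archive names published in that download location.

```python
import platform

# Archive names as published in the chromedriver.storage.googleapis.com bucket.
DRIVER_ZIPS = {
    "Darwin": "chromedriver_mac64.zip",
    "Linux": "chromedriver_linux64.zip",
    "Windows": "chromedriver_win32.zip",
}


def driver_zip_name(system=None):
    """Return the ChromeDriver archive name for the given (or current) OS."""
    system = system or platform.system()
    try:
        return DRIVER_ZIPS[system]
    except KeyError:
        raise ValueError(f"No known ChromeDriver archive for {system!r}")


print(driver_zip_name())
```

The returned name can then replace the fixed "chromedriver_mac64.zip" part when building download_url.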

5. Creating a countries and URL dictionary

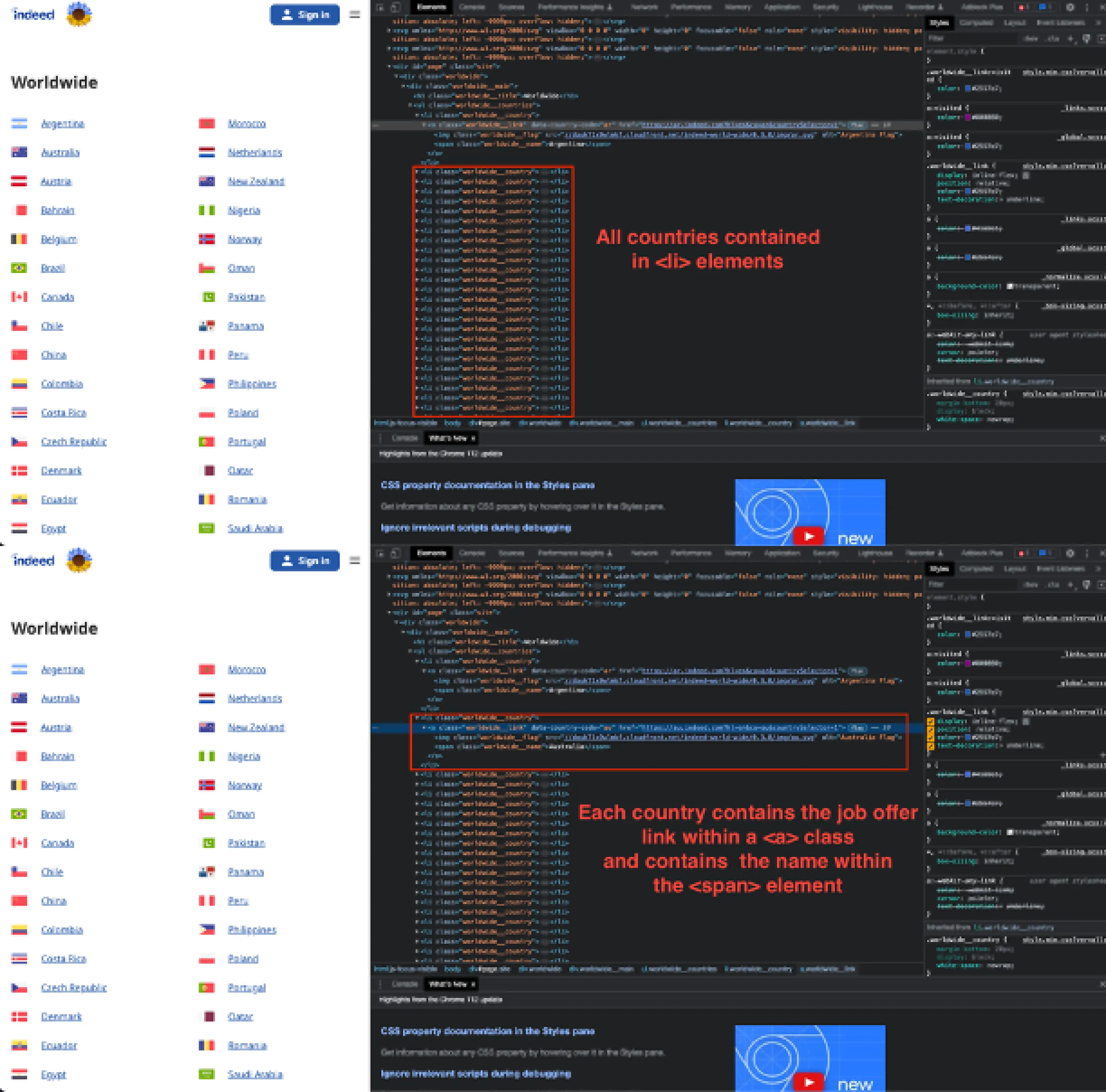

If you are not familiar with web scraping basics, you can right-click your mouse, select inspect, or press F12. The following popup should appear:

If we inspect the elements, we can observe that all countries are contained within an element with the id page. Every country entry is an <li> element that holds an <a> element with the link, which in turn holds a <span> element with the name of the country.

To obtain both values, it is as easy as getting all <a> elements contained within the page element.

- The country name can be found with the command .text (we could use .get_attribute("text") as well).

- The link can be found with the command .get_attribute("href").

# Scraping all countries and their corresponding links

url = "https://www.indeed.com/worldwide"

# Opening the url we have just defined in our browser

driver.get(url)

# We get a list containing all the country links that we have found.

countries_list = driver.find_element(By.ID,"page")

countries = countries_list.find_elements(By.TAG_NAME,"a") # return a list

country_link_dict = {ii.text: ii.get_attribute("href") for ii in countries}
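Depending on the page, some of the <a> elements found this way may have no visible text or no link, which would leave empty keys in the dictionary. The sketch below filters those out. build_country_dict is a hypothetical helper that works on plain (text, href) pairs, and the idea that such empty links exist is an assumption worth checking in the inspector.

```python
def build_country_dict(links):
    """Build {country name: url} from (text, href) pairs, skipping empty entries."""
    result = {}
    for text, href in links:
        name = (text or "").strip()
        if name and href:
            result[name] = href
    return result


# Hypothetical sample data; real pairs would come from the <a> elements.
sample = [("Argentina", "https://ar.indeed.com/"), ("", "https://example.com/"), ("  Spain ", "https://es.indeed.com/")]
print(build_country_dict(sample))
```

With Selenium, the pairs could be produced with a generator such as ((a.text, a.get_attribute("href")) for a in countries).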

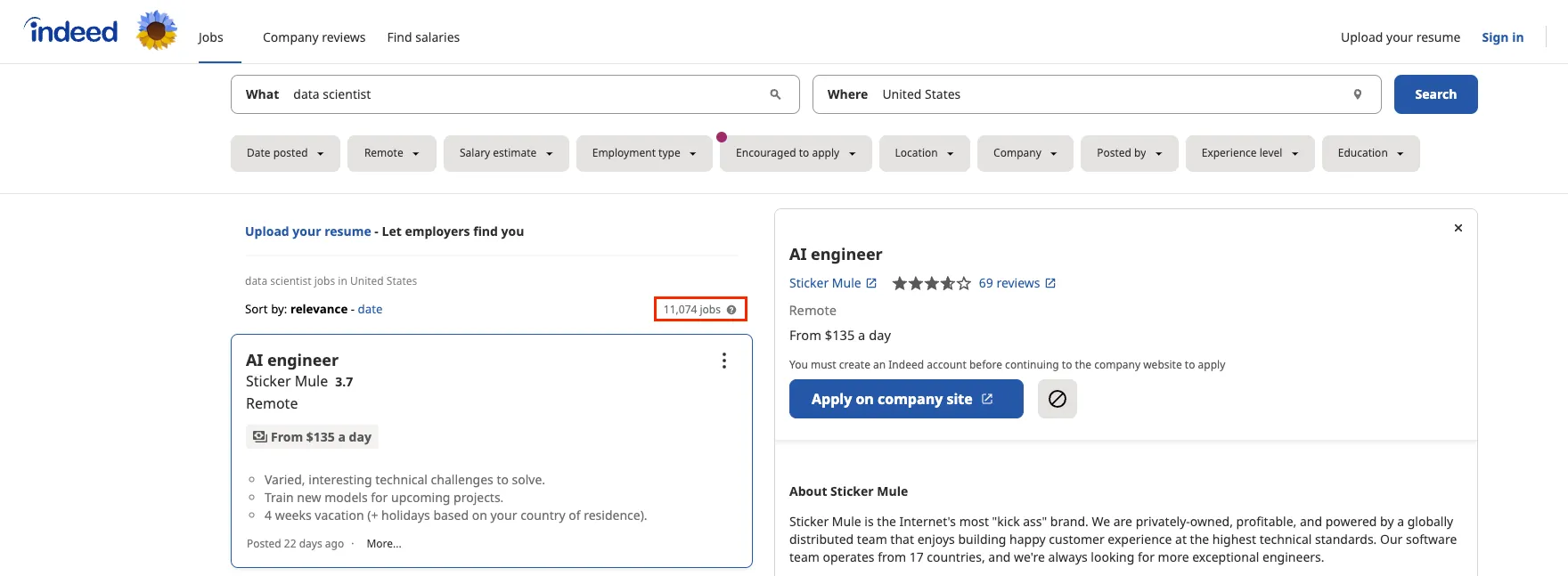

6. Detecting how many jobs are available

Next, we need to know how many jobs our search has found. To do so, we use Selenium to read the number that appears in the upper-left corner.

We can find the element by its class using the following command: driver.find_element(By.CLASS_NAME,"jobsearch-JobCountAndSortPane-jobCount").get_attribute("innerText"). Once we have the string, we remove the text and the thousands separator and convert it to an integer. And that’s it! 🙂

# Find how many jobs are offered.

jobs_num = driver.find_element(By.CLASS_NAME,"jobsearch-JobCountAndSortPane-jobCount").get_attribute("innerText")

# Erase the jobs string

jobs_num = jobs_num.replace(' jobs','')

# The thousands separator is removed and the jobs num is converted to an integer.

jobs_num = jobs_num.replace(',', '')

if jobs_num.isdigit():

    jobs_num = int(jobs_num)
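The string handling above depends on the exact wording “ jobs”, which differs between country sites. As a more tolerant alternative, here is a small sketch that pulls the first number out of the text with a regular expression. parse_job_count is a hypothetical helper, and it assumes the first number in the string is the job count.

```python
import re


def parse_job_count(text):
    """Return the first number in the text as an int, or None if there is none."""
    match = re.search(r"\d[\d,.]*", text)
    if match is None:
        return None
    # Drop thousands separators such as "," or "." before converting.
    digits = re.sub(r"\D", "", match.group())
    return int(digits)


print(parse_job_count("1,234 jobs"))
```

Returning None when no number is present lets the calling code decide how to handle an empty result page.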

7. Browsing jobs

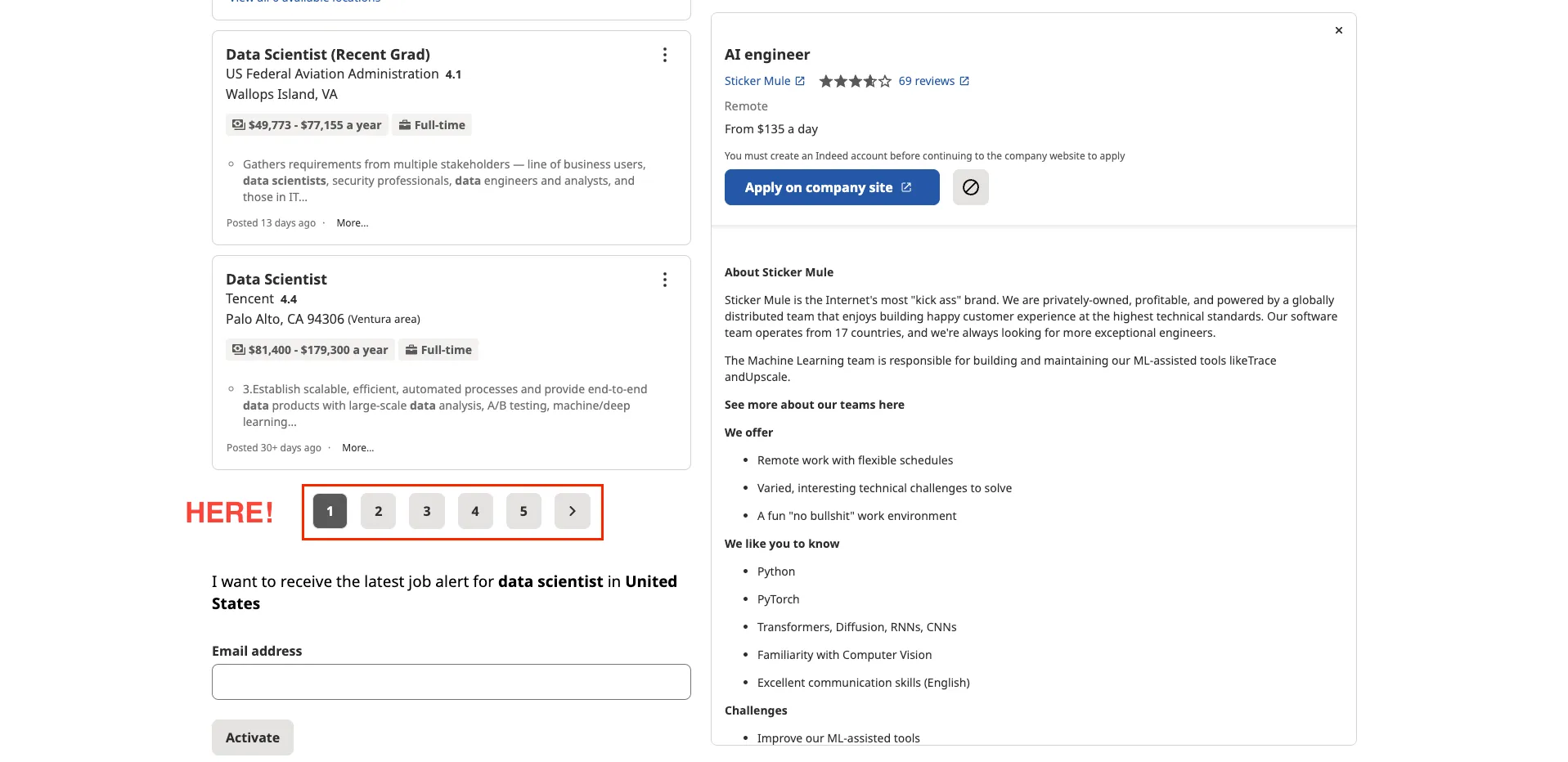

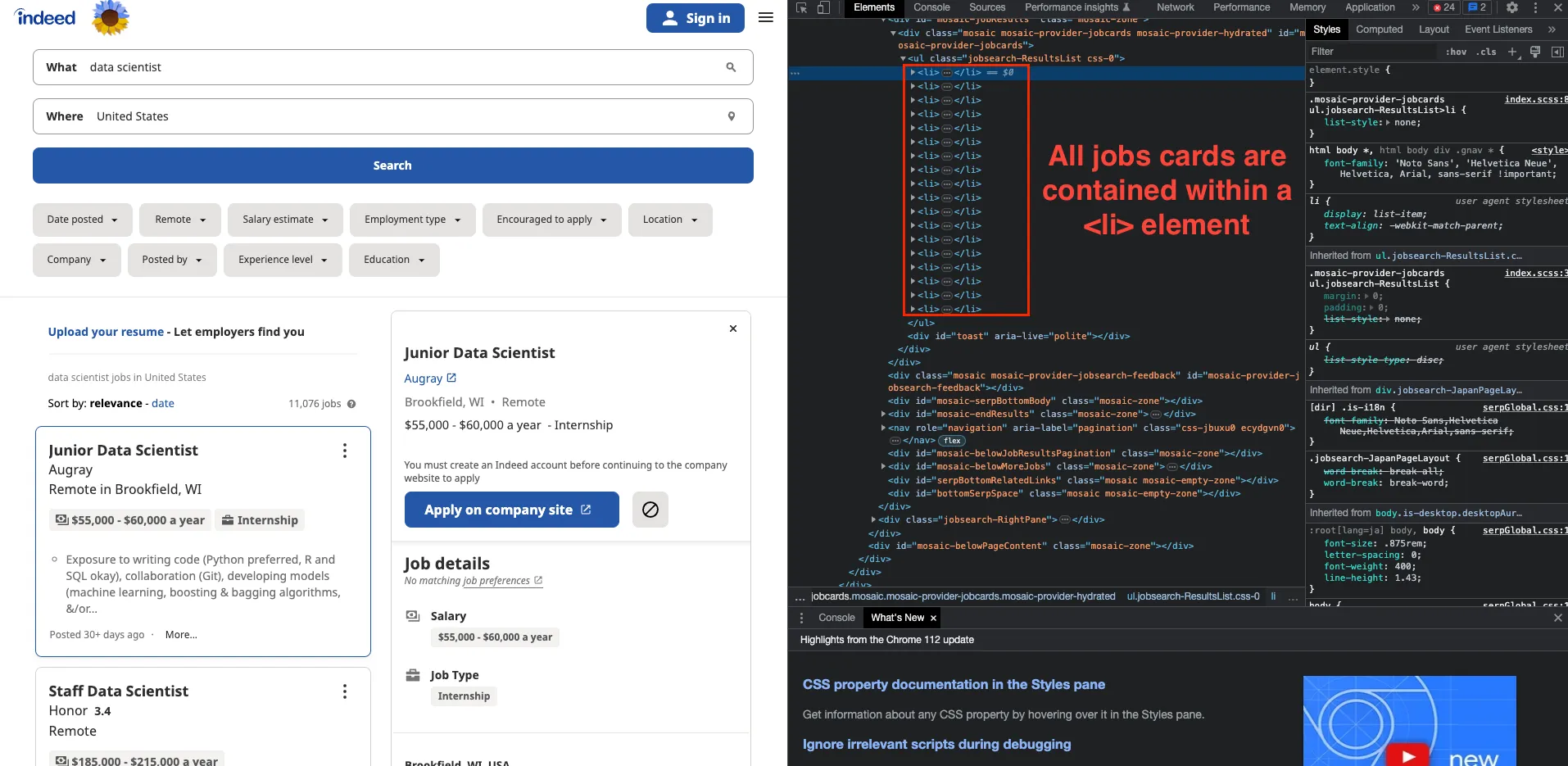

Indeed’s search results typically show 15 results per page, displayed on the left-hand side. Each job’s metadata is displayed on a job card.

While it’s great that the job card contains most of the data we’re looking for — job title, company, and location — it only has an abbreviated version of the job description. This is why we will have to click on the job card to get the full job data. To load more job offers, it is important to consider that the way Indeed job postings work is by having a pagination number at the end of the page.
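Since each page holds about 15 job cards, the number of pages to visit follows directly from the job count. A minimal sketch, where pages_needed is a hypothetical helper and 15 is the typical page size mentioned above:

```python
import math


def pages_needed(total_jobs, per_page=15):
    """Number of result pages required to see total_jobs job cards."""
    return math.ceil(total_jobs / per_page)


print(pages_needed(1000))
```

This gives an upper bound for the pagination loop, which helps avoid clicking past the last page.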

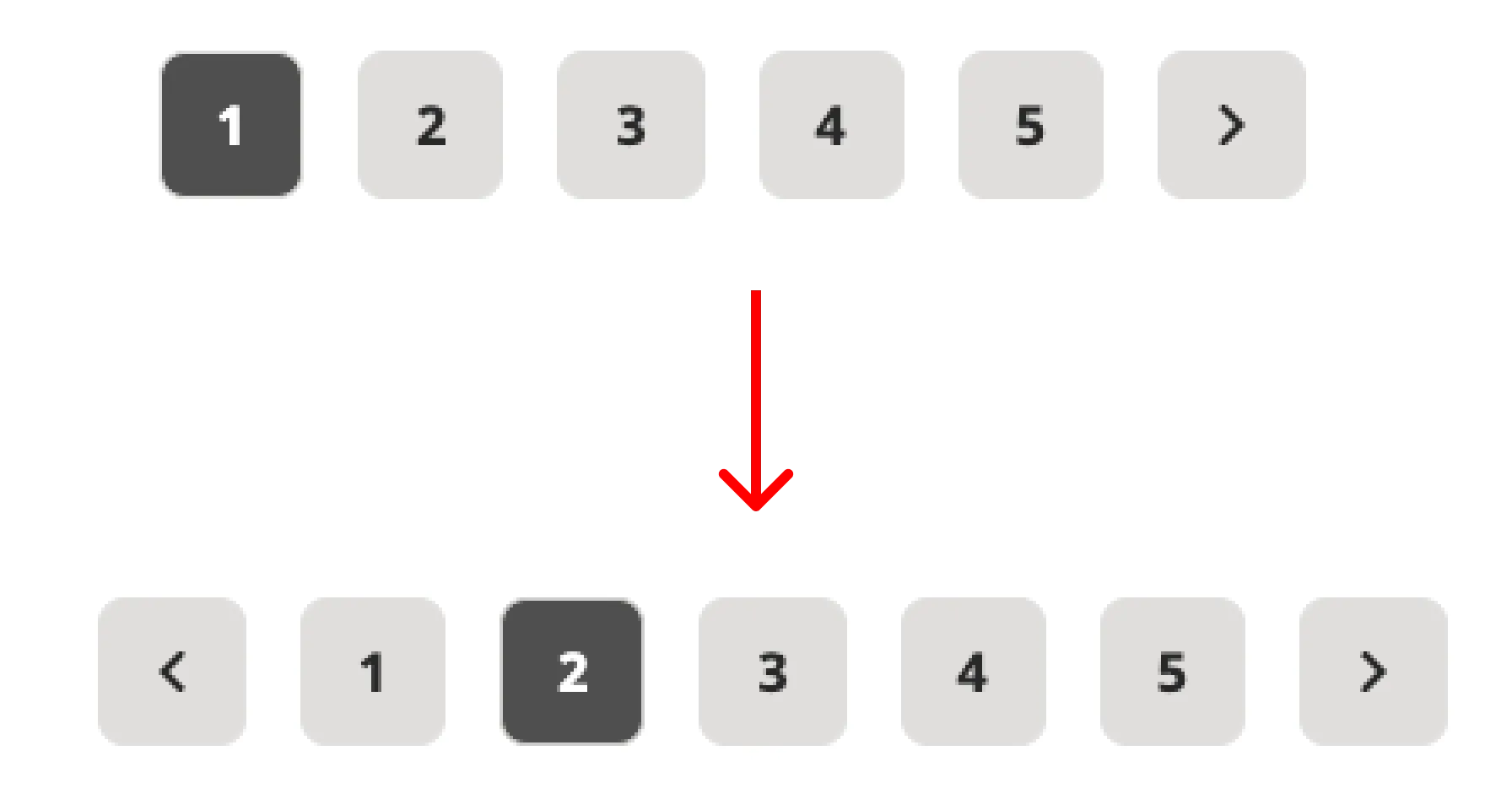

It is as easy as clicking the next page button… right? Well, there’s another important thing to consider. The first time we open Indeed, there are 6 pagination buttons, and the 6th is the one that goes to the next page. However, starting on the second page, an additional button for going back appears, making our button of interest the 7th.

To accommodate both scenarios, we add a ‘try-except’ procedure. To locate the button, we will use its XPath.

So we can easily click on the next page button using the following command: .find_element(By.XPATH,"next_page_button_xpath").click()
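One way to write that try-except is to try the 7th button first and fall back to the 6th, since the 7th only exists from the second page onwards. The sketch below is an assumption about how this could look, not the code from the repository. click_next_page is a hypothetical helper, and "xpath" is the string value behind Selenium’s By.XPATH.

```python
def click_next_page(driver, xpaths):
    """Click the first button found among xpaths; return False if none exists."""
    for xpath in xpaths:
        try:
            driver.find_element("xpath", xpath).click()
            return True
        except Exception:
            # Element missing or not clickable: try the next candidate.
            continue
    return False
```

It would be called as click_next_page(driver, [seventh_button_xpath, sixth_button_xpath]), and a False result is a natural signal to stop the scraping loop.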

8. Detecting all elements

If we inspect the website again, we can easily observe that every job card is held within an <li> element.

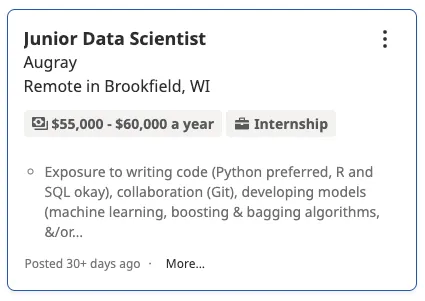

Within each job card, we can find a <div> element with class=cardOutline that contains all the abbreviated info we want to store:

- Job Title is stored in an <h2> heading with the class ‘jobTitle’.

- Company Name is in a <span> tag with the class ‘companyName’.

- Company location is in a <div> section with the class ‘companyLocation’.

- Job salary is in a <div> section with the class ‘salary-snippet-container’.

- The posting date range is in a <span> section with the class ‘date’.

⚠️ It is important to know that the structure of the webpage can change at any time. This is why you should try to understand how it works by inspecting the elements yourself.

To store all this data, we first get the element that holds all the jobs obtained in the previous step using driver.find_element(By.ID,"mosaic-jobResults"). This element will contain all 15 job cards. As each field has a different class name, we can use .find_element(By.CLASS_NAME,"element") on a job card to find it and then read its content with .text:

- Job Title: .find_element(By.CLASS_NAME,"jobTitle").text

- Company Name: .find_element(By.CLASS_NAME,"companyName").text

- Location: .find_element(By.CLASS_NAME,"companyLocation").text

- Posting date: .find_element(By.CLASS_NAME,"date").text

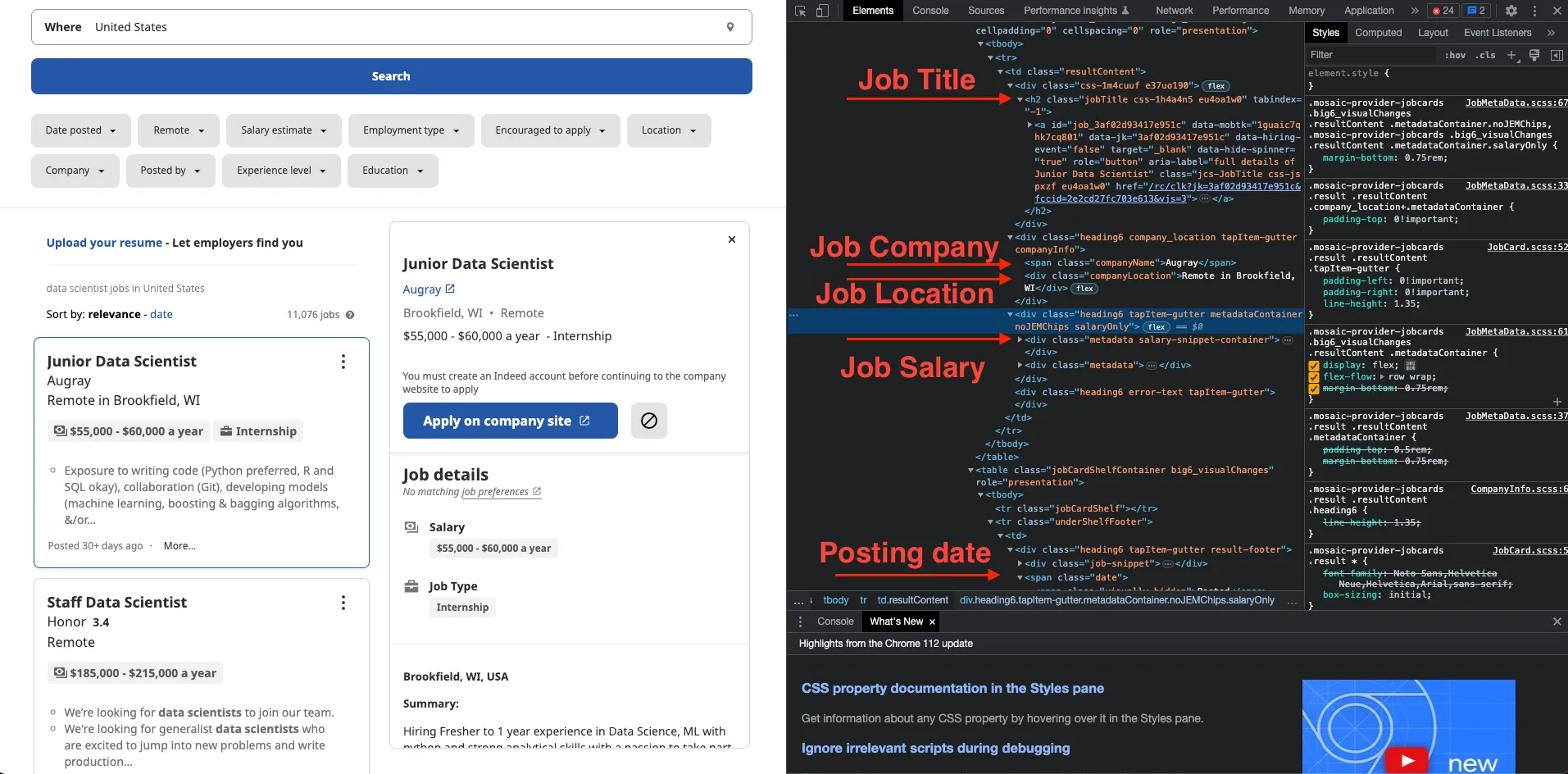

We will get some additional values.

- JobLink: .find_element(By.CLASS_NAME,"jobTitle").find_element(By.CSS_SELECTOR,"a").get_attribute("href")

- JobId: .find_element(By.CLASS_NAME,"jobTitle").find_element(By.CSS_SELECTOR,"a").get_attribute("id")
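The posting date comes back as relative text rather than a real date. If an absolute date is preferred, it can be derived from the scraping date. The sketch below assumes English strings such as “Posted 3 days ago”, “30+ days ago” or “Just posted”. Those formats and the posting_date helper are assumptions to check against what the site actually shows.

```python
import re
from datetime import date, timedelta


def posting_date(text, today=None):
    """Convert relative text like 'Posted 3 days ago' into a date, or None."""
    today = today or date.today()
    lowered = text.lower()
    if "just posted" in lowered or "today" in lowered:
        return today
    match = re.search(r"(\d+)\+?\s+days?\s+ago", lowered)
    if match:
        return today - timedelta(days=int(match.group(1)))
    # Unknown format: leave it to the caller to keep the raw text.
    return None


print(posting_date("Posted 3 days ago", today=date(2023, 1, 10)))
```

For “30+ days ago” the result is only a lower bound on the age of the posting, so the raw text is still worth storing alongside it.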

For the JobSalary, I found a problem. Many job offers did not have a salary at all, and many others had another element called estimated salary. As it can happen that we try to find the element and it doesn’t exist at all, the code might raise an error and stop our scraping. This is why I decided to use a try-except again.

try:

    job_salary_list.append(ii.find_element(By.CLASS_NAME,"salary-snippet-container").text)

except Exception:

    try:

        job_salary_list.append(ii.find_element(By.CLASS_NAME,"estimated-salary").text)

    except Exception:

        job_salary_list.append(None)
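The nested try-except above can be flattened into a small helper that tries a list of class names in order. This is a sketch of that idea rather than the original code. first_text is a hypothetical name, and "class name" is the string value behind Selenium’s By.CLASS_NAME.

```python
def first_text(card, class_names):
    """Return the text of the first element found among class_names, else None."""
    for class_name in class_names:
        try:
            return card.find_element("class name", class_name).text
        except Exception:
            # This class is not present on the card: try the next one.
            continue
    return None
```

Inside the loop it would be used as job_salary_list.append(first_text(ii, ["salary-snippet-container", "estimated-salary"])), and adding a third salary variant later only means extending the list.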

Finally, we need to get the full job description. This requires the following actions:

- Clicking on the JobTitle element to open the full job description.

- Using the time.sleep() command to make sure the new page is completely loaded. ⚠️ I usually wait for a random number of seconds to “imitate” human behavior.

- Finding the description element using the following command: driver.find_element(By.ID, "jobDescriptionText")

- Just in case there is not enough time for the description to be loaded, I add a try-except procedure to return a null description when the element is not found.
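A fixed sleep either waits too long or not long enough. An alternative is to poll until the description shows up, which is what Selenium’s own WebDriverWait does. The sketch below shows the idea in plain Python. wait_for is a hypothetical helper, and the timeout and polling values are arbitrary assumptions.

```python
import time


def wait_for(fetch, timeout=5.0, poll=0.25):
    """Call fetch() until it returns a truthy value or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            value = fetch()
            if value:
                return value
        except Exception:
            # The element is not there yet: keep polling.
            pass
        if time.monotonic() >= deadline:
            return None
        time.sleep(poll)
```

With the driver from this article it could be called as wait_for(lambda: driver.find_element(By.ID, "jobDescriptionText").text), which returns None when the description never loads.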

All code for step #7 and #8 can be found in the following gist:

# Empty lists to store the data.

job_title_list = []

job_company_list = []

job_location_list = []

job_salary_list = []

job_type_list = []

job_date_list = []

job_description_list = []

job_link_list = []

job_id_list = []

# The next button is defined (6th pagination button on the first page).

next_button_xpath = '//*[@id="jobsearch-JapanPage"]/div/div/div[5]/div[1]/nav/div[6]/a'

num_jobs_scraped = 0

while num_jobs_scraped < 1000:

    # The job browsing is started

    job_page = driver.find_element(By.ID,"mosaic-jobResults")

    jobs = job_page.find_elements(By.CLASS_NAME,"job_seen_beacon") # returns a list

    num_jobs_scraped = num_jobs_scraped + len(jobs)

    for ii in jobs:

        # Finding the job title and its related elements

        job_title = ii.find_element(By.CLASS_NAME,"jobTitle")

        job_title_list.append(job_title.text)

        job_link_list.append(job_title.find_element(By.CSS_SELECTOR,"a").get_attribute("href"))

        job_id_list.append(job_title.find_element(By.CSS_SELECTOR,"a").get_attribute("id"))

        # Finding the company name and location

        job_company_list.append(ii.find_element(By.CLASS_NAME,"companyName").text)

        job_location_list.append(ii.find_element(By.CLASS_NAME,"companyLocation").text)

        # Finding the posting date

        job_date_list.append(ii.find_element(By.CLASS_NAME,"date").text)

        # Trying to find the salary element. If it is not found, a None will be returned.

        try:

            job_salary_list.append(ii.find_element(By.CLASS_NAME,"salary-snippet-container").text)

        except Exception:

            try:

                job_salary_list.append(ii.find_element(By.CLASS_NAME,"estimated-salary").text)

            except Exception:

                job_salary_list.append(None)

        # We wait a random amount of seconds to imitate human behavior.

        time.sleep(random.random())

        # Click the job element to get the description

        job_title.click()

        # Wait a bit for the website to load (again with a random behavior)

        time.sleep(1+random.random())

        # Find the job description. If the element is not found, a None will be returned.

        try:

            job_description_list.append(driver.find_element(By.ID,"jobDescriptionText").text)

        except Exception:

            job_description_list.append(None)

    time.sleep(1+random.random())

    # We press the next button. If it is not found, there are no more pages and we stop.

    try:

        driver.find_element(By.XPATH,next_button_xpath).click()

    except Exception:

        break

    # The button element is updated to the 7th button instead of the 6th.

    next_button_xpath = '//*[@id="jobsearch-JapanPage"]/div/div/div[5]/div[1]/nav/div[7]/a'

Now our browser will scroll down all available jobs while clicking on them.

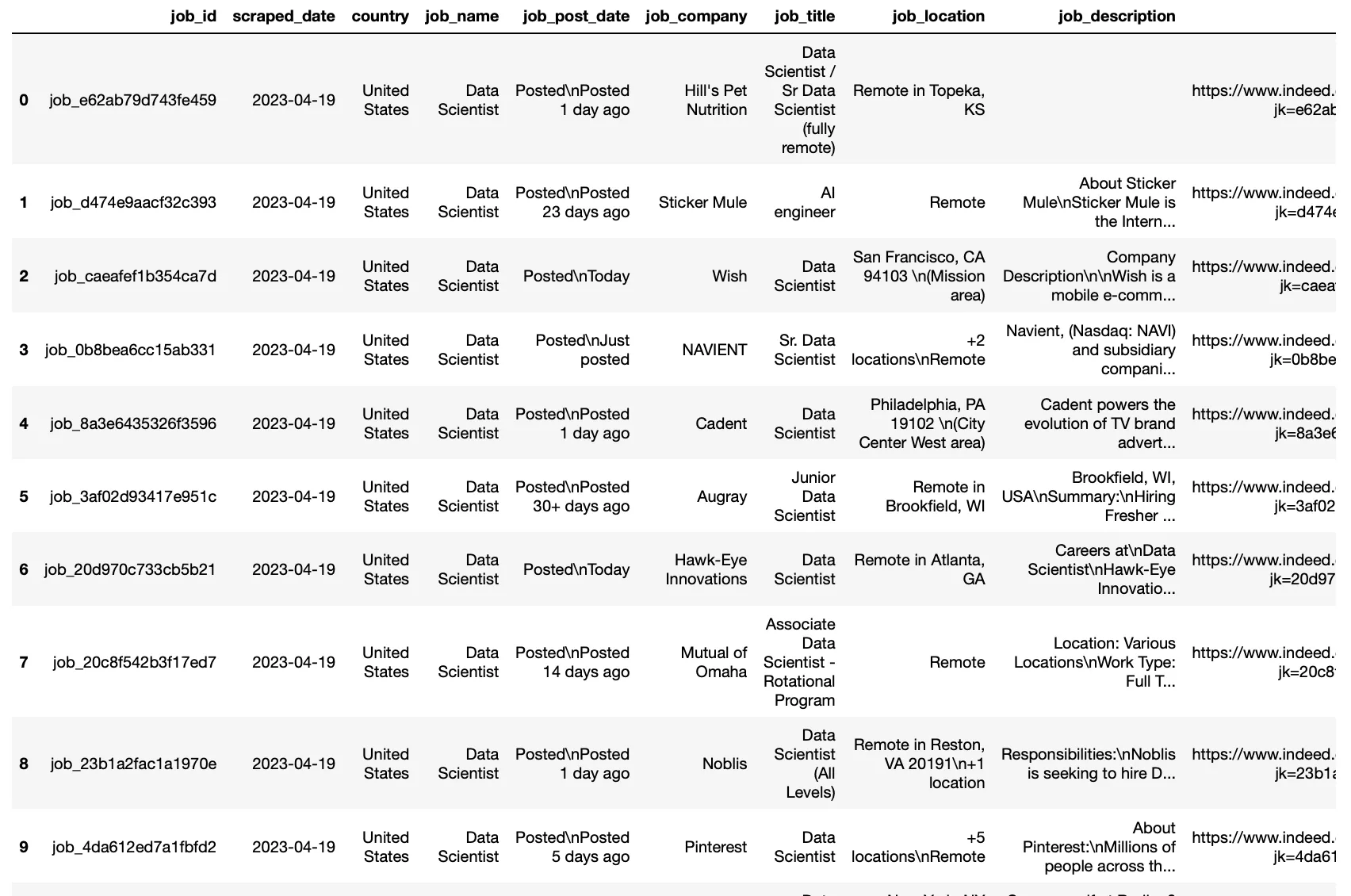

9. Creating our pandas dataframe and saving it up

Once we have all the data stored in different lists, we just need to create the pandas dataframe that will contain all the data we have just scraped.

country_list = [country_name]*len(job_title_list)

job_name_list = [job_name]*len(job_title_list)

scraped_date_list = [date.today()]*len(job_title_list)

indeed_job_data = pd.DataFrame({

    'job_id': job_id_list,

    'scraped_date': scraped_date_list,

    'country': country_list,

    'job_name': job_name_list,

    'job_post_date': job_date_list,

    'job_company': job_company_list,

    'job_title': job_title_list,

    'job_location': job_location_list,

    'job_description': job_description_list,

    'job_link': job_link_list,

    'job_salary': job_salary_list,

})

# The output folder is created if it does not exist yet.

os.makedirs("Output", exist_ok=True)

indeed_job_data.to_csv("Output/{0}_{1}_ddbb.csv".format(country_name,job_name))

Once this is done, we should obtain a dataframe that looks as follows:

The last step, done by the final line of the code above, is saving our dataframe as a CSV file.

Now, we have all scraped data saved up on our laptops!
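Because every field is appended to its own list, an exception in the middle of a job card can leave the lists with different lengths, and pandas then refuses to build the dataframe. A quick check before calling pd.DataFrame makes that easier to diagnose. The helper below is a hypothetical sketch that works on any dictionary of lists.

```python
def check_equal_lengths(columns):
    """Raise ValueError if the column lists differ in length; return the length."""
    lengths = {name: len(values) for name, values in columns.items()}
    if len(set(lengths.values())) > 1:
        raise ValueError(f"Column lengths differ: {lengths}")
    return next(iter(lengths.values()), 0)


# Hypothetical sample columns, for illustration only.
print(check_equal_lengths({"job_id": ["a", "b"], "job_title": ["x", "y"]}))
```

The error message lists the length of every column, which points directly at the field that failed during scraping.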

For further information, find my code on my GitHub repository web scraping. You can find both a JupyterNotebook and a Python project.

If you have a project that needs some extra help and assistance, try ScraperAPI. Sign up today and get 5,000 free API credits.