If you live in the US and have ever tried to search for a house to purchase or rent, you must have come across Zillow. It is one of the world’s biggest property websites and caters to millions of users’ needs each month. With such a mammoth user base and hundreds of thousands of property listings, Zillow is a treasure trove of data.

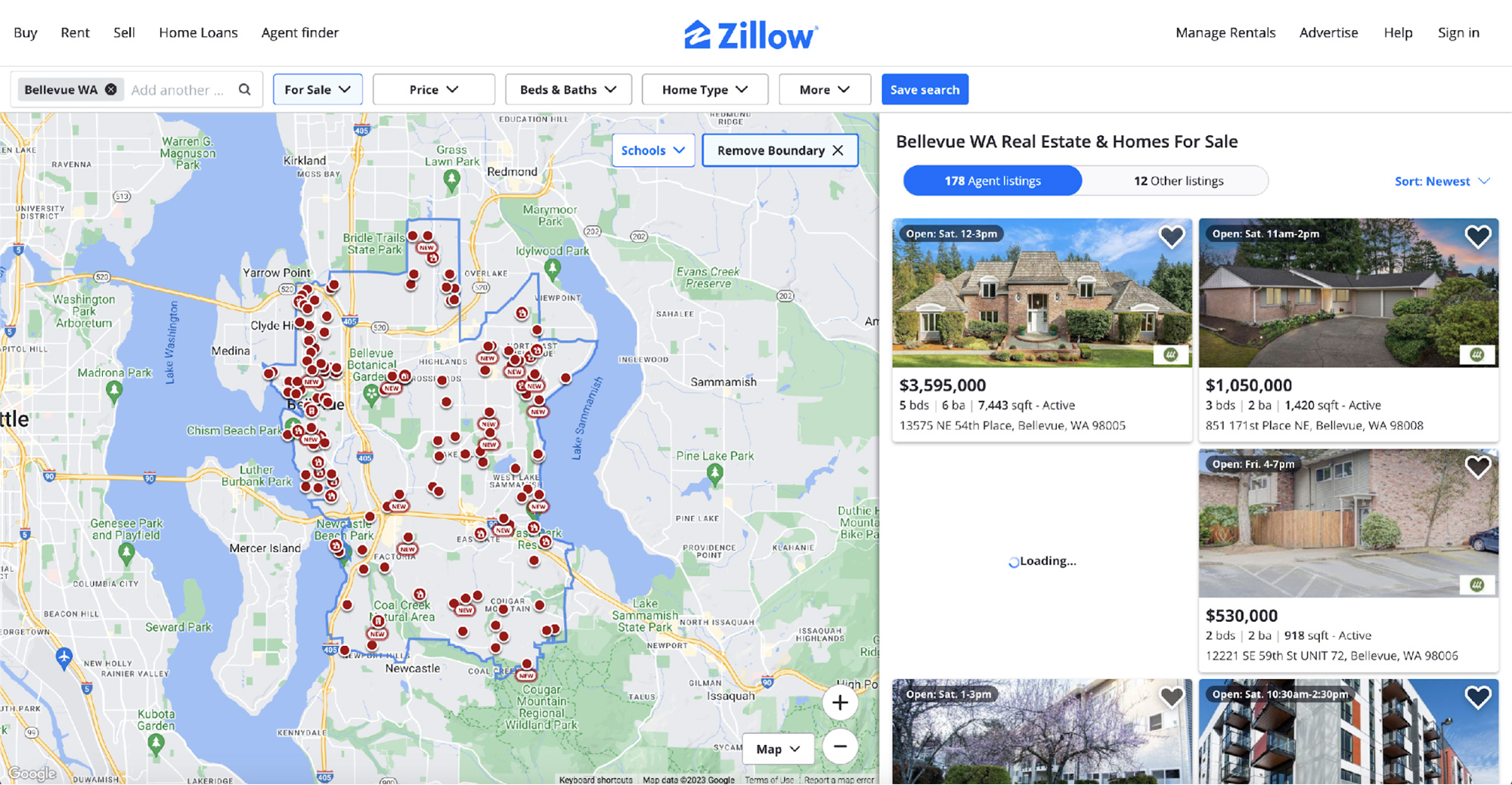

In this article, you will learn how to scrape search results data from Zillow while bypassing its bot detection. You will use Selenium and undetected-chromedriver to do all the heavy lifting. Before moving on, you can explore the search results page from Zillow at this link. This is what it looks like at the time of writing this article:

Requirements

As with any new project, start by creating a new folder. You can name it whatever you want. Then create an app.py file inside this folder and install two dependencies: Selenium and undetected-chromedriver.

Selenium provides APIs to programmatically control a browser, and undetected-chromedriver patches Selenium's ChromeDriver so that websites have a much harder time telling that they are being accessed by an automated browser. This is often enough to get past many common anti-bot checks. Pair this with a rotating proxy solution like the one ScraperAPI provides, and you have a fairly good setup for scraping most websites.

You can run these commands in the terminal to quickly bootstrap and satisfy all the requirements:

```shell
$ mkdir scrape-zillow
$ cd scrape-zillow
$ touch app.py
$ pip install selenium undetected-chromedriver
```

This tutorial uses Python version 3.10.0, but the code is very generic and should work with most recent Python versions. If you are impatient, you can also look at the complete code at the end of the article.

Fetching Zillow Search Page

Just to make sure everything is set up correctly, go ahead and add this code to the app.py file and run it:

```python
import undetected_chromedriver as uc

# Launch a patched Chrome instance and open the Bellevue, WA search results
driver = uc.Chrome(use_subprocess=True)
driver.get("https://www.zillow.com/bellevue-wa/")
```

If things go well, you should be greeted by a Chrome window that navigates to the Zillow search results page for Bellevue, WA. If something goes wrong and the code throws an error, debug it using Google (or maybe ChatGPT!). Once you are in the clear, move on to the next step.

An important thing to note is that the search query is encoded in the URL. Take the URL in the code above and replace bellevue-wa with san-francisco-ca, and Zillow will show properties from San Francisco, CA instead. This means you can simply change the location in the URL, and the rest of the code should continue working as expected. This is worth noting now, as the rest of the article will not cover it.
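Since the location lives in the URL path, a tiny helper can build it for you. This is a minimal sketch: build_search_url is a hypothetical helper, and it assumes Zillow keeps the city-state slug pattern seen above (bellevue-wa, san-francisco-ca), which may not hold for every location.

```python
import re

ZILLOW_BASE_URL = "https://www.zillow.com"


def build_search_url(city: str, state: str) -> str:
    """Build a search URL such as https://www.zillow.com/bellevue-wa/.

    Assumes the <city-slug>-<state> pattern from the examples above.
    """
    # Lowercase the city and collapse runs of non-alphanumeric characters into hyphens
    city_slug = re.sub(r"[^a-z0-9]+", "-", city.lower()).strip("-")
    return f"{ZILLOW_BASE_URL}/{city_slug}-{state.lower()}/"


print(build_search_url("Bellevue", "WA"))       # https://www.zillow.com/bellevue-wa/
print(build_search_url("San Francisco", "CA"))  # https://www.zillow.com/san-francisco-ca/
```

You could then call driver.get(build_search_url("San Francisco", "CA")) in place of the hard-coded URL.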

Deciding What to Scrape

Now that the base setup is working, it is very important to decide what data you want to scrape before moving on. Working on any web scraping project without a plan is a recipe for disaster. Ideally, you will have this figured out before you touch a single line of code.

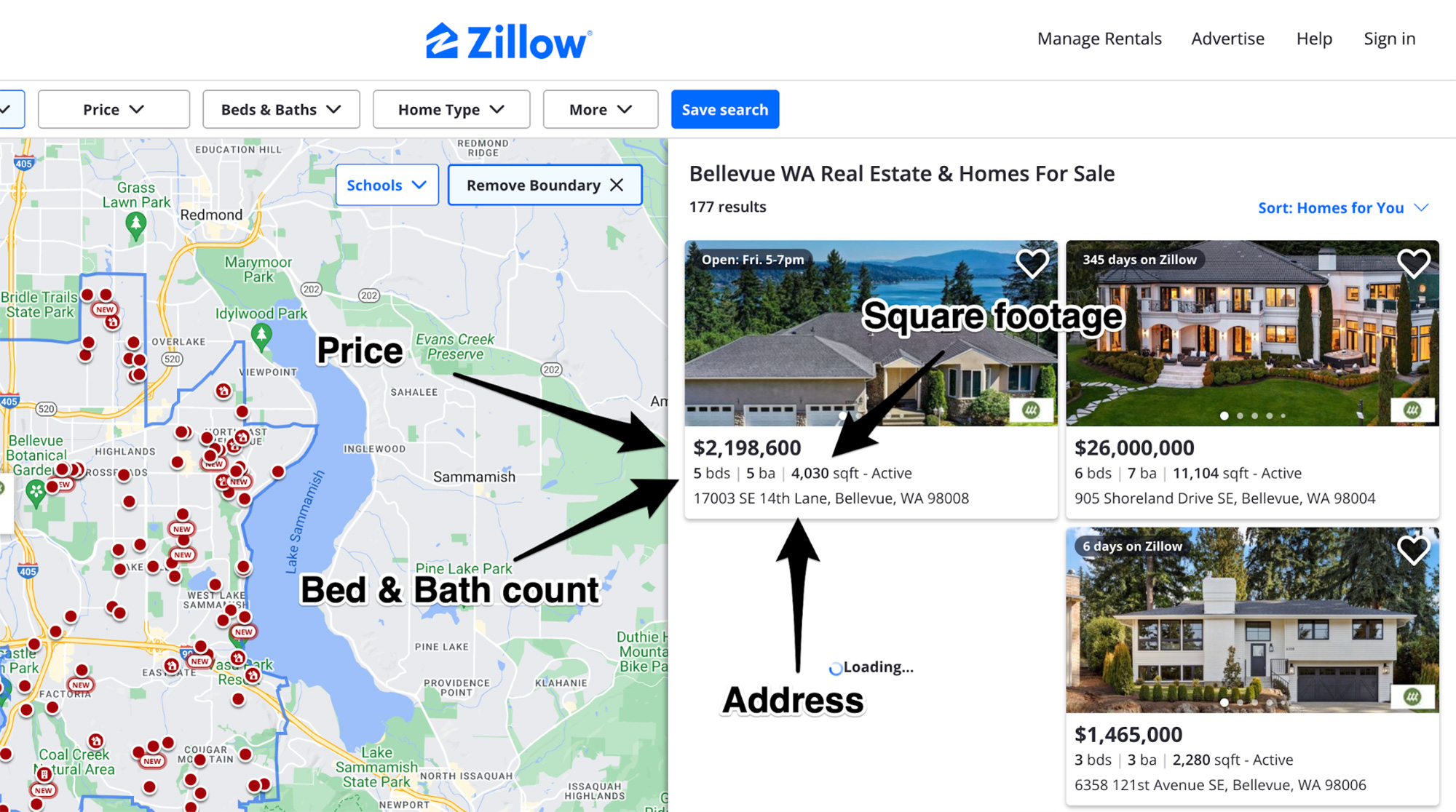

In this tutorial, you will be scraping the following information about a property listing:

- Property price

- Bed count

- Bath count

- Square footage (area)

- Address

The screenshot below shows where all of this information is listed on the search results page:

The final output of the code will resemble this:

```python
[{
    'price': '$2,198,600',
    'beds': '5',
    'baths': '5',
    'sqft': '4,030',
    'address': '17003 SE 14th Lane, Bellevue, WA 98008'
}, {
    'price': '$26,000,000',
    'beds': '6',
    'baths': '7',
    'sqft': '11,104',
    'address': '905 Shoreland Drive SE, Bellevue, WA 98004'
}, {
    'price': '$1,465,000',
    'beds': '3',
    'baths': '3',
    'sqft': '2,280',
    'address': '6358 121st Avenue SE, Bellevue, WA 98006'
}]
```
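All of these values come back as strings, exactly as they appear on the page. If you plan to sort or filter listings, an optional post-processing step (not part of the scraper in this article) could convert the numeric fields. This minimal sketch assumes values look like the sample above, digits with commas plus an optional $ and decimal point; abbreviated prices such as $1.2M would need extra handling. to_number and normalize_listing are hypothetical helper names.

```python
import re
from typing import Optional

NUMERIC_FIELDS = ("price", "beds", "baths", "sqft")


def to_number(value: Optional[str]) -> Optional[float]:
    """Turn strings like '$2,198,600' or '4,030' into numbers; None if no number is found."""
    if value is None:
        return None
    # Grab the first number, allowing thousands separators and a decimal part
    match = re.search(r"\d[\d,]*(?:\.\d+)?", value)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def normalize_listing(listing: dict) -> dict:
    """Return a copy of a scraped listing with its numeric fields converted."""
    normalized = dict(listing)  # copy so the scraped dict is left untouched
    for field in NUMERIC_FIELDS:
        # Fields can be missing, since not every card has beds, baths, or sqft
        if field in normalized:
            normalized[field] = to_number(normalized[field])
    return normalized
```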

Scraping the Listing Cards

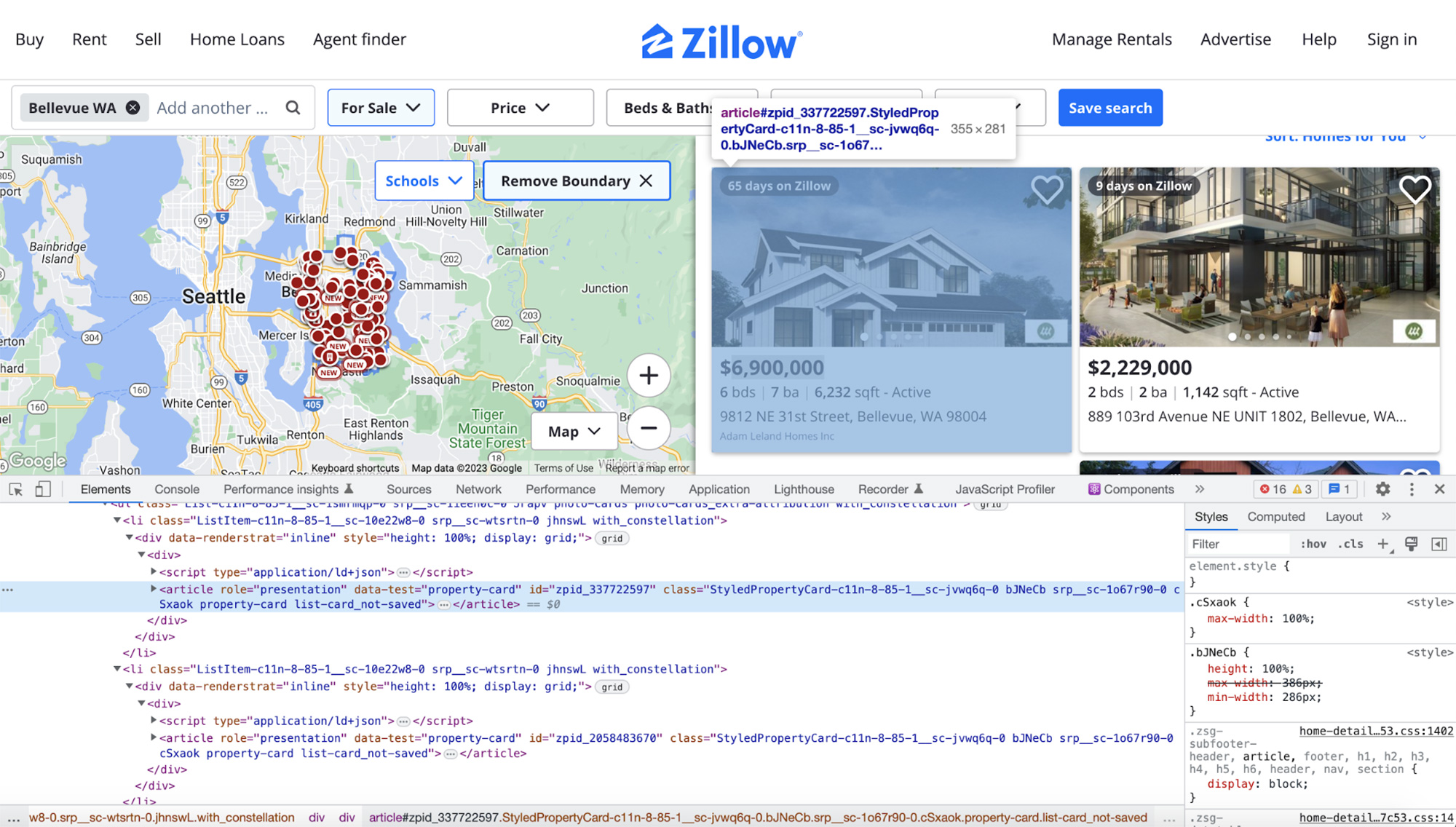

The most indispensable tools for any web scraper are the Developer Tools included in most browsers, and almost all web scraping projects involve a variation of the same basic workflow. You will use the Developer Tools to inspect the HTML of the page and then run XPath or CSS selector queries (using find_element and find_elements) in the Python REPL to make sure you get the data you expect.

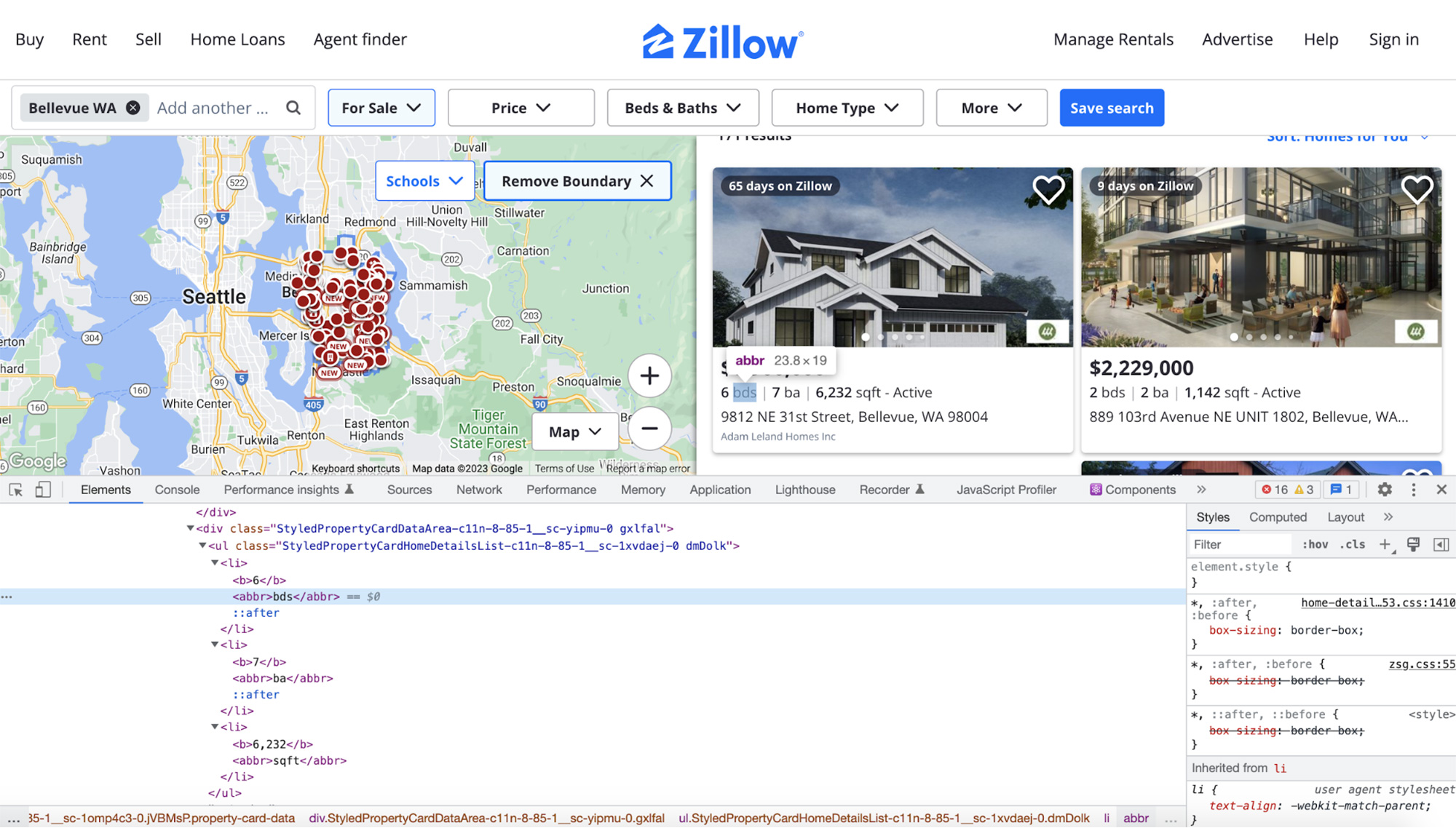

This is the best time to explore the HTML of the web page. Right-click on an area of interest and click “Inspect”. This should open the Elements panel of the Developer Tools. Look around and see if you can pinpoint the tags that encapsulate the required data.

It seems like each property listing card is wrapped in an article tag and each of these article tags has a data-test attribute set to property-card:

You can use this information to write some code to extract all of these individual listing cards into a list. Go to app.py and add the following code:

```python
from selenium.webdriver.common.by import By

# ...truncated...

properties = driver.find_elements(By.XPATH, "//article[@data-test='property-card']")
```

This code is using an XPath query to extract the listing cards. You can deconstruct and read the query like this:

- //article: Find all article tags in the HTML

- [@data-test='property-card']: that have the data-test attribute set to property-card
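Zillow's markup changes over time, so it helps to sanity-check a selector before wiring it into the scraper. As an offline illustration, this sketch swaps Selenium for Python's standard-library xml.etree.ElementTree, which supports a small subset of XPath that includes attribute predicates, and runs the same predicate on a hypothetical, simplified snippet. Real pages are rarely well-formed XML, so this only checks the query logic; on the live page, you would still use driver.find_elements.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup modeled on the structure described above;
# Zillow's real HTML is much larger and changes over time.
SAMPLE_HTML = """
<div id="results">
  <article data-test="property-card">
    <span data-test="property-card-price">$2,198,600</span>
  </article>
  <article data-test="property-card">
    <span data-test="property-card-price">$1,465,000</span>
  </article>
  <article data-test="ad-card">Sponsored</article>
</div>
"""

root = ET.fromstring(SAMPLE_HTML)

# ElementTree has no absolute "//" search here, so ".//" (all descendants of the
# root) stands in for the "//" used with Selenium.
cards = root.findall(".//article[@data-test='property-card']")
print(len(cards))  # 2
```

The third article is skipped because its data-test value does not match, which is exactly what the predicate is for.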

Scraping Individual Listing Data

Now that you have all the property listings in a list, you can loop over them and extract the required information from each listing.

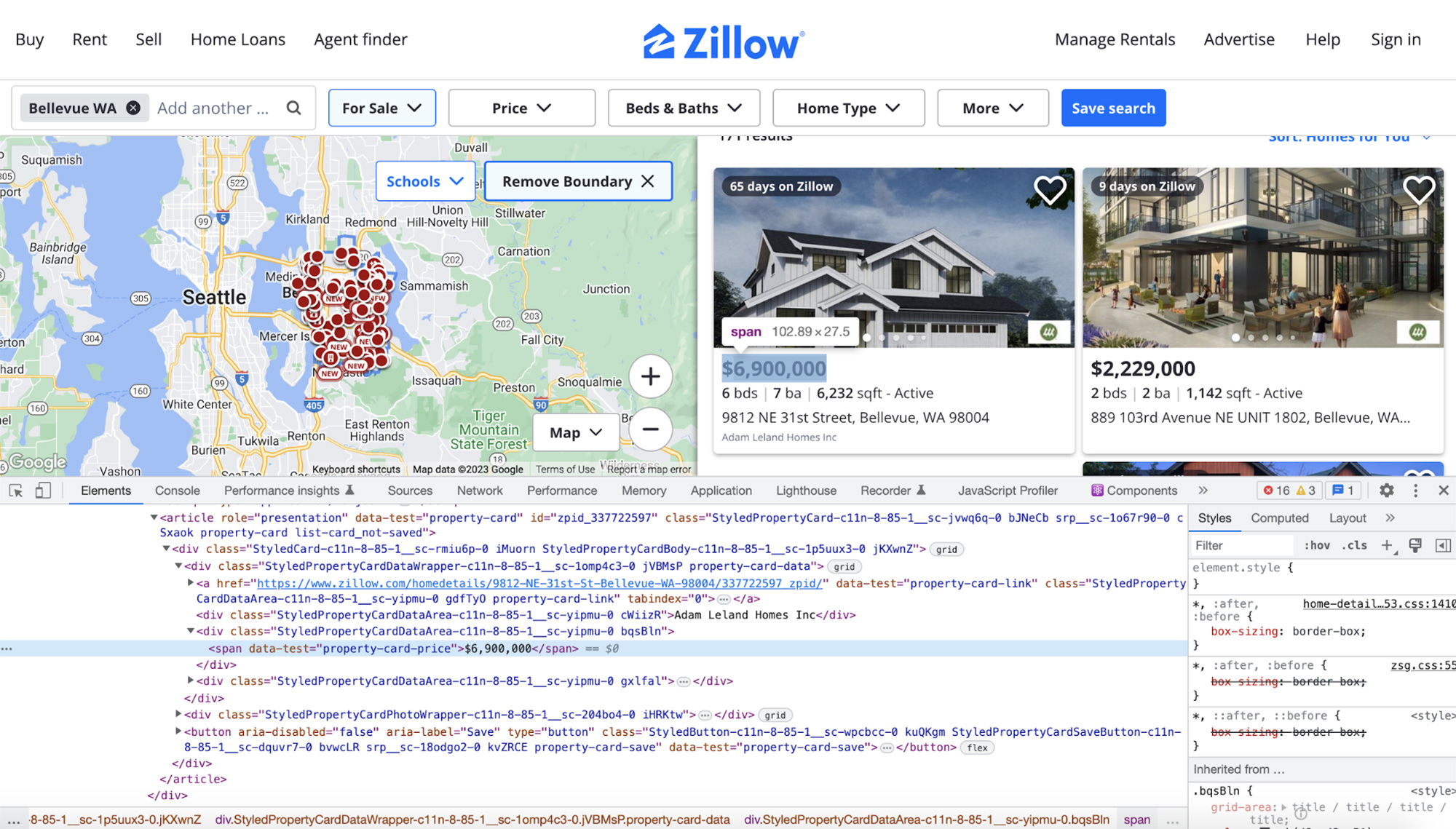

Extracting price data

Explore the HTML a bit more and try to figure out the uniquely identifiable tags that encapsulate the price information.

It seems like this information is encapsulated by a span with the data-test attribute set to property-card-price:

Let’s add some more code to the app.py file to loop over all the properties and extract their price:

```python
# ...

all_properties = []

for property in properties:
    property_data = {}

    property_data['price'] = property.find_element(By.XPATH, ".//span[@data-test='property-card-price']").text

    all_properties.append(property_data)
```

The code defines a new all_properties list to store the extracted data for each property. It then loops over all the extracted property cards and pulls the pricing information from each one using an XPath query. The only significant difference between this query and the one in the last section is that this one begins with a period (.). This matters because the leading period scopes the XPath query to the individual property card rather than the whole HTML document. Without it, a query such as //span[@data-test='property-card-price'] would search the entire page even when called on a card element, and every listing would end up with the same price.
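The effect of the leading period can be reproduced offline. This sketch again uses Python's standard-library xml.etree.ElementTree as a stand-in for Selenium, with a hypothetical two-card snippet. ElementTree refuses absolute paths on an element, so the unscoped case is emulated by searching from the document root, which is what Selenium effectively does with a // query even when it is called on a card.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup with two listing cards (not Zillow's real HTML)
SAMPLE_HTML = """
<div>
  <article data-test="property-card">
    <span data-test="property-card-price">$2,198,600</span>
  </article>
  <article data-test="property-card">
    <span data-test="property-card-price">$1,465,000</span>
  </article>
</div>
"""

PRICE_XPATH = ".//span[@data-test='property-card-price']"

root = ET.fromstring(SAMPLE_HTML)
cards = root.findall(".//article[@data-test='property-card']")

# Stand-in for an unscoped "//span[...]": the search starts at the document
# root, so every card gets the first price on the page.
unscoped = [root.find(PRICE_XPATH).text for _card in cards]

# Scoped ".//span[...]": the search only looks inside the card it is called on.
scoped = [card.find(PRICE_XPATH).text for card in cards]

print(unscoped)  # ['$2,198,600', '$2,198,600']
print(scoped)    # ['$2,198,600', '$1,465,000']
```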

Extracting beds, baths, and square footage data

With the prices sorted out, continue exploring the HTML surrounding the beds, baths, and square footage information. This is what it looks like:

Based on this screenshot, it seems like there isn’t any unique attribute that we can target. However, the structure of this HTML is very methodical and similar for each property listing. Lucky for us, XPath provides us with a way to target a tag based on the text it contains. You will use this method to target the abbr tags that contain bds, ba, and sqft text and then extract the numerical data from the preceding sibling tag.
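To make this text-based lookup concrete, the sketch below emulates it offline: find an abbr whose text contains a label, then step back to the nearest b sibling. ElementTree supports neither contains() nor the preceding-sibling axis, so the logic is spelled out by hand. The markup is a hypothetical simplification in which each count and its label share a parent, and value_before_label is a hypothetical helper; Zillow's actual structure may differ.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical card markup: each number sits in a <b> right before its <abbr> label
CARD_HTML = """
<article data-test="property-card">
  <ul>
    <li><b>5</b> <abbr>bds</abbr></li>
    <li><b>5</b> <abbr>ba</abbr></li>
    <li><b>4,030</b> <abbr>sqft</abbr></li>
  </ul>
</article>
"""


def value_before_label(card: ET.Element, label: str) -> Optional[str]:
    """Return the text of the nearest <b> before an <abbr> containing label, or None."""
    # Walk every parent so siblings can be compared in document order
    for parent in card.iter():
        children = list(parent)
        for index, child in enumerate(children):
            if child.tag == "abbr" and label in (child.text or ""):
                # Look backwards for the closest preceding <b> sibling
                for sibling in reversed(children[:index]):
                    if sibling.tag == "b":
                        return sibling.text
    return None  # no such label on this card, like a listing without sqft


card = ET.fromstring(CARD_HTML)
print(value_before_label(card, "bds"))    # 5
print(value_before_label(card, "sqft"))   # 4,030
print(value_before_label(card, "acres"))  # None
```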

One important thing to note is that not every property listing contains the beds, baths, and square footage data. Therefore, the code needs to be resilient to such cases; otherwise, Selenium will raise NoSuchElementException.

Update the code in app.py to extract this new data:

```python
# ...

for property in properties:
    property_data = {}

    # ...

    if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'bds')]/preceding-sibling::b"):
        property_data['beds'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'bds')]/preceding-sibling::b").text

    if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'ba')]/preceding-sibling::b"):
        property_data['baths'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'ba')]/preceding-sibling::b").text

    if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'sqft')]/preceding-sibling::b"):
        property_data['sqft'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'sqft')]/preceding-sibling::b").text

    # ...
```

Since these XPaths are slightly different from the ones you saw earlier, let’s deconstruct one of them:

- .//abbr: Find all abbr tags in a property listing card

- [contains(text(), 'bds')]: that contain the text bds

- /preceding-sibling::b: and navigate to their preceding b sibling

The code wraps each of these lookups in an if statement because, as mentioned earlier, not every property listing contains this data. When nothing matches, find_elements returns an empty list (which is falsy) instead of raising an error, so it works as an existence check before find_element is called.
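Each if statement above runs the same XPath twice: once with find_elements to check for a match and once with find_element to fetch it. As a hedged alternative (not the article's code), a single find_elements call can do both, halving the number of browser round trips. first_text is a hypothetical helper name; the only Selenium API it relies on is WebElement.find_elements.

```python
from typing import Optional

try:
    from selenium.webdriver.common.by import By
    XPATH = By.XPATH
except ImportError:  # lets this sketch run without Selenium installed
    XPATH = "xpath"  # the string value of Selenium's By.XPATH


def first_text(element, xpath: str) -> Optional[str]:
    """Return the text of the first match for xpath inside element, or None.

    find_elements returns an empty list instead of raising
    NoSuchElementException, so one call both checks and fetches.
    """
    matches = element.find_elements(XPATH, xpath)
    return matches[0].text if matches else None


# Usage inside the loop (sketch):
# beds = first_text(property, ".//abbr[contains(text(), 'bds')]/preceding-sibling::b")
# if beds is not None:
#     property_data['beds'] = beds
```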

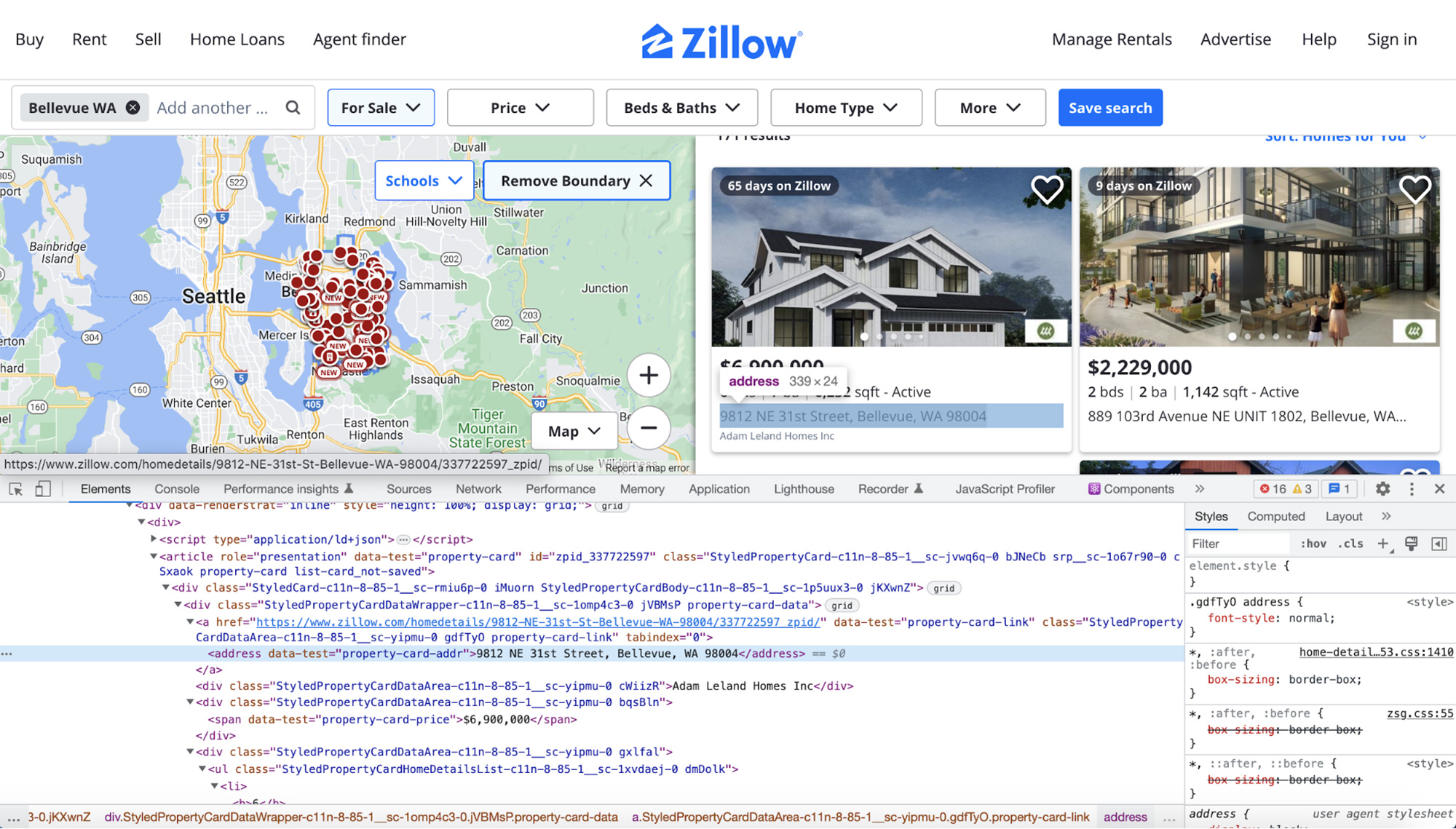

Extracting the address

By now, you are already aware of the process. Explore the HTML yet again and figure out the tags you can target for extracting the property address.

This one is simpler. Every property card has a nested address tag that contains the actual address:

This is what the updated code should look like:

```python
# ...

for property in properties:
    property_data = {}

    # ...

    property_data['address'] = property.find_element(By.XPATH, ".//address").text

    # ...
```

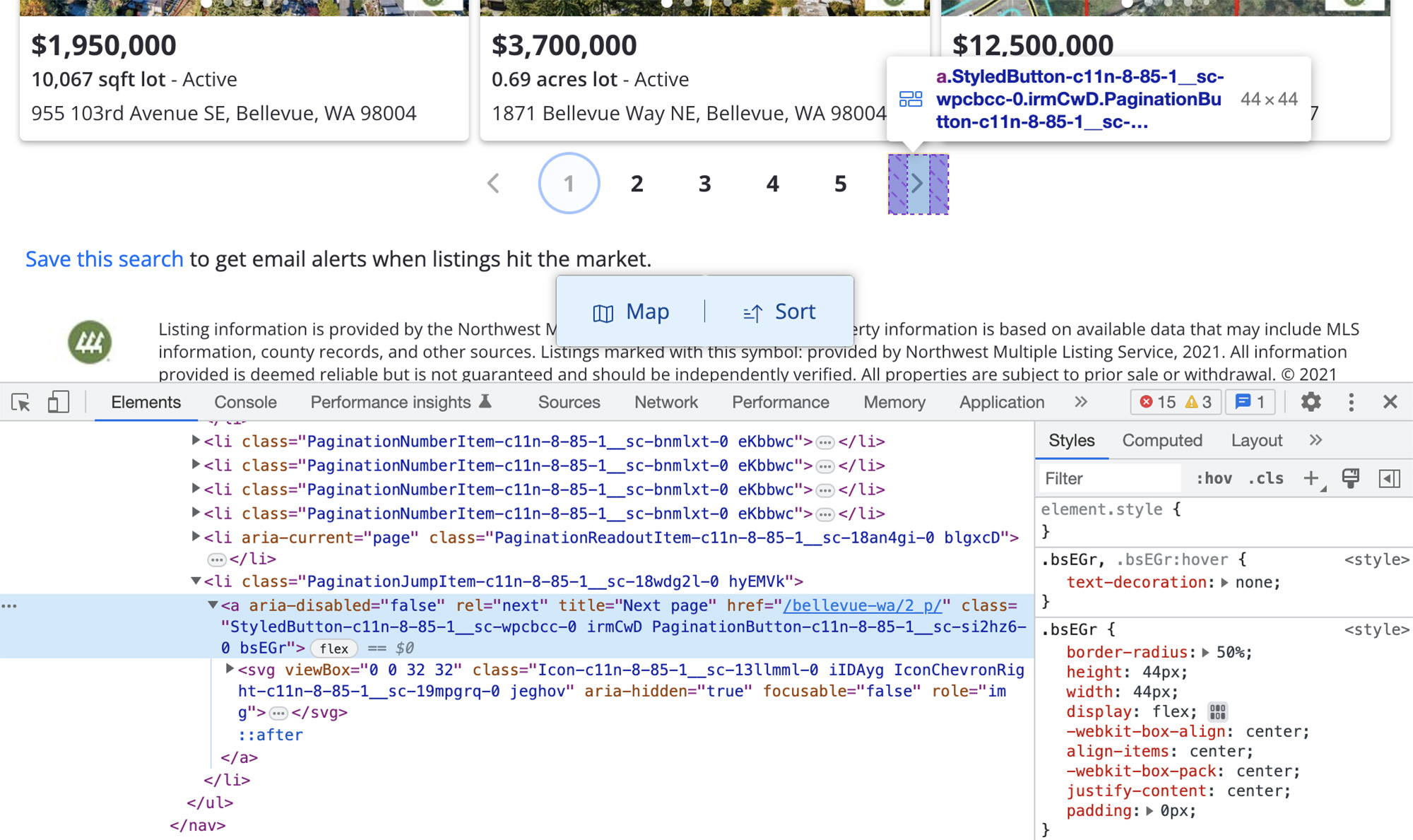

Making Use of Pagination

Now that the actual data extraction is sorted out, let’s brainstorm how to handle the pagination. Currently, each search result page lists only 40 results. The rest of the results are hidden behind pagination. You can find pagination links at the bottom of the results page. You need to devise a method through which your code will automatically navigate to the next page after extracting the property listings from the current page.

Like always, this is when you go and explore the HTML of the page to figure out a clever plan to solve the problem. This is what the HTML for the pagination looks like:

The screenshot might have already given you a hint about what you are going to do next. Every pagination section has a next page button (>) that you can click to go to the next page. On the last page, this button's aria-disabled attribute is set to true, which tells you that you have extracted all the listings.
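This stopping condition can also be checked offline. The minimal sketch below uses xml.etree.ElementTree to look for a next link that is not disabled in two hypothetical pagination snippets; the href value is made up, and next_page_href is a hypothetical helper. ElementTree does not support and inside a predicate, so two chained predicates express the same condition.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Chained predicates: rel must be "next" AND aria-disabled must be "false"
NEXT_XPATH = ".//a[@rel='next'][@aria-disabled='false']"


def next_page_href(html: str) -> Optional[str]:
    """Return the href of an enabled next-page link, or None on the last page."""
    root = ET.fromstring(html)
    link = root.find(NEXT_XPATH)
    return link.get("href") if link is not None else None


# Hypothetical pagination markup; the href is made up for illustration
MIDDLE_PAGE = '<nav><a rel="next" aria-disabled="false" href="/page-2/">&gt;</a></nav>'
LAST_PAGE = '<nav><a rel="next" aria-disabled="true">&gt;</a></nav>'

print(next_page_href(MIDDLE_PAGE))  # /page-2/
print(next_page_href(LAST_PAGE))    # None
```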

Let’s update the code in app.py to handle pagination. First, you will need to update the pre-existing code and wrap it in a function. This will clean it up a bit and prevent code duplication when you add the pagination logic:

```python
from pprint import pprint

# ... (earlier imports and driver setup)

all_properties = []


def extract_properties():
    properties = driver.find_elements(By.XPATH, "//article[@data-test='property-card']")

    for property in properties:
        property_data = {}

        property_data['price'] = property.find_element(By.XPATH, ".//span[@data-test='property-card-price']").text

        if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'bds')]/preceding-sibling::b"):
            property_data['beds'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'bds')]/preceding-sibling::b").text

        if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'ba')]/preceding-sibling::b"):
            property_data['baths'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'ba')]/preceding-sibling::b").text

        if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'sqft')]/preceding-sibling::b"):
            property_data['sqft'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'sqft')]/preceding-sibling::b").text

        property_data['address'] = property.find_element(By.XPATH, ".//address").text

        all_properties.append(property_data)

    # Pretty-print everything collected so far once this page is done
    pprint(all_properties)
```

Now add the following code at the end to handle pagination:

```python
from selenium.common.exceptions import NoSuchElementException

# ...

# Scrape the first page
extract_properties()

while True:
    try:
        # Only matches while the next-page link is still enabled
        next_page_url = driver.find_element(By.XPATH, "//a[@rel='next' and @aria-disabled='false']").get_attribute('href')
        driver.get(next_page_url)
    except NoSuchElementException:
        # No enabled next link: this is the last page
        break

    extract_properties()

print(all_properties)
```

The code is fairly straightforward. It uses an XPath query to find an anchor tag whose rel attribute is set to next and whose aria-disabled attribute is set to false. As long as such a link exists, the loop navigates to its href and extracts that page's listings. On the last page, aria-disabled is set to true, so the query no longer matches anything and find_element raises NoSuchElementException. At that point, the code breaks out of the loop and prints the extracted data.
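The while True loop relies entirely on the disabled next button to stop. As a hedged variation (not the article's code), the same control flow can be written with injectable callables plus two safety stops, a page cap and a set of already-visited URLs, so it can also be exercised without a browser. crawl, scrape_page, find_next_url, and max_pages are hypothetical names; with Selenium, scrape_page would call driver.get(url) followed by your extraction code, and find_next_url would run the next-link query on the page that is currently loaded.

```python
from typing import Callable, Iterable, List, Optional


def crawl(
    start_url: str,
    scrape_page: Callable[[str], Iterable[dict]],
    find_next_url: Callable[[str], Optional[str]],
    max_pages: int = 50,
) -> List[dict]:
    """Follow next-page links until none remain, a URL repeats, or max_pages is hit."""
    results: List[dict] = []
    seen = set()
    url: Optional[str] = start_url
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        results.extend(scrape_page(url))  # load the page and collect its listings
        url = find_next_url(url)          # None once the next link is disabled
    return results
```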

Complete Code

If you have been following along closely, this is the final code you should have ended up with:

```python
from pprint import pprint

import undetected_chromedriver as uc
from selenium.webdriver.common.by import By
from selenium.common.exceptions import NoSuchElementException

driver = uc.Chrome(use_subprocess=True)
driver.get("https://www.zillow.com/bellevue-wa/")

all_properties = []


def extract_properties():
    properties = driver.find_elements(By.XPATH, "//article[@data-test='property-card']")

    for property in properties:
        property_data = {}

        property_data['price'] = property.find_element(By.XPATH, ".//span[@data-test='property-card-price']").text

        if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'bds')]/preceding-sibling::b"):
            property_data['beds'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'bds')]/preceding-sibling::b").text

        if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'ba')]/preceding-sibling::b"):
            property_data['baths'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'ba')]/preceding-sibling::b").text

        if property.find_elements(By.XPATH, ".//abbr[contains(text(), 'sqft')]/preceding-sibling::b"):
            property_data['sqft'] = property.find_element(By.XPATH, ".//abbr[contains(text(), 'sqft')]/preceding-sibling::b").text

        property_data['address'] = property.find_element(By.XPATH, ".//address").text

        all_properties.append(property_data)

    pprint(all_properties)


extract_properties()

while True:
    try:
        next_page_url = driver.find_element(By.XPATH, "//a[@rel='next' and @aria-disabled='false']").get_attribute('href')
        driver.get(next_page_url)
    except NoSuchElementException:
        break

    extract_properties()

print(all_properties)
```

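This code combines all of the snippets above in one file, with every import, including from pprint import pprint, at the top. The pprint() call at the end of extract_properties(), after its for loop, is useful because it “pretty” prints the property data collected so far as soon as each page is scraped.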

Try running this code, and you should start seeing scraped data in the terminal. Keep in mind that you should also invest in a rotating proxy solution such as ScraperAPI so that Zillow does not block your IP address due to excessive requests.

Conclusion

In this article, you learned how to scrape data from Zillow’s search results page. You saw how XPaths work and how to use them in creative ways to target and find HTML elements. You also added pagination handling to the code. If you want to scrape Zillow at scale, it is important to know the limitations of the current setup: right now, it is very easy for Zillow to detect and ban your IP address for excessive requests. You can work around that by using rotating proxies from a reliable proxy provider like ScraperAPI. This greatly reduces the risk of your personal IP being banned and helps your scraping project keep chugging along without a hitch.

We are scraping and proxy experts here at ScraperAPI. If you have a project that needs help, don’t hesitate to reach out to us.