Over 7.59 million active websites use Cloudflare. If you can’t tell, that is a big number! And the chances of you running into a Cloudflare-protected website are very high.

If you have tried scraping such websites, then you might already be aware of how difficult it is to get around Cloudflare’s automated bot detection system. Today, you are in luck, as you will get to learn how to scrape Cloudflare-protected websites using Python.

You will use the wonderful open-source Cloudscraper project to do this. By the end, you will also become aware of the limitations of this project and why ScraperAPI is a more powerful and reliable alternative to Cloudscraper.

Note: Cloudflare keeps changing its bot detection system, which regularly breaks open-source projects like Cloudscraper. If you value your time and have large scraping jobs that need scale or scheduled runs, consider using ScraperAPI. It lets you scrape domains across many concurrent threads without getting blocked by Cloudflare.

How to Use Cloudscraper to Scrape Cloudflare Protected Websites

Let’s review how to set up a Cloudscraper project to scrape Glassdoor pages at a low scale.

If you are an advanced web scraping user, you can skip to the other method described in this article: using ScraperAPI. Its API gives you higher scalability and lets you schedule jobs and automate the scraping of Cloudflare-protected websites.

Step 1. Setting up the prerequisites

Make sure you have Python installed on your system. You can use Python version 3.6 or higher for this tutorial. Go ahead and create a new directory where all the code for this project will be stored and create an app.py file within it:

$ mkdir web_scraper

$ cd web_scraper

$ touch app.py

Next, you need to install cloudscraper and requests. You can easily do that via pip:

$ pip install cloudscraper requests

Step 2. Making a simple request using requests

You will be scraping Glassdoor in this tutorial. Here is what the homepage of Glassdoor looks like:

This website is protected by Cloudflare and cannot easily be scraped using requests. You can confirm that by making a simple GET request using requests like this:

import requests

html = requests.get("https://www.glassdoor.com/")

print(html.status_code)

# 403

As expected, Glassdoor returns a 403 Forbidden status code and not the actual homepage. This status code is returned by the Cloudflare bot detection system that Glassdoor is using. You can save the response in a local file and open it up in your browser to get a better understanding of what is going on.

You can use this code to save the response:

with open("response.html", "wb") as f:

    f.write(html.content)

When you open the response.html file in your browser, you should see something like this:

Now that you know your target website is being protected by Cloudflare, do you have any viable options to bypass it?
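If you would rather confirm this programmatically than by opening the file, a small heuristic can help. The sketch below is not part of requests or Cloudscraper; the function name is made up for this article, and it assumes that Cloudflare-served responses carry a `Server: cloudflare` or `CF-RAY` header and that blocks arrive with a 403 or 503 status code.

```python
def looks_like_cloudflare_block(status_code, headers):
    """Heuristic: True if a response looks like it was blocked by Cloudflare.

    Assumption: Cloudflare-served responses carry a `Server: cloudflare`
    header or a `CF-RAY` header, and blocks use a 403 or 503 status code.
    """
    # Header names are case-insensitive, so normalise them first.
    normalised = {name.lower(): str(value).lower() for name, value in headers.items()}
    served_by_cloudflare = (
        "cloudflare" in normalised.get("server", "") or "cf-ray" in normalised
    )
    return status_code in (403, 503) and served_by_cloudflare
```

With the response from the previous step, you could call it as `looks_like_cloudflare_block(html.status_code, html.headers)`. Treat a `True` result as a strong hint rather than proof, since other setups can produce the same combination.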

Step 3. Scraping Glassdoor.com using Cloudscraper

Luckily this is where Cloudscraper comes in. It is built on top of Requests and has some intelligent logic to parse the challenge page returned by Cloudflare and submit the appropriate response to successfully get around it. Its biggest limitation is that it can only bypass Cloudflare bot detection version 1 and not version 2. However, if your target website is using bot detection version 1 then this is a very reasonable solution. We will discuss this limitation in detail in an upcoming step.

Another noteworthy fact is that Cloudscraper does not run a complete browser engine by default. Therefore, it is considerably faster than other similar solutions that rely on headless browsers or browser emulation.

Note: If you are trying to scrape a website that is using version 2 of Cloudflare’s bot detection system, you can save some time and skip to the very end of this tutorial and read about ScraperAPI as that is a potential solution for your use case.

Let’s repeat the same GET request to Glassdoor.com but this time using Cloudscraper. Open up the Python REPL and type this code:

import cloudscraper

scraper = cloudscraper.create_scraper()

html = scraper.get("https://www.glassdoor.com/")

print(html.status_code)

# 200

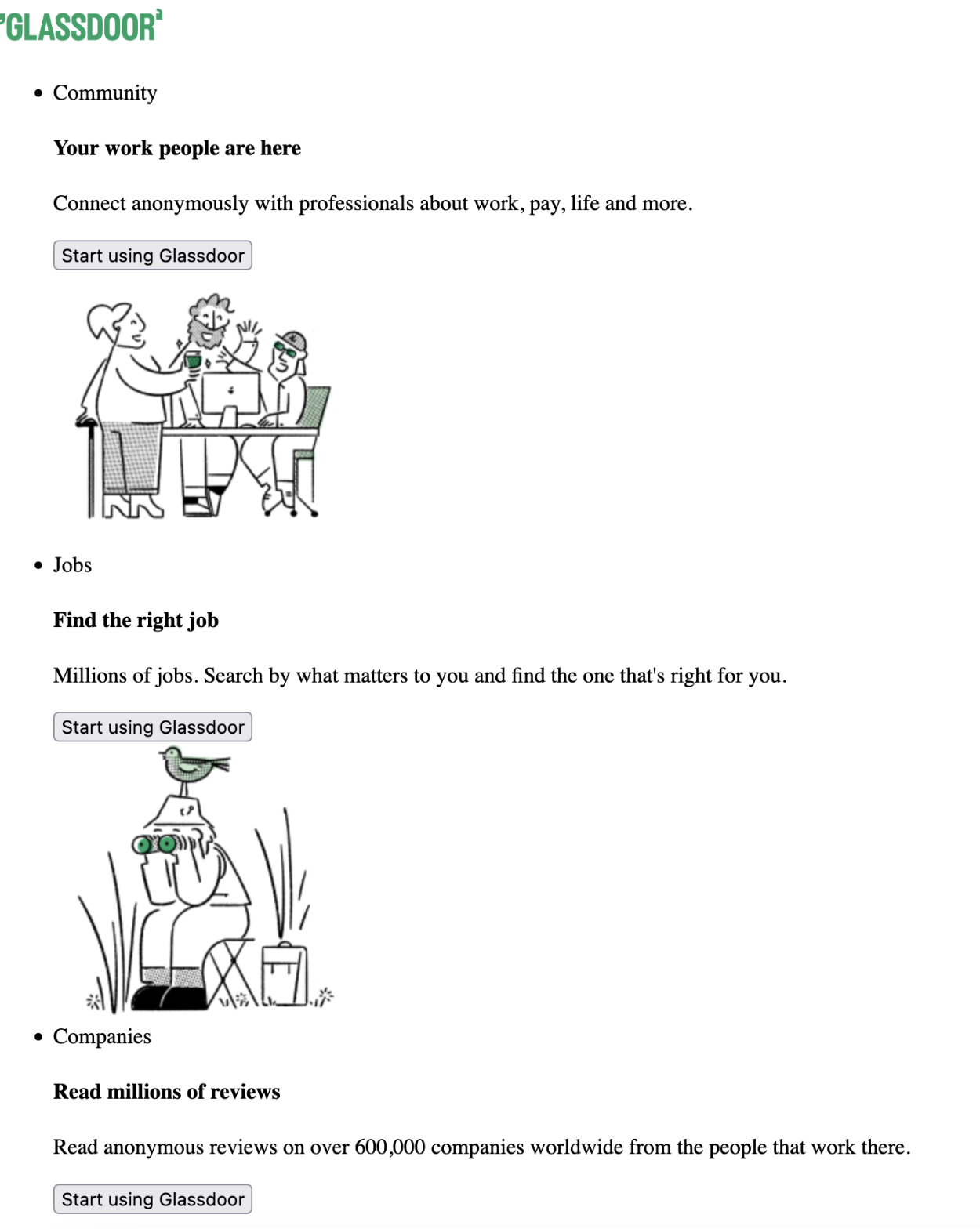

Sweet! Cloudflare did not block our request this time. Go ahead, save the html into a local file as we did in the previous step, and open it up in your browser. You should see the homepage of Glassdoor. The UI is a bit wonky because of broken CSS and JS links (as we haven’t saved the CSS and JS locally) but all the data is there:

Just by changing a few lines of code, you were able to bypass Cloudflare’s bot detection system!
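If you want to fold the request and the save step into one reusable piece, here is a minimal sketch. The `fetch_and_save` helper and the `glassdoor.html` filename are made up for this example; the helper only assumes it receives something with a requests-style `.get()` method, which a Cloudscraper scraper provides.

```python
from pathlib import Path


def fetch_and_save(session, url, out_path="response.html"):
    """Fetch `url` with a requests-style session and save the body to disk.

    `session` is anything with a `.get(url)` method that returns an object
    exposing `.status_code` and `.content`, such as a Cloudscraper scraper.
    """
    response = session.get(url)
    # Write raw bytes so the page's original encoding is preserved.
    Path(out_path).write_bytes(response.content)
    return response.status_code


def main():
    # Imported here so the helper above can be reused without Cloudscraper.
    import cloudscraper

    scraper = cloudscraper.create_scraper()
    status = fetch_and_save(scraper, "https://www.glassdoor.com/", "glassdoor.html")
    print(status)
```

Calling `main()` performs the real request. Because the helper takes the session as an argument, you can also pass a plain `requests.Session()` to compare the two results side by side.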

Step 4. Using advanced options of Cloudscraper

Cloudscraper provides a ton of configurable options out of the box. Let’s take a look at a few of them.

4.1 Built-in support for captcha solvers

Cloudscraper has built-in support for a few 3rd Party Captcha Solvers in case you might need them. For example, if your requests are hitting a captcha page, you can simply hook up 2captcha with Cloudscraper as a captcha solution provider:

scraper = cloudscraper.create_scraper(

    captcha={

        'provider': '2captcha',

        'api_key': 'your_2captcha_api_key'

    }

)

So far, Cloudscraper supports the following captcha services out of the box:

- 2captcha

- anticaptcha

- CapSolver

- CapMonster Cloud

- deathbycaptcha

- 9kw

- return_response (not a solving service: it hands the captcha response back to your code without solving it)

4.2 Using a custom proxy

More often than not, you will want to route all of the scraping traffic through a proxy. This will make sure your real IP is masked and is not banned by the target website for excessive requests. Thankfully, Cloudscraper provides support for using custom proxies. This comes as a side-effect of Cloudscraper being built on top of the amazing requests library.

If you want to use a custom proxy with Cloudscraper, you can define a dictionary containing the HTTP and HTTPS proxy endpoints and Cloudscraper will make sure to use the appropriate one for any future requests. Here is some code that demonstrates this:

import cloudscraper

proxies = {"http": "http://localhost:8080", "https": "http://localhost:8080"}

scraper = cloudscraper.create_scraper()

scraper.proxies.update(proxies)

html = scraper.get("https://www.glassdoor.com/")

print(html.status_code)

# 200

You can further enhance this custom proxy setup by adding logic for rotating proxies. Look at this other article on our website to learn how to do that.
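As a rough idea of what rotation can look like, the sketch below cycles through a pool of proxies and yields a requests-style proxy dictionary for each request. The pool entries are hypothetical local endpoints and the `proxy_rotation` name is made up for this example; real setups usually add health checks and retries on top.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace them with proxies you actually control.
PROXY_POOL = [
    "http://localhost:8080",
    "http://localhost:8081",
    "http://localhost:8082",
]


def proxy_rotation(pool):
    """Yield requests-style proxy dicts, cycling through `pool` forever."""
    for endpoint in cycle(pool):
        # Use the same endpoint for both schemes, as in the example above.
        yield {"http": endpoint, "https": endpoint}
```

You would create the generator once with `rotation = proxy_rotation(PROXY_POOL)` and then pass `proxies=next(rotation)` to each `scraper.get()` call. Keep in mind that a passed Cloudflare challenge is tied to the IP that solved it, so switching proxies mid-session may trigger a new challenge.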

4.3 Extracting just the Cloudflare token

When you bypass Cloudflare bot detection, Cloudflare responds with a cookie that has to be passed with all follow-up requests to the target website. This cookie informs Cloudflare that your browser has already passed the bot detection challenge and should be allowed to go through without additional challenges. Cloudscraper provides handy methods to extract this cookie (or the tokens within it). Here is how you can use them:

import cloudscraper

tokens, user_agent = cloudscraper.get_tokens("https://www.glassdoor.com/")

print(tokens)

# {

# 'cf_clearance': 'c8f913c707b818b47aa328d81cab57c349b1eee5-1426733163-3600',

# '__cfduid': 'dd8ec03dfdbcb8c2ea63e920f1335c1001426733158'

# }

cookie_value, user_agent = cloudscraper.get_cookie_string("https://www.glassdoor.com/")

print(cookie_value)

# cf_clearance=c8f913c707b818b47aa328d81cab57c349b1eee5-1426733163-3600; __cfduid=dd8ec03dfdbcb8c2ea63e920f1335c1001426733158

This is very useful as it allows you to use Cloudscraper to bypass the bot detection system and then use the resulting cookies with the follow-up requests from your pre-existing web scraping code. You just need to make sure that the follow-up requests send the same User-Agent string (the second value returned above) and come from the same IP as the one used while bypassing the bot detection system. If either one differs, Cloudflare will block your request and ask you to re-verify.
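Here is a minimal sketch of that hand-off, assuming your existing code uses plain requests. The `follow_up_headers` helper is made up for this example; it simply packages the cookie string and the matching User-Agent returned by Cloudscraper into request headers.

```python
def follow_up_headers(cookie_string, user_agent):
    """Headers that replay a Cloudflare clearance in a non-Cloudscraper client."""
    return {"Cookie": cookie_string, "User-Agent": user_agent}


def main():
    # Third-party imports live here so the helper stays dependency-free.
    import cloudscraper
    import requests

    url = "https://www.glassdoor.com/"
    cookie_string, user_agent = cloudscraper.get_cookie_string(url)

    # Reuse the clearance in an ordinary requests call from the same machine.
    response = requests.get(url, headers=follow_up_headers(cookie_string, user_agent))
    print(response.status_code)
```

Calling `main()` performs both requests. Whether the follow-up succeeds still depends on Cloudflare accepting the cookie, so treat this as a starting point rather than a guarantee.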

Limitations of Cloudscraper: When Scraping Cloudflare Protected Websites Isn’t Possible

We mentioned earlier that Cloudscraper has a few limitations, and not being able to scrape websites protected by Cloudflare bot detection v2 is a major one. This is the newer version of bot detection provided by Cloudflare and is currently being used by quite a few websites. One such website is Author.Today.

Here is what the homepage of Author.Today looks like:

If you try to scrape data from this website using Cloudscraper, you will see the following exception:

cloudscraper.exceptions.CloudflareChallengeError: Detected a Cloudflare version 2 challenge, This feature is not available in the opensource (free) version.

Even though this exception alludes to the existence of a paid version of Cloudscraper, there isn’t one. Unfortunately, Cloudscraper completely falls apart in the face of the latest bot detection algorithm used by Cloudflare.
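If your scraper visits a mix of v1 and v2 websites, you may want to catch this exception instead of letting it crash the job. The sketch below shows one possible shape: a generic `get_with_fallback` helper (a made-up name) that tries a primary fetcher and switches to a fallback when a chosen exception is raised. The `other_fetcher` function is a hypothetical placeholder for whichever alternative you decide to use.

```python
def get_with_fallback(url, primary, fallback, fallback_on=(Exception,)):
    """Call primary(url); if it raises one of `fallback_on`, call fallback(url)."""
    try:
        return primary(url)
    except fallback_on:
        # The primary fetcher gave up, so hand the URL to the fallback.
        return fallback(url)


def main():
    import cloudscraper
    from cloudscraper.exceptions import CloudflareChallengeError

    scraper = cloudscraper.create_scraper()

    def other_fetcher(url):
        # Hypothetical placeholder: plug in whichever alternative you use.
        raise NotImplementedError("no fallback configured")

    return get_with_fallback(
        "https://author.today/",
        scraper.get,
        other_fetcher,
        fallback_on=(CloudflareChallengeError,),
    )
```

Limiting `fallback_on` to `CloudflareChallengeError` keeps unrelated failures, such as network errors, visible instead of silently rerouting them.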

Alternatives to Cloudscraper

If you want to scrape websites that Cloudscraper cannot currently scrape or if you want to achieve scale and better performance, you should look into Cloudscraper alternatives. One of the best alternatives available today is ScraperAPI.

It can handle the latest version of the bot detection algorithm used by Cloudflare and is regularly updated with new anti-bot evasion techniques to keep your scraping jobs running smoothly.

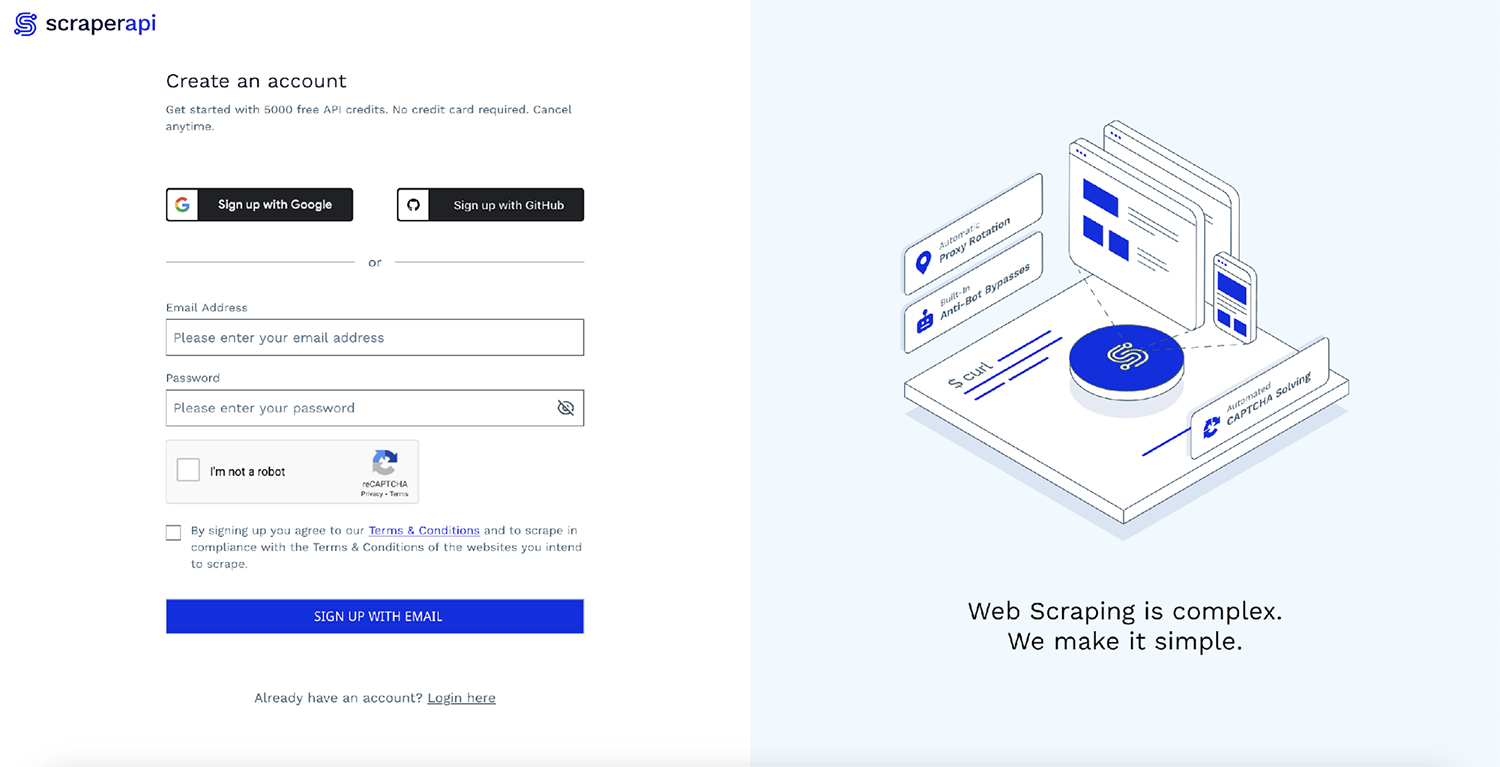

The best part is that ScraperAPI provides 5,000 free API credits for 7 days on a trial basis and then provides a generous free plan with recurring 1,000 API credits to keep you going.

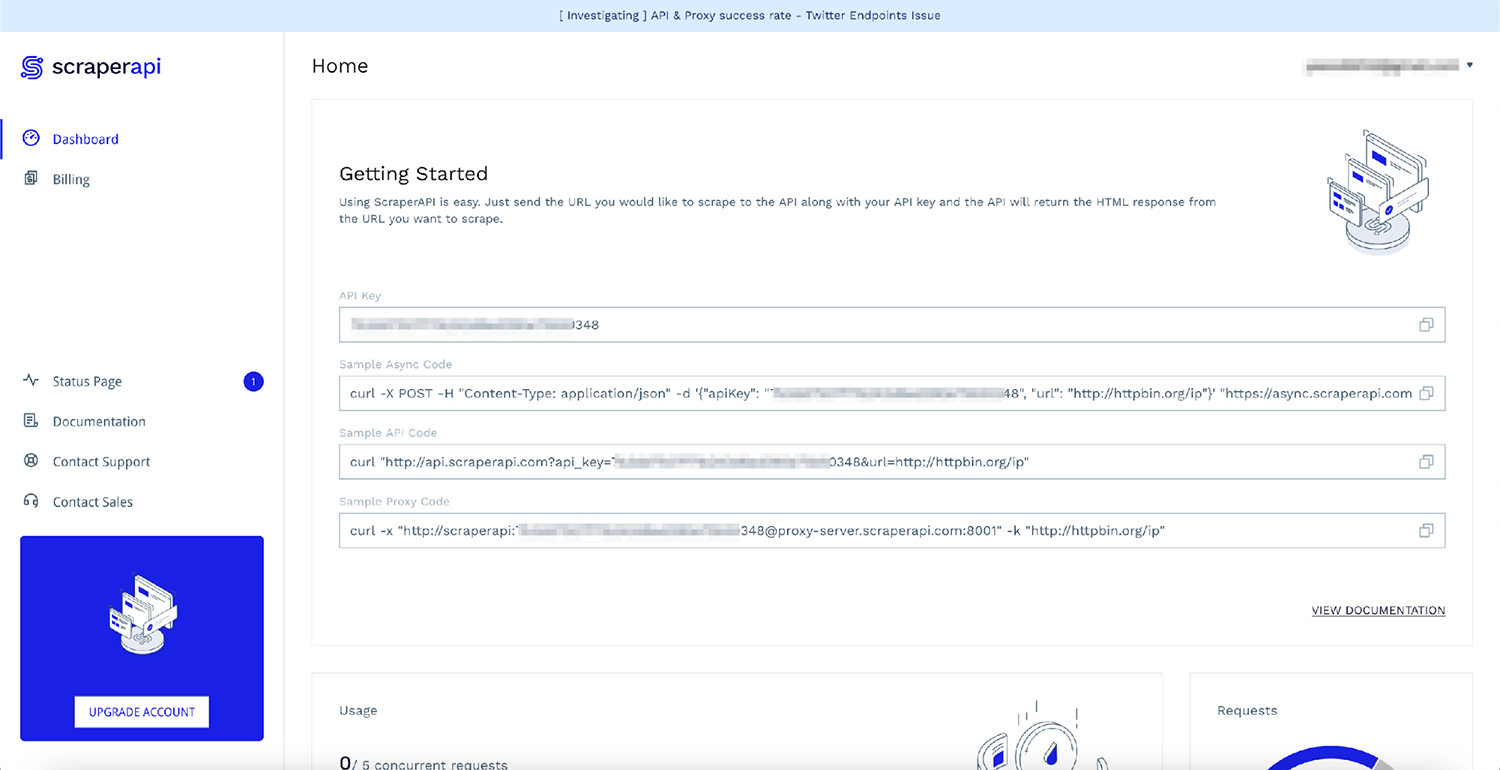

You can quickly get started by going to the ScraperAPI dashboard page and signing up for a new account:

After signing up, you will see your API Key and some sample code:

The quickest way to start using ScraperAPI without modifying your existing code is to use ScraperAPI as a proxy with requests. Here is how you can do so:

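import requests

proxies = {

    "http": "http://scraperapi:APIKEY@proxy-server.scraperapi.com:8001",

    # requests picks the proxy by URL scheme, so https:// pages need this key too

    "https": "http://scraperapi:APIKEY@proxy-server.scraperapi.com:8001"

}

r = requests.get('https://author.today/', proxies=proxies, verify=False)

print(r.text)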

Note: Don’t forget to replace APIKEY with your personal API key from the dashboard.

This is it! With just a few lines of code, you don’t have to think about Cloudflare’s bot detection system again. ScraperAPI will make sure it uses unblocked proxies and advanced techniques to evade the bot-detection system in place. Now, you can spend more of your time focusing on your core business logic.
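To keep the API key out of your source code, you could build the proxy settings from an environment variable. This is only a sketch: the `scraperapi_proxies` helper and the `SCRAPERAPI_KEY` variable name are made up for this example, and the proxy address is the one shown above.

```python
import os


def scraperapi_proxies(api_key=None):
    """Build a requests-style proxies dict for the ScraperAPI proxy shown above."""
    # SCRAPERAPI_KEY is a hypothetical variable name chosen for this sketch.
    api_key = api_key or os.environ.get("SCRAPERAPI_KEY")
    if not api_key:
        raise RuntimeError("Pass api_key or set the SCRAPERAPI_KEY environment variable")
    endpoint = f"http://scraperapi:{api_key}@proxy-server.scraperapi.com:8001"
    # Register the endpoint for both schemes so http:// and https:// targets use it.
    return {"http": endpoint, "https": endpoint}
```

You would then call `requests.get(url, proxies=scraperapi_proxies(), verify=False)` exactly as in the example above.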

Cloudscraper vs ScraperAPI

Here is a short comparison table that will make it easy for you to pick the best solution for your web scraping project.

| Feature | Cloudscraper | ScraperAPI |
| --- | --- | --- |
| Evade Cloudflare bot detection v1 | Yes | Yes |
| Evade Cloudflare bot detection v2 | No | Yes |
| Regularly kept up-to-date with Cloudflare algorithm changes | No | Yes |
| Scalable | No* | Yes |
| Uses a broad network of proxies | No | Yes |
| Completely free | Yes | No** |

* Cloudscraper is not scalable by default but can be scaled by setting up and maintaining your custom infrastructure

** Even though ScraperAPI is not completely free at scale, it provides an extremely generous free tier that is enough for most simple scraping projects

How to Scrape Cloudflare Protected Websites and Never Get Blocked (No Matter Your Scraping Scale)

Cloudscraper is a great option for starters: it lets you run small scraping jobs and start testing right away. However, it is not the best choice if you have large-scale jobs (or if you plan to scale your scraping projects in the future and will need high throughput and low latency).

If that’s the case, use ScraperAPI so you can stop worrying about maintaining code and infrastructure or keeping up with Cloudflare’s updates. Unfortunately, you can expect Cloudflare’s blocking mechanisms to become even more advanced in the future; the bar is set high there.

ScraperAPI is a better option as it outperforms Cloudscraper in several categories and constantly updates its mechanisms to get through blockers whenever new updates are rolled out. So what should you do then?

The choice seems simple — get started with ScraperAPI and break through any Cloudflare protected domain at scale. Sign up now and get 5,000 free credits to try out!