Seasonality, demand changes, and promotions cause hotel prices to fluctuate frequently, making it nearly impossible to stay updated by collecting this information manually. Instead, you can automate (and scale) this process by scraping travel websites and platforms.


In today’s article, we’ll show you how to scrape hotel prices from one of the biggest aggregators: Google!

Why should you scrape Google hotel prices?

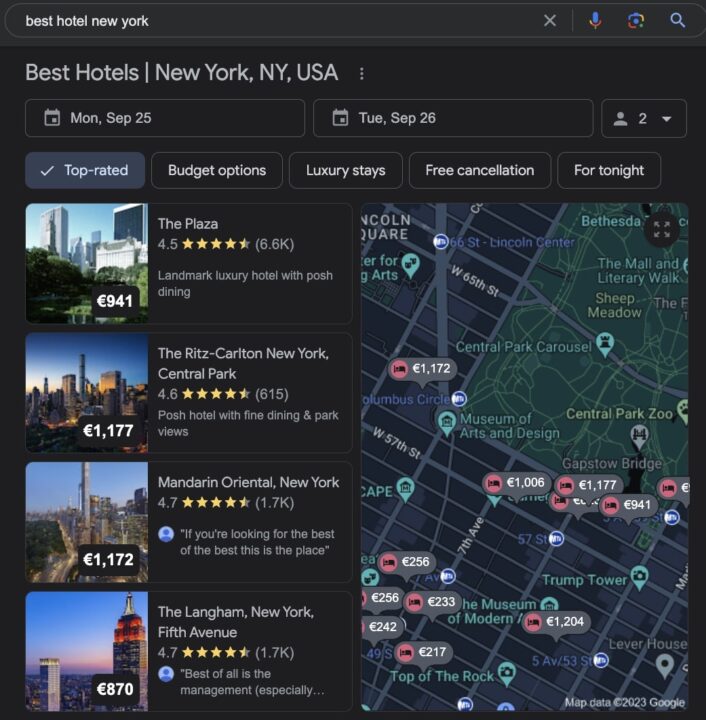

If you search for hotel-related keywords, Google generates its own hotel-focused section with names, images, addresses, ratings, and prices for thousands of hotels.

This is possible because Google aggregates information from a very large number of travel and hotel websites into a single place.

Travelers, businesses, and analysts can use all of this data for many use cases:

- Price Comparison – compare prices across booking platforms and travel websites to find the best deals.

- Data Analysis – analysts can use hotel pricing data to uncover pricing trends, seasonal fluctuations, and competitive pricing opportunities.

- Dynamic Pricing Strategies – businesses can optimize revenue and occupancy rates by adjusting prices based on demand, availability, and competitor prices.

- Customized Alerts – monitor price drops to alert customers or for personal use.

- Travel Aggregation Services – provide users with a consolidated view of hotel prices and options from various sources.

- Budget and Planning – travelers can anticipate accommodation costs and adjust their plans accordingly.

In the end, there are many things you can do with data, but before you can get insights from it, you need to collect enough of it.

Let’s jump to the fun part and start collecting Google hotel prices!

Scraping Google Hotel Prices with Node.js

In this tutorial, we’ll write a script that finds the best hotel prices in New York by collecting hotel pricing data and then sorting the hotel list from the cheapest to the most expensive.

1. Prerequisites

To follow this tutorial, you need:

- Node.js 18+ and NPM installed on your computer

- Basic knowledge of JavaScript and the Node.js API

Note: Although anyone can follow this tutorial, for those completely unfamiliar with web scraping, we advise starting by reading our JavaScript web scraping for beginners tutorial.

2. Set Up Your Project

Create a folder for the project.

```bash
mkdir google-hotel-scraper
```

Now, initialize a Node.js project by running the commands below from the terminal:

```bash
cd google-hotel-scraper
npm init -y
```

The last command creates a package.json file in the folder. Next, create an index.js file and add a simple JavaScript statement to it:

```bash
touch index.js
echo "console.log('Hello world!');" > index.js
```

Execute the file index.js using the Node.js runtime.

```bash
node index.js
```

This command will print Hello world! in the terminal. If it works, then your project is up and running.

3. Install the Necessary Dependencies

To build our scraper, we need these two Node.js packages:

- Puppeteer – to load Google Hotel pages and download the HTML content.

- Cheerio – to extract the hotel information from the HTML downloaded by Puppeteer.

Run the command below to install these packages:

```bash
npm install puppeteer cheerio
```

4. Identify the Information to Retrieve on the Google Hotel Page

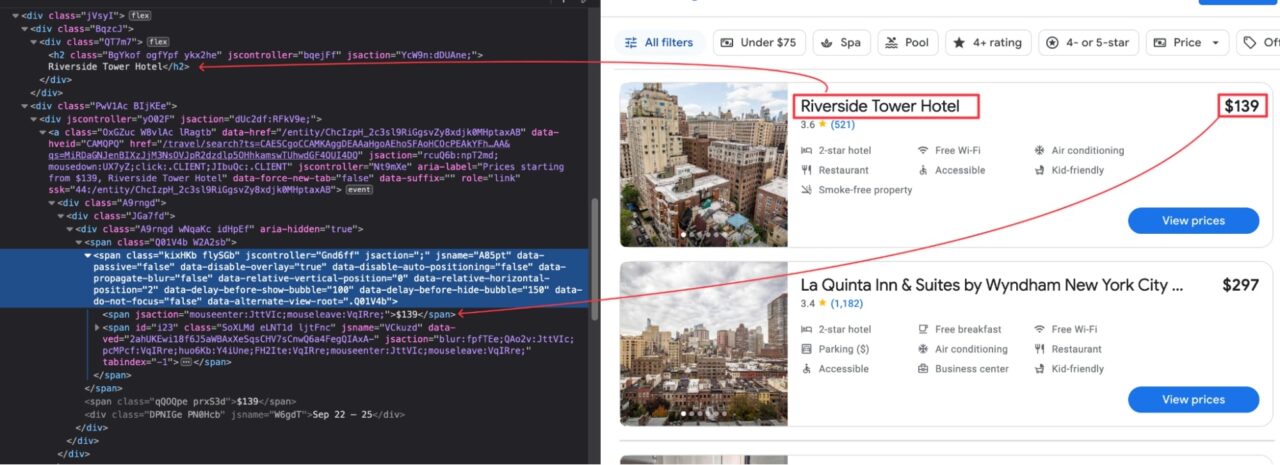

To extract a piece of information from a page, we first have to identify which DOM selector we can use to target its HTML tag.

Here’s what we mean: the picture above shows the location of the hotel’s name and price in the DOM.

As you can see, each piece of information is wrapped between HTML tags, and each tag has its own DOM selector.

Here’s a table that enumerates the DOM selectors for each relevant piece of data:

| Information | DOM Selector | Description |
|---|---|---|
| Hotel’s container | .uaTTDe | A hotel item in the results list |
| Hotel’s name | .QT7m7 > h2 | Name of the hotel |
| Hotel’s price | .kixHKb > span | The room price for one night |
| Hotel’s standing | .HlxIlc .UqrZme | Number of stars |
| Hotel’s rating | .oz2bpb > span | Customer reviews of the hotel |
| Hotel options | .HlxIlc .XX3dkb | Additional services offered |
| Hotel pictures | .EyfHd .x7VXS | Pictures from the hotel |

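The DOM selectors of the Google Hotels page are not human-friendly, so copy them carefully to avoid spending time debugging.

Note: Even a small mistake in a DOM selector will break your script. Keep in mind that Google can also change these class names at any time, so if the script suddenly stops returning data, re-inspect the page first.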
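A wrong or outdated selector does not throw an error with Cheerio. It simply matches nothing, and the result is hotels with empty fields. One way to catch this early is to keep the selectors in a single object and check them before extracting data. The sketch below does that. SELECTORS and findMissingSelectors are hypothetical names, and countMatches is assumed to be something like (selector) => $(selector).length once the HTML is loaded with Cheerio.

```javascript
// Selectors from the table above, kept in one place so that a markup change
// on Google's side only needs to be fixed here. They were copied at the time
// of writing, and Google may rename these classes at any time.
const SELECTORS = {
  container: ".uaTTDe",
  name: ".QT7m7 > h2",
  price: ".kixHKb > span",
  standing: ".HlxIlc .UqrZme",
  rating: ".oz2bpb > span",
  options: ".HlxIlc .XX3dkb",
  pictures: ".EyfHd .x7VXS",
};

// Returns the names of the selectors that match no element on the page.
// countMatches(selector) must return the number of matching elements;
// with Cheerio, this can be (selector) => $(selector).length.
const findMissingSelectors = (countMatches, selectors = SELECTORS) =>
  Object.entries(selectors)
    .filter(([, selector]) => countMatches(selector) === 0)
    .map(([name]) => name);
```

After cheerio.load(html), you can log any non-empty result of findMissingSelectors((selector) => $(selector).length). A non-empty result tells you that a selector needs updating, which is easier to act on than an array of empty hotels.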

5. Scrape the Google Hotel Page

Now that we have identified the DOM selectors, let’s use Puppeteer to download the page’s HTML. Here’s the page we’re targeting: https://www.google.com/travel/search.

In some countries (mostly in Europe), Google displays a consent page before redirecting to this URL. We’ll add code that selects the “Reject all” button, clicks it, and waits three seconds for the Google Hotels page to load completely.

Replace the content of index.js with the following code:

```javascript
const puppeteer = require('puppeteer');

const PAGE_URL = 'https://www.google.com/travel/search';

// Resolves after the given number of milliseconds
const waitFor = (timeInMs) => new Promise(r => setTimeout(r, timeInMs));

const main = async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();

  await page.goto(PAGE_URL);

  // The consent page only appears in some countries; page.$() returns null
  // when the button is absent, so the optional chaining skips the click
  const buttonConsentReject = await page.$('.VfPpkd-LgbsSe[aria-label="Reject all"]');
  await buttonConsentReject?.click();

  // Give the Google Hotels page time to load completely
  await waitFor(3000);

  const html = await page.content();

  await browser.close();

  console.log(html);
};

void main();
```

Run the code with the command node index.js; the terminal prints the HTML content of the page.

6. Extract Information from HTML

We have the page’s content, but extracting any valuable data point from raw HTML is impractical. This is where Cheerio comes into play.

Cheerio provides functions to load the HTML text and turn it into a parsed tree, making it possible to navigate through the structure to extract information using DOM selectors.

The code below loads the HTML and extracts the room’s price for each hotel:

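```javascript
const cheerio = require("cheerio");

// html is the page content returned by page.content() in the previous step
const $ = cheerio.load(html);

// For each hotel container, print the text of the first price span
$('.uaTTDe').each((i, el) => {
  const priceElement = $(el).find('.kixHKb span').first();
  console.log(priceElement.text());
});
```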

In plain terms: for each element with the class .uaTTDe, find the span elements nested inside a descendant with the class .kixHKb, and keep the first one.

Let’s update index.js to extract all the hotel information with Cheerio, store it in an array, and sort the hotels from the lowest to the highest price.

For each hotel, the extracted information is passed to a sanitize() function we wrote to format the data before it is added to the array. Browse the code repository to see its implementation.

```javascript
const cheerio = require("cheerio");
const puppeteer = require("puppeteer");
// sanitize() formats the raw strings; see the code repository for its implementation
const { sanitize } = require("./utils");

const waitFor = (timeInMs) => new Promise(r => setTimeout(r, timeInMs));

const GOOGLE_HOTEL_PRICE = 'https://www.google.com/travel/search';

const main = async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();

  await page.goto(GOOGLE_HOTEL_PRICE);

  // Reject the consent form when it is displayed
  const buttonConsentReject = await page.$('.VfPpkd-LgbsSe[aria-label="Reject all"]');
  await buttonConsentReject?.click();

  await waitFor(3000);

  const html = await page.content();

  await browser.close();

  const hotelsList = [];
  const $ = cheerio.load(html);

  // Each .uaTTDe element is one hotel in the results list
  $('.uaTTDe').each((i, el) => {
    const titleElement = $(el).find('.QT7m7 > h2');
    const priceElement = $(el).find('.kixHKb span').first();
    const reviewsElement = $(el).find('.oz2bpb > span');
    const hotelStandingElement = $(el).find('.HlxIlc .UqrZme');

    const options = [];
    const pictureURLs = [];

    // Keep the text of the last span inside each option element
    $(el).find('.HlxIlc .XX3dkb').each((i, element) => {
      options.push($(element).find('span').last().text());
    });

    $(el).find('.EyfHd .x7VXS').each((i, element) => {
      pictureURLs.push($(element).attr('src'));
    });

    const hotelInfo = sanitize({
      title: titleElement.text(),
      price: priceElement.text(),
      standing: hotelStandingElement.text(),
      // First span: average rating; second span: number of reviews
      averageReview: reviewsElement.eq(0).text(),
      reviewsCount: reviewsElement.eq(1).text(),
      options,
      pictures: pictureURLs,
    });

    hotelsList.push(hotelInfo);
  });

  // Sort from the cheapest to the most expensive; hotels without a price go last.
  // Both hotels are checked so the comparator answers consistently for any pair.
  const sortedHotelsList = hotelsList.slice().sort((hotelOne, hotelTwo) => {
    if (!hotelOne.price && !hotelTwo.price) {
      return 0;
    }
    if (!hotelOne.price) {
      return 1;
    }
    if (!hotelTwo.price) {
      return -1;
    }
    return hotelOne.price - hotelTwo.price;
  });

  console.log(sortedHotelsList);
};

void main();
```
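The script imports sanitize() from a utils.js file that lives in the code repository and is not shown here. If you are following along without the repository, the sketch below is a hypothetical stand-in, not the author's implementation. It assumes the scraped values arrive as strings such as "$1,189", "4-star hotel", "4.3", and "(1,234)" in US-style number formatting, and it converts them to numbers so that the price sort works.

```javascript
// utils.js: hypothetical sanitize() sketch, not the repository's implementation.
// ASSUMPTION: numbers use US-style formatting ("1,189.50"), so commas are
// thousands separators and "." is the decimal point.

// Extracts the first number in a string, e.g. "$1,189" -> 1189, "4-star hotel" -> 4.
// Returns null when the string contains no digits (e.g. a hotel without a price).
const toNumber = (text) => {
  const match = String(text ?? "").replace(/,/g, "").match(/\d+(?:\.\d+)?/);
  return match ? Number(match[0]) : null;
};

const sanitize = ({ title, price, standing, averageReview, reviewsCount, options, pictures }) => ({
  title: String(title ?? "").trim(),
  price: toNumber(price),
  standing: toNumber(standing),
  averageReview: toNumber(averageReview),
  reviewsCount: toNumber(reviewsCount),
  // Drop blank option labels and images without a src attribute
  options: (options ?? []).map((option) => option.trim()).filter(Boolean),
  pictures: (pictures ?? []).filter(Boolean),
});

module.exports = { sanitize };
```

If Google shows prices in another locale (for example "1.189 €"), the comma and dot handling in toNumber would need to change accordingly.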

Run the code with node index.js and check the sorted hotel list printed in the terminal.

There you have it: you just collected the information for every hotel on the first page of Google Hotels in a couple of seconds.
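Some hotels may end up without a usable price, for instance when the price element is missing from a result. The sort comparator therefore needs an explicit rule for them, and it must answer consistently for any pair of hotels, which means checking both arguments. The standalone sketch below sends every price-less hotel to the end. It assumes that price is either a number or null/undefined after sanitizing. compareByPrice and hasPrice are illustrative names.

```javascript
// A hotel has a usable price when its price is a finite number
const hasPrice = (hotel) => Number.isFinite(hotel.price);

// Sorts hotels from the cheapest to the most expensive,
// placing hotels without a price after all priced hotels
const compareByPrice = (hotelOne, hotelTwo) => {
  if (!hasPrice(hotelOne) && !hasPrice(hotelTwo)) return 0;
  if (!hasPrice(hotelOne)) return 1; // hotelOne goes after hotelTwo
  if (!hasPrice(hotelTwo)) return -1; // hotelOne goes before hotelTwo
  return hotelOne.price - hotelTwo.price;
};

const hotels = [
  { title: "A", price: 300 },
  { title: "B", price: null },
  { title: "C", price: 120 },
  { title: "D" },
  { title: "E", price: 200 },
];

console.log(hotels.slice().sort(compareByPrice).map((hotel) => hotel.title));
// [ 'C', 'E', 'A', 'B', 'D' ]
```

Because Array.prototype.sort is stable in modern Node.js, hotels without a price keep their original relative order at the end of the list.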

Scale Your Google Hotel Scraper with ScraperAPI

So far, we’ve written a scraper that works for a one-off run on the first page of Google Hotels results, but collecting all the results is more complicated.

As shown in the picture above, there are 14,994 hotels with only 12 displayed per page; to get them all, we’d need to send 1,250 requests.
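The request count comes from dividing the number of results by the page size and rounding up. 14,994 / 12 = 1,249.5, and the partially filled last page still needs its own request. Here it is as a one-line helper (pagesNeeded is just an illustrative name):

```javascript
// Number of result pages (one request each) needed to cover every hotel.
// Math.ceil rounds up because the last page may be only partially filled.
const pagesNeeded = (totalResults, resultsPerPage) =>
  Math.ceil(totalResults / resultsPerPage);

console.log(pagesNeeded(14994, 12)); // 1250
```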

If you try to send 1,250 requests with the current code, Google’s bot detection system is likely to:

- Rate-limit your requests, reducing how many you can send.

- Ban your IP address from sending requests to the website indefinitely.

To tackle this challenge, we’ll use ScraperAPI, an advanced solution that uses machine learning and years of statistical analysis to choose the best combination of IP address and headers, handle CAPTCHAs, and bypass any anti-bot systems in your way.


ScraperAPI offers many solutions, but for this tutorial, let’s focus on its Async Scraper.

This tool lets you submit scraping jobs in bulk without lowering the project’s success rate, and it delivers the results to a webhook.

Once the job is submitted, ScraperAPI scrapes each URL asynchronously and sends the page content to your webhook URL as soon as it is available.
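With hundreds of page URLs (1,250 in the New York example), you may prefer to submit several smaller batch jobs rather than one very large request. For instance, that way a failed submission can be retried without resending everything. The sketch below splits a URL list into request bodies that have the same shape (apiKey, urls, callback) as the batch-jobs request built in async-scraper.js in this tutorial. buildBatchRequests and the batch size of 100 are illustrative choices, not a documented ScraperAPI limit.

```javascript
// ASSUMPTION: 100 URLs per batch is an arbitrary choice, not a ScraperAPI limit
const BATCH_SIZE = 100;

// Splits a list of page URLs into several batch-job request bodies,
// each one shaped like the body posted to https://async.scraperapi.com/batchjobs
const buildBatchRequests = (urls, apiKey, callbackUrl, batchSize = BATCH_SIZE) => {
  if (!Number.isInteger(batchSize) || batchSize <= 0) {
    throw new RangeError("batchSize must be a positive integer");
  }

  const requests = [];
  for (let start = 0; start < urls.length; start += batchSize) {
    requests.push({
      apiKey,
      urls: urls.slice(start, start + batchSize),
      callback: { type: "webhook", url: callbackUrl },
    });
  }
  return requests;
};
```

Each body can then be sent with axios.post('https://async.scraperapi.com/batchjobs', body), for example one after the other in a for...of loop with await.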

To get started, you will need an API key to use the API, so create a free ScraperAPI account to generate one.

Did you get your key? Then move to the next step.

Creating a Webhook Server

Let’s install the two dependencies we will need:

```bash
npm install axios express
```

Create a file webhook-server.js and paste the following code:

```javascript
const express = require('express');

const PORT = 5001;

const app = express();

// Parse URL-encoded and JSON bodies; the 10 MB limit (the default is 100 KB)
// leaves room for the scraped HTML pages sent to the webhook
app.use(express.urlencoded({ extended: true }));
app.use(express.json({ limit: "10mb" }));

// ScraperAPI calls this route when a scraping job is done
app.post('/scraper-webhook', (req, res) => {
  console.log('New request received!', req.body);

  return res.json({ message: 'Hello World!' });
});

app.listen(PORT, () => {
  console.log(`Application started on URL http://localhost:${PORT} 🎉`);
});
```

This Express application exposes a POST route, /scraper-webhook, that receives the page content scraped asynchronously.

Launch the Webhook server with the command node webhook-server.js; the application will start on port 5001.

Using ScraperAPI’s Async Scraper

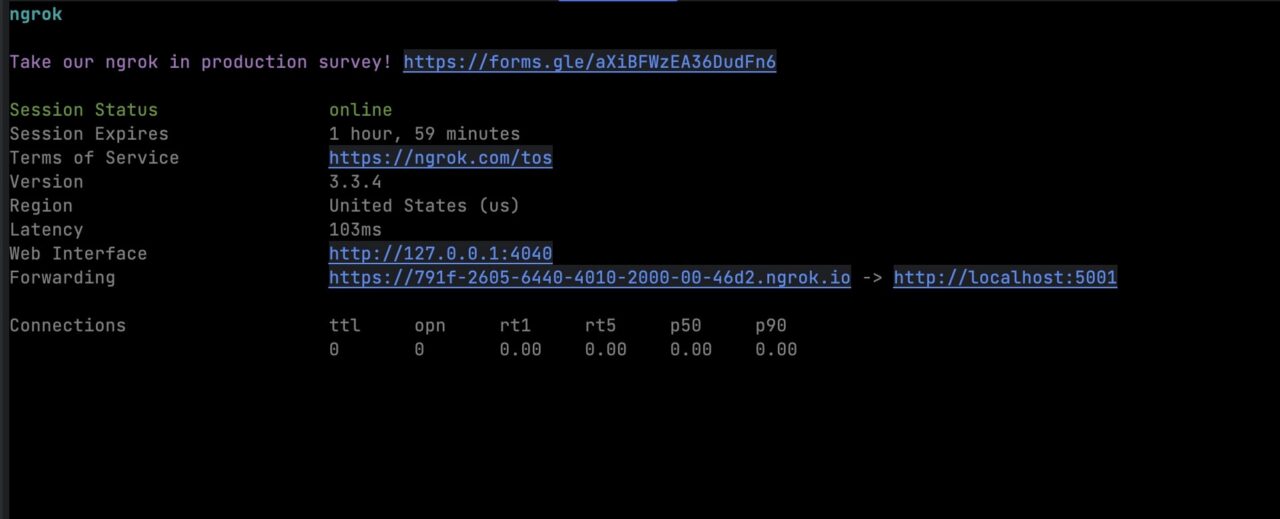

For ScraperAPI’s async service to trigger our webhook, we must make it accessible through the internet using a tunneling service like Ngrok.

- Run the commands below to install Ngrok and verify that it is installed correctly:

```bash
npm install -g ngrok
ngrok --version
```

- Create a tunnel from Ngrok to our local application:

```bash
ngrok http 5001
```

Ngrok then displays a Forwarding line with a public HTTPS URL that tunnels traffic to http://localhost:5001.

- Copy the Ngrok URL to the clipboard; we will use it later.

- Create a file async-scraper.js and add the code below:

```javascript
const axios = require('axios');

// URLs of the Google Hotels result pages to scrape
const pageURLs = [
  'https://www.google.com/travel/search?ved=0CAAQ5JsGahcKEwiI9IHigKuBAxUAAAAAHQAAAAAQBg',
  'https://www.google.com/travel/search?ved=0CAAQ5JsGahcKEwiI9IHigKuBAxUAAAAAHQAAAAAQBg&ts=CAEaHgoAEhoSFAoHCOcPEAoYAhIHCOcPEAoYAxgBMgIQACoHCgU6A1VTRA&qs=EgRDQkk9OA0&ap=MAE',
  'https://www.google.com/travel/search?ved=0CAAQ5JsGahcKEwiI9IHigKuBAxUAAAAAHQAAAAAQBg&ts=CAESCgoCCAMKAggDEAAaHBIaEhQKBwjnDxAKGAISBwjnDxAKGAMYATICEAAqBwoFOgNVU0Q&qs=EgRDQ1E9OA1IAA&ap=MAE'
];

const apiKey = '<your-scraperapi-api-key>';
const apiUrl = 'https://async.scraperapi.com/batchjobs';
// Public Ngrok URL followed by the webhook route: <ngrok-url>/scraper-webhook
const callbackUrl = '<your-ngrok-url-to-the-webhook-server>';

// One batch job for all the URLs; each result is sent to the webhook
const requestData = {
  apiKey: apiKey,
  urls: pageURLs,
  callback: {
    type: 'webhook',
    url: callbackUrl,
  },
};

axios.post(apiUrl, requestData)
  .then(response => {
    // Response describing the submitted jobs
    console.log(response.data);
  })
  .catch(error => {
    console.error(error);
  });
```

- Replace the

apikeyand thecallbackUrlwith the correct values - The variable

pageURLscontains the URLs of the first three pages of Google Hotel search results, but you can add as many as you need. - Launch your async scraper with the command

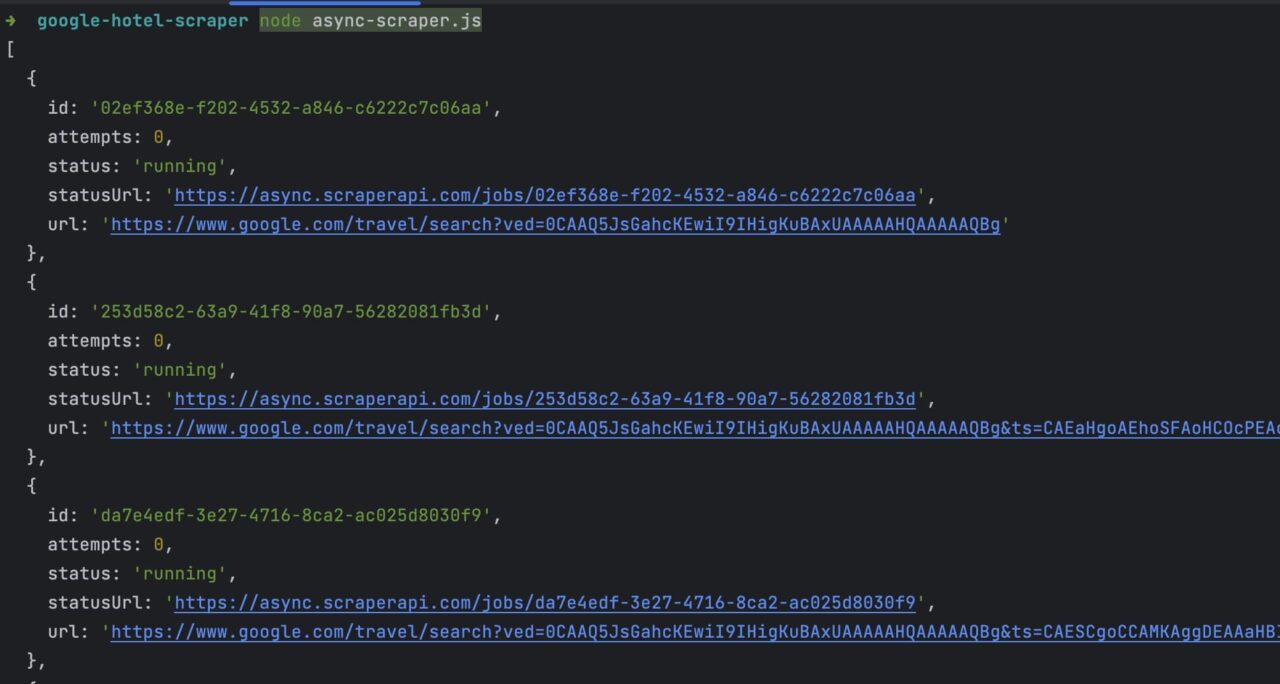

node async-scraper.js

You will get an output similar to this:

Once the scraping is done, ScraperAPI sends the response with the raw HTML to your webhook server, and you can reuse our previous code to parse, extract, and sort the hotels.
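Before the parsing code can run, the webhook has to pull the HTML out of the request body. The helper below is a sketch built on an assumption: that ScraperAPI posts a job object with a status field, the original url, and a response object holding statusCode and the HTML body. This is our understanding of the Async Scraper's job format, so log req.body once to confirm the actual shape before relying on it. extractPageFromPayload is a hypothetical name.

```javascript
// Extracts the scraped page from an Async Scraper webhook payload.
// ASSUMPTION: the payload looks like
//   { status: "finished", url: "...", response: { statusCode: 200, body: "<html>..." } }
// Returns null for failed jobs or payloads that do not match this shape.
const extractPageFromPayload = (payload) => {
  if (!payload || payload.status !== "finished") {
    return null;
  }

  const html = payload.response?.body;
  if (typeof html !== "string" || html.length === 0) {
    return null;
  }

  return { url: payload.url, statusCode: payload.response.statusCode, html };
};
```

In webhook-server.js, the /scraper-webhook route could call extractPageFromPayload(req.body). When the result is not null, pass result.html to the same cheerio.load() call and extraction loop used in index.js.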

Wrapping up

Congratulations, you just built your first Google Hotels scraper, using Puppeteer to retrieve the pages’ HTML and Cheerio to extract the data from it with DOM selectors.

Remember: to scale your data collection to thousands of URLs, use ScraperAPI’s Async Scraper to avoid getting rate-limited or, worse, permanently banned from Google’s servers.

Check out Async Scraper’s documentation to learn the ins and outs of the tool, or check DataPipeline to automate the entire process without writing a single line of code – including a visual scheduler with cron support.

Find the source code for this post in this GitHub repository.

Until next time, happy scraping!