In an industry where even a slight shift in the market can make or lose millions of dollars, a data-driven approach is crucial for predicting changes and making smart investment decisions.

For this reason, investors and hedge funds – and the financial industry at large – lead in both the adoption of, and spending on, data acquisition.

Before committing to a multi-million dollar deal, investors need to collect and use all the data at their disposal to effectively predict how the company they’re investing in will perform in the future.

However, traditional historical data has its limitations: it can blind your firm to real-time shifts in consumer behavior, product demand, and similar signals that provide deeper insights into the state of the market. For example, back in 2015, Eagle Alpha released a report predicting GoPro (NASDAQ: GPRO) wasn’t going to hit its Q3 target.

At a time when most stock recommendations pushed investors to buy, Eagle Alpha recommended the opposite. This prediction paid off when GoPro reported Q3 earnings of $400 million after projecting $430 million – a shortfall of $30 million that quarter.

The reason behind Eagle Alpha’s prediction wasn’t better analysts or some higher intuition; it was better data and more of it. Instead of just relying on traditional historical data, they collected alternative data from US electronics websites and more than 80 million sources.

If hedge funds and investors want to remain competitive, increase the return on their investments, and mitigate risk as much as possible, scraping alternative data is a must.

What is Alternative Financial Data?

Any data points collected from non-traditional sources are considered alternative data. For financial firms, these are sources that go beyond company filings and broker insights, such as forum discussions, product reviews, and social media commentary.

Reasons to Automate Alt-Data Collection with Web Scraping

As the GoPro example shows, alt-data allows you to make faster and more accurate predictions by providing the extra insights conventional sources can’t. However, collecting this data manually is impractical at scale. Can you imagine the number of hours needed to collect, sort, clean, and finally analyze data from 80 million sources?

For financial organizations and firms to stay competitive, they must use automated systems, more specifically, a web scraping solution, to perform these tasks, freeing their analysts to uncover the insights needed to make investment decisions rather than grinding for data.

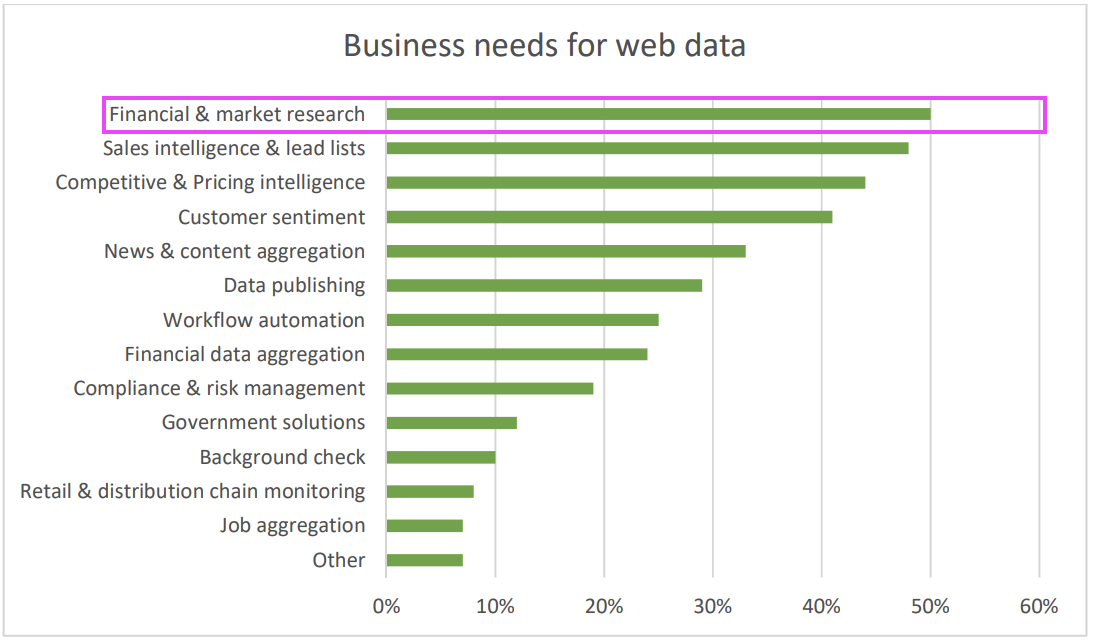

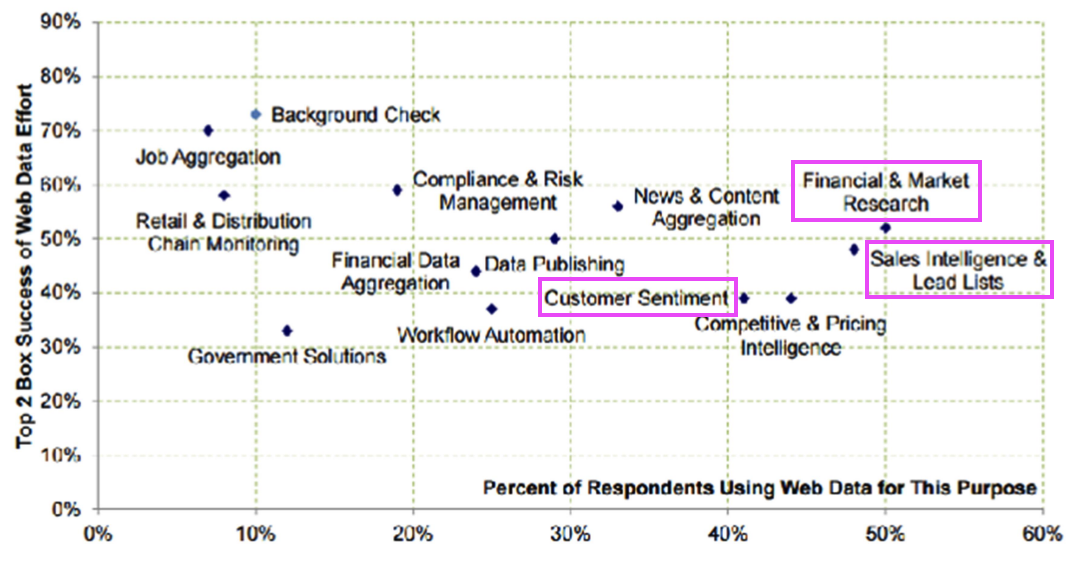

PromptCloud’s survey on web scraping revealed that finance, alongside competitive intelligence and customer sentiment analysis, is one of the top web scraping use cases. So, on that note, let’s explore four applications of alternative data scraping in the finance industry:

1. Product Data

Waiting for data reports can limit your insights. Why? Well, once the reports are released, you’ve probably lost investment opportunities.

One way to get ahead of the curve is by scraping product data like reviews and retail prices – to name a few – to uncover new trends in the market around a company’s products. For example, investors can scrape Amazon data to identify price and demand shifts for the products of the companies they have invested in. If many resellers start to reduce prices week by week, it could mean there’s less demand for the company’s products and, therefore, less revenue coming in.

Reviews are also a great source of information for understanding customers’ perception of the company’s products. Highly positive reviews can signal a good time to invest, while increasingly negative reviews can reveal a disconnect between the company and its customer base – a possible indication of a downtrend.
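The week-by-week price check described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production signal: the function names, the three-week threshold, and the sample prices are all assumptions made for the example, and the scraping step itself is left out.

```python
from statistics import mean


def weekly_price_trend(weekly_prices):
    """Return week-over-week % changes of the average reseller price."""
    averages = [mean(prices) for prices in weekly_prices]
    return [
        (curr - prev) / prev * 100
        for prev, curr in zip(averages, averages[1:])
    ]


def is_sustained_decline(changes, min_weeks=3):
    """True if the last `min_weeks` week-over-week changes are all negative."""
    recent = changes[-min_weeks:]
    return len(recent) == min_weeks and all(change < 0 for change in recent)


# Hypothetical sample: each inner list holds one week's scraped prices
# for the same product across several resellers.
sample_weeks = [
    [399.0, 401.0, 400.0],
    [389.0, 391.0, 390.0],
    [379.0, 381.0, 380.0],
    [369.0, 371.0, 370.0],
]

changes = weekly_price_trend(sample_weeks)
declining = is_sustained_decline(changes)
print(changes, declining)
```

A sustained decline is only a prompt for further analysis; promotions or seasonality can produce the same pattern, so the threshold would need tuning per product.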

2. PR and News

Although we like to talk about the financial markets as rational, the truth is that the public’s emotions have a huge impact on a company’s performance. Staying up to date with the latest news around companies of interest is crucial for a successful investment strategy.

Bad public relations could change how the public and customers perceive a company, leading to a poor quarter. On the other hand, positive news like partnerships or corporate social responsibility (CSR) initiatives could help increase a company’s sales.

In this scenario, web scraping can be used to monitor millions of sites and stay on top of information (e.g., changes to financial regulatory requirements) that’s relevant to the companies you’re invested in.
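Once headlines have been scraped, the monitoring step can be as simple as matching them against a watchlist. The sketch below assumes the headlines are already collected as plain strings; the company names, headlines, and the `match_watchlist` helper are hypothetical and only meant to show the idea.

```python
import re


def match_watchlist(headlines, watchlist):
    """Map each watched company to the headlines that mention it."""
    matches = {company: [] for company in watchlist}
    for headline in headlines:
        for company in watchlist:
            # Whole-word, case-insensitive match to avoid partial hits.
            pattern = rf"\b{re.escape(company)}\b"
            if re.search(pattern, headline, re.IGNORECASE):
                matches[company].append(headline)
    return matches


# Hypothetical watchlist and scraped headlines.
watchlist = ["Acme Corp", "Globex"]
headlines = [
    "Acme Corp announces partnership with regional retailer",
    "Regulator proposes new disclosure rules",
    "GLOBEX recalls flagship product",
]

mentions = match_watchlist(headlines, watchlist)
for company, hits in mentions.items():
    print(company, len(hits))
```

Plain name matching misses tickers, subsidiaries, and misspellings, so a real feed would likely need an alias list per company.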

3. SEC Filings

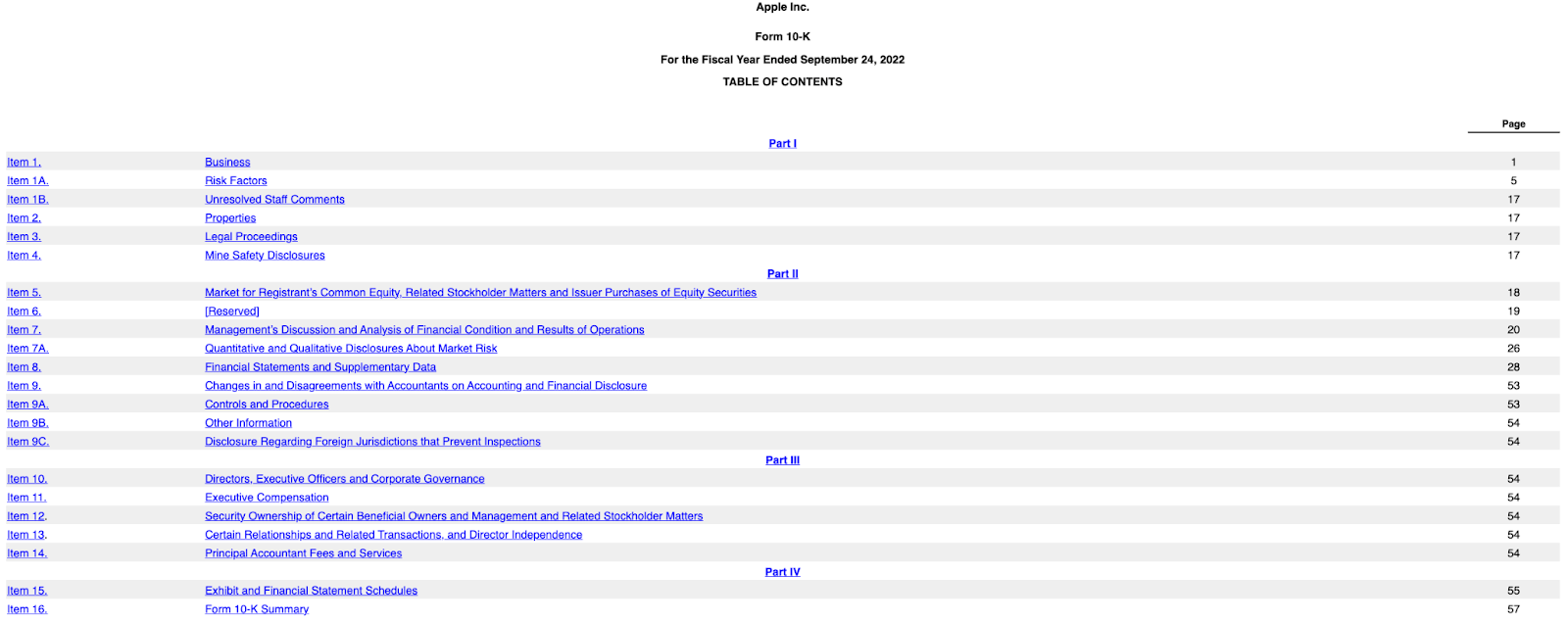

SEC filings are “important regulatory documents required of all public companies to provide key information to investors or potential investors,” which makes them a great source of alternative data for investment firms to leverage. These documents are filled with historical, internal information used to make decisions. One filing that investors might be interested in is the 10-K form, which “is an annual report that provides a comprehensive analysis of the company’s financial condition, (…) complete with tables full of data and figures.”

Source: Apple’s 2022 10-K form

By scraping these files, hedge funds can create an exhaustive database of hundreds to thousands of companies’ historical financial data and uncover great investment opportunities or avoid potential losses.
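To make the idea of a filings database concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. It assumes the figures have already been extracted from the filings; the table layout, the `EXMP` ticker, and the numbers are placeholders invented for illustration, not real filing data.

```python
import sqlite3


def build_filings_db(rows):
    """Create an in-memory table of metrics extracted from filings."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE filing_metrics (
               ticker TEXT NOT NULL,
               fiscal_year INTEGER NOT NULL,
               metric TEXT NOT NULL,
               value REAL NOT NULL,
               PRIMARY KEY (ticker, fiscal_year, metric)
           )"""
    )
    conn.executemany("INSERT INTO filing_metrics VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return conn


def metric_history(conn, ticker, metric):
    """Return (fiscal_year, value) pairs for one company, oldest first."""
    cursor = conn.execute(
        "SELECT fiscal_year, value FROM filing_metrics "
        "WHERE ticker = ? AND metric = ? ORDER BY fiscal_year",
        (ticker, metric),
    )
    return cursor.fetchall()


# Placeholder rows: (ticker, fiscal year, metric name, value).
placeholder_rows = [
    ("EXMP", 2022, "revenue", 135.0),
    ("EXMP", 2021, "revenue", 120.0),
    ("EXMP", 2022, "net_income", 18.0),
]

conn = build_filings_db(placeholder_rows)
history = metric_history(conn, "EXMP", "revenue")
print(history)
```

Keying each row on ticker, fiscal year, and metric keeps the table easy to extend as more companies and filing types are added.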

4. Social Media and Forums

Sentiment analysis provides the necessary insights to comprehend how customers and the public perceive a brand and its products, and although analyzing the news can give you an idea, the best way to understand customer behavior is from the customers themselves.

By scraping social media platforms like Twitter and community forums like Reddit, you can collect the direct opinion of a company’s customer base instead of relying on guesswork, allowing you to predict the success or failure of a stock/company with a higher degree of accuracy.

(Good to know: social media platforms and community forums are some of the freshest sources of information. You can tap into real-time conversations instead of just relying on historical archives.)
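As a rough illustration of how scraped posts become a sentiment signal, the sketch below scores text against two small word lists. The word lists, function names, and sample posts are hypothetical, and a real pipeline would more likely rely on a trained sentiment model than on keyword counting.

```python
import re

# Hypothetical, deliberately tiny word lists for illustration only.
POSITIVE = {"love", "great", "excellent", "reliable", "recommend"}
NEGATIVE = {"broken", "refund", "terrible", "disappointed", "worse"}


def score_post(text):
    """Score one post from -1.0 (all negative) to 1.0 (all positive)."""
    words = re.findall(r"[a-z']+", text.lower())
    positive_hits = sum(word in POSITIVE for word in words)
    negative_hits = sum(word in NEGATIVE for word in words)
    total = positive_hits + negative_hits
    if total == 0:
        return 0.0  # No opinion words found: treat as neutral.
    return (positive_hits - negative_hits) / total


def average_sentiment(posts):
    """Average the per-post scores; an empty batch counts as neutral."""
    scores = [score_post(post) for post in posts]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical scraped posts about one product.
sample_posts = [
    "Love this camera, great battery and I would recommend it",
    "Mine arrived broken and I want a refund",
    "Shipping took a week",
]

print([score_post(post) for post in sample_posts])
print(average_sentiment(sample_posts))
```

Tracking this average over time, rather than reading any single value, is what would reveal a shift in how customers feel about a company.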

Purchasing Datasets vs. Building Your Own

There are already companies dedicated to collecting and grouping this information, which raises the question: why would you create your own scrapers to collect alternative data instead of just buying it from aggregators? After all, buying existing datasets gives you instant data you can analyze and start getting insights from.

To understand the key role web scraping plays in the ability of hedge funds and investment firms to stay competitive, let’s analyze the pros and cons of each:

Off-the-Shelf Datasets Pros and Cons

Pre-built datasets are great because you get value right away and, depending on the vendor you work with, a team of experts dedicated solely to this task guarantees the quality of the data.

However, these datasets are open for sale to everyone, meaning your competition can access the same data pools, which reduces the edge the data gives you.

In addition, you’re reliant on your vendor’s processes and data pipelines. If the vendor updates their database once a month, there isn’t much you can do to gain access to newer data and stay competitive.

Building Your Own Datasets Pros and Cons

Unlike working with vendors, building your own datasets provides you with insights no other firms have access to. With clear planning, your team can create automated data feeds tailored to your needs and analysis style, allowing for deeper, more specific insights.

In the case of scraping alternative data, the problem is time.

Investing in web scraping to build your own database is an investment for the future. You need time to gather enough data to discover significant trends, so it takes longer to see value from this investment.

Still, this is only true in certain scenarios. For example, firms can create data feeds for sentiment analysis with the goal of monitoring the sentiment around some of their investments. This requires scraping data as close to real-time as possible to stay on top of sentiment changes. So, before creating a backlog of historical data, firms can already use these alternative sources to make decisions.

Using Web Scraping to Gain a Competitive Financial Edge

At this point, you might be starting your own examination to determine which is a better investment for your firm based on the advantages and disadvantages we discussed above. However, in reality, web scraping and pre-built datasets are not mutually exclusive.

More data – as long as it’s accurate and clean – makes for better decisions. You can combine already existing datasets with your own scraped alternative data to form a more complete picture and gain a head start over the competition. Regardless, the fact that off-the-shelf datasets are openly available limits the potential for your firm.

When everyone has access to the same data, it comes down to your analysts’ and investment teams’ capabilities to transform the data into useful insights faster and better than the rest – putting much more pressure on their shoulders. Today, web scraping is the key to gathering unique data points from all over the internet and creating custom-made data feeds that allow you to find new trends faster than anyone else. From spotting decreases in product demand to monitoring a brand’s reputation, firms using web scraping have an unfair advantage.

Want to learn more about web scraping?

Here are a few guides to help you get started:

- How Hedge Funds Are Using Web Scrapers – get inspiration from other firms and investors

- How to Choose a Data Collection Tool – find the right tool for your team based on technical expertise, time constraints, and money

- How to Scrape Stock Market Data Using Python in Just 6 Steps – especially important for stock traders and investors

- Build a Google Trends Scraper Using PyTrends – spot search trend shifts to predict customers’ interest and potential product performance

For more information, get in touch. Alternatively, try ScraperAPI yourself.