Want to scrape G2 reviews without getting blocked? Then you’re in the right place!

As a trusted source of information, G2 is where businesses go to research software products and services, evaluate genuine user reviews and ratings, and manage their software stacks.

However, G2 protects its site with robust security measures, including heavy use of CAPTCHA challenges, which makes scraping it difficult.


In this article, we’ll show you how to collect product reviews from G2 without risking a ban from the site, allowing you to export data into a JSON file for easy analysis.

TL;DR: Full G2 Software Product Review Python Scraper

For those in a hurry, here’s the full Python script we’re making in this tutorial:

```python
import requests
from bs4 import BeautifulSoup
import json

API_KEY = "Your_API_Key"
url = "https://www.g2.com/products/scraper-api/reviews"

payload = {"api_key": API_KEY, "url": url}
html = requests.get("https://api.scraperapi.com", params=payload)
soup = BeautifulSoup(html.text, "lxml")

# Initialize the results dictionary
results = {"product_name": "", "number_of_reviews": "", "reviews": []}

# Extracting the product name
product_name_element = soup.select_one(".product-head__title a")
results["product_name"] = (
    product_name_element.get_text(strip=True)
    if product_name_element
    else "Product name not found"
)

# Extracting the number of reviews
reviews_element = soup.find("li", {"class": "list--piped__li"})
results["number_of_reviews"] = (
    reviews_element.get_text(strip=True)
    if reviews_element
    else "Number of reviews not found"
)

# Loop through each review element
for review in soup.select(".paper.paper--white.paper--box"):
    review_data = {}

    # Extracting the username
    username_element = review.find("a", {"class": "link--header-color"})
    review_data["username"] = (
        username_element.get_text(strip=True)
        if username_element
        else "No username found"
    )

    # Extracting the review summary (double quotes are stripped)
    summary_element = review.find("h3", {"class": "m-0 l2"})
    summary_text = (
        summary_element.get_text(strip=True) if summary_element else "No summary found"
    )
    review_data["summary"] = summary_text.replace('"', "")

    # Extracting the review
    user_review_element = review.find("div", itemprop="reviewBody")
    review_data["review"] = (
        user_review_element.get_text(strip=True)
        if user_review_element
        else "No review found"
    )

    # Extracting the review date
    review_date_element = review.find("time")
    review_data["date"] = (
        review_date_element.get_text(strip=True)
        if review_date_element
        else "No date found"
    )

    # Extracting the review URL
    review_url_element = review.find("a", {"class": "pjax"})
    review_data["url"] = (
        review_url_element["href"] if review_url_element else "No URL found"
    )

    # Extracting the rating
    rating_element = review.find("meta", itemprop="ratingValue")
    review_data["rating"] = (
        rating_element["content"] if rating_element else "No rating found"
    )

    # Add the review data to the reviews list
    results["reviews"].append(review_data)

# Writing results to a JSON file; ensure_ascii=False keeps non-ASCII text readable
with open("G2_reviews.json", "w", encoding="utf8") as f:
    json.dump(results, f, indent=2, ensure_ascii=False)

print("Data written to G2_reviews.json")
```

Note: Don’t have an API key? Create a free ScraperAPI account and receive 5,000 API credits to test all our tools.

If you want to follow along, open your preferred editor and let’s get started!

Scraping G2 Product Reviews with Python

For this tutorial, we’ll focus on scraping ScraperAPI’s G2 reviews, collecting details like:

- Username

- Summary

- Review

- Date

- Review’s URL

- Rating

We’ll export all of this information into a JSON file (or a CSV file if you prefer) to make it easier to navigate and repurpose the way you want.

Requirements

The packages needed as prerequisites for this project are beautifulsoup4, requests, and lxml. You can install these using the following command:

```bash
pip install beautifulsoup4 requests lxml
```

You’ll also need Python installed, preferably version 3.8 or later, to avoid compatibility issues.

Understanding G2 Site Structure

Before you start scraping reviews, it’s worth understanding the HTML structure of the G2 website, as it will let you extract data more efficiently.

Use the search bar on the G2 website to search for ScraperAPI. You will get a page similar to this:

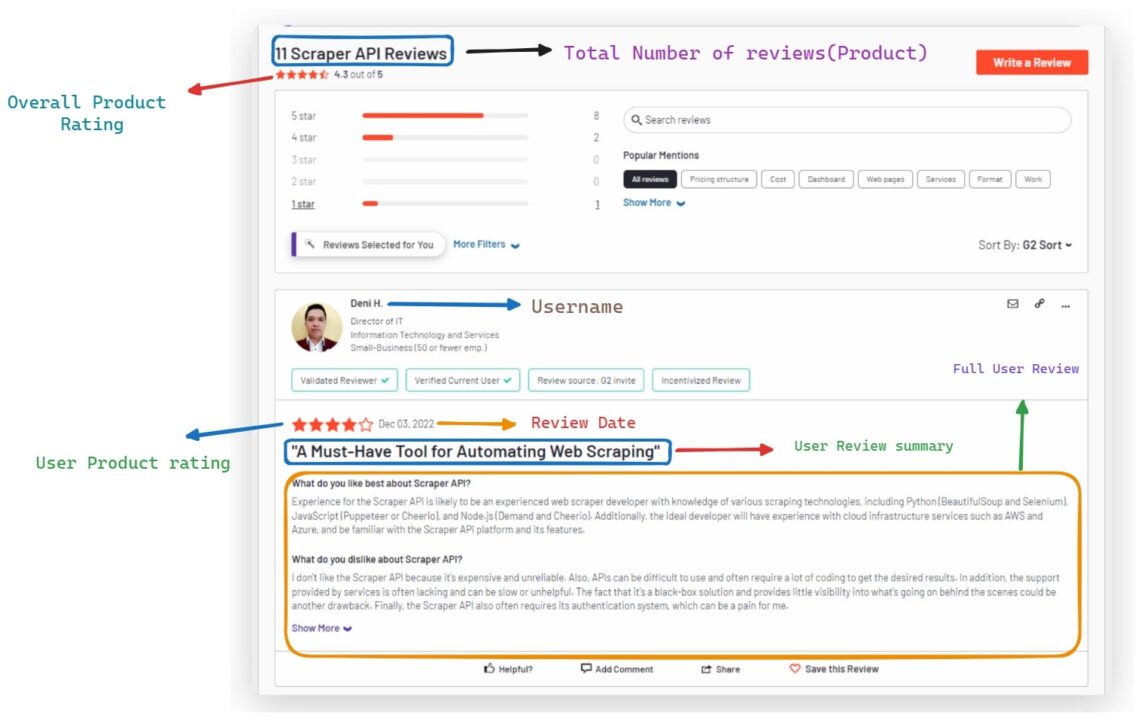

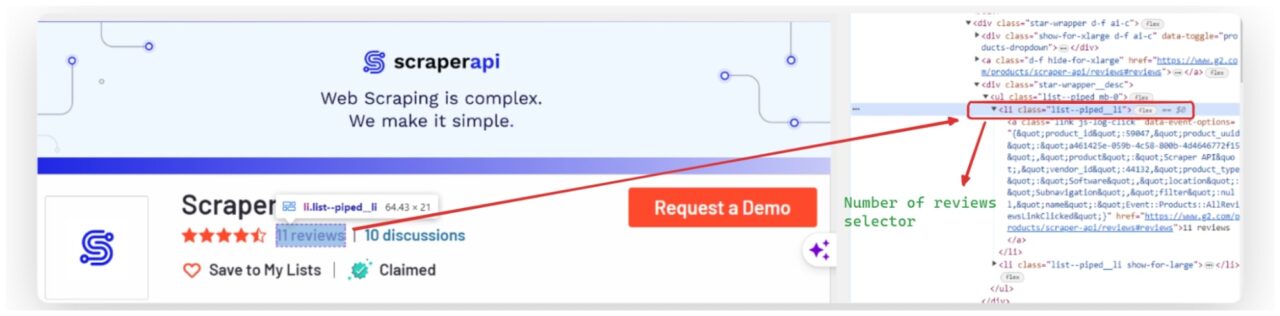

Each review on the G2 product reviews page is typically contained within distinct HTML elements with unique class names or attributes. These elements contain the data we will scrape.

We’ll use BeautifulSoup to target specific data points by identifying unique selectors such as class names, IDs, or attributes.

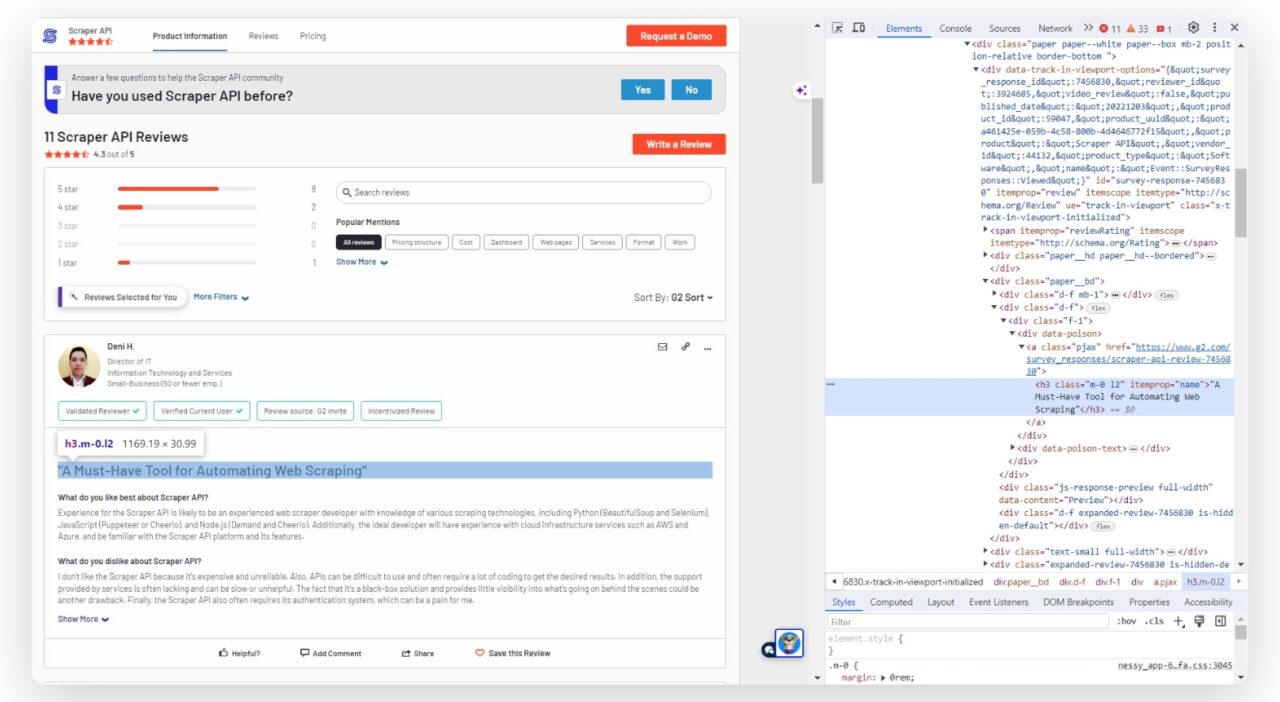

Start by opening the G2 review page and inspecting the HTML with your browser’s developer tools. This is usually accessed by right-clicking the page and selecting “Inspect“.

The HTML structure can be seen in the developer tools window:

Now that we know this, it’s a matter of taking notes of each element and its particular CSS selector.

Step 1: Setting Up Your Project

First, we need to import the necessary libraries for this project into our Python file:

```python
import requests
from bs4 import BeautifulSoup
import json
```

Next, we define the API key and the URL of the product page we want to scrape as variables.

Note: You can find your API key from your ScraperAPI dashboard.

```python
API_KEY = "your_api_key"
# Replace product_id with the product's slug from its G2 URL, e.g. scraper-api
url = "https://www.g2.com/products/product_id/reviews"
```

Note that a premium ScraperAPI subscription is recommended for scraping G2 effectively, as it provides additional capabilities suited to G2’s level of protection.


Step 2: Sending a Request and Parsing the Response

We create a payload dictionary containing our API key and the G2 URL we want to scrape, then pass it as the query parameters of a get() request to ScraperAPI. The API handles the complexities of web scraping for us, such as smart IP and header rotation and CAPTCHA handling, using machine learning and statistical analysis.

```python
payload = {"api_key": API_KEY, "url": url}
html = requests.get("https://api.scraperapi.com", params=payload)
```
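To see what actually gets sent, the sketch below builds the same query string from the payload with the standard library’s urllib.parse.urlencode. This is roughly what requests does with params=payload, though requests may normalize the final URL slightly differently; YOUR_API_KEY is a placeholder.

```python
from urllib.parse import urlencode

API_KEY = "YOUR_API_KEY"  # placeholder, not a real key
url = "https://www.g2.com/products/scraper-api/reviews"
payload = {"api_key": API_KEY, "url": url}

# urlencode percent-encodes every value, so the G2 URL's ":" and "/"
# characters can't be confused with the API endpoint's own URL structure.
query = urlencode(payload)
print(f"https://api.scraperapi.com?{query}")
```

Because the target URL is encoded as a single parameter value, ScraperAPI receives it intact and fetches that page on your behalf.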

Then, we use BeautifulSoup to parse the HTML response into a soup object, which lets us navigate the parsed tree using CSS selectors.

```python
soup = BeautifulSoup(html.text, "lxml")
```

Step 3: Extracting G2 Software Reviews

This step involves initializing a dictionary to store the results and extracting the product name, number of reviews, and user review data from the parsed HTML response.

We start by creating a dictionary called results to store the data we’ll extract.

```python
results = {"product_name": "", "number_of_reviews": "", "reviews": []}
```

Here, product_name and number_of_reviews are strings that will hold the name of the product and the total number of reviews, respectively, while reviews is a list containing dictionaries, each representing an individual review.

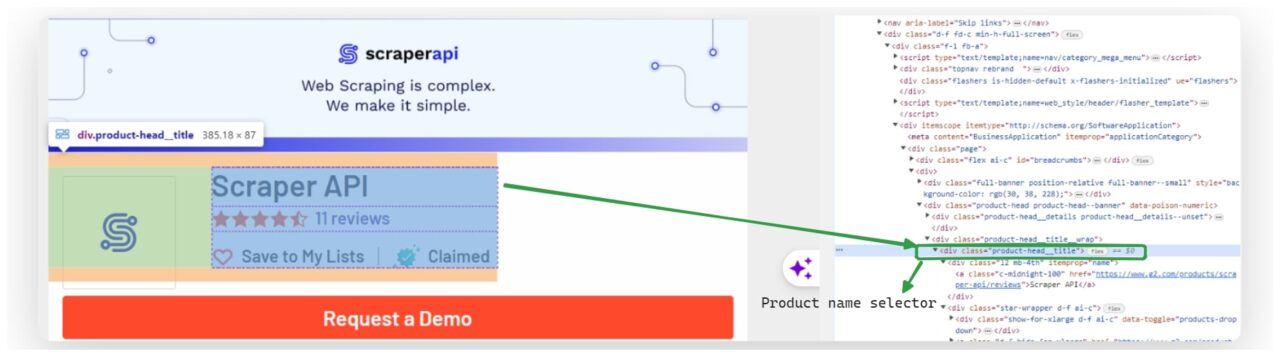

Once we have our dictionary ready, we extract the product name from the parsed HTML.

We use BeautifulSoup’s select_one() method to select the first element that matches the CSS selector .product-head__title a. We then get the text of this element and assign it to results['product_name'].

```python
product_name_element = soup.select_one(".product-head__title a")
results["product_name"] = (
    product_name_element.get_text(strip=True)
    if product_name_element
    else "Product name not found"
)
```

Similarly, we extract the number of reviews by finding the first li element with the class list--piped__li and getting its text.

```python
reviews_element = soup.find("li", {"class": "list--piped__li"})
results["number_of_reviews"] = (
    reviews_element.get_text(strip=True)
    if reviews_element
    else "Number of reviews not found"
)
```
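The number of reviews comes back as text such as "11 reviews" (see the sample output in Step 5). If you need it as a number, for example to compare products, a small regular-expression sketch like this one can pull out the digits. The helper name parse_review_count is hypothetical, and the comma handling assumes G2 uses commas as thousands separators.

```python
import re


def parse_review_count(text):
    """Return the first integer in text such as '11 reviews', or None."""
    match = re.search(r"\d[\d,]*", text)
    if match is None:
        return None  # e.g. "Number of reviews not found"
    # Drop (assumed) comma thousands separators before converting.
    return int(match.group().replace(",", ""))


print(parse_review_count("11 reviews"))  # 11
```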

After that, we loop through all the reviews on the page, selecting each one with the CSS selector .paper.paper--white.paper--box. For each review, we extract the username, summary, full review, date, URL, and rating, and store them in a new dictionary called review_data.
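The snippets in the following subsections use review and review_data inside this loop. Here is a minimal sketch of the loop itself, run against a tiny hand-written HTML sample (not real G2 markup) that reuses this tutorial’s selectors. It uses Python’s built-in "html.parser" so it works without lxml; each field extraction below goes inside the loop body.

```python
from bs4 import BeautifulSoup

# Hand-written sample that mimics the selectors used in this tutorial;
# real G2 pages wrap each review in much more markup.
sample_html = """
<div class="paper paper--white paper--box">
  <a class="link--header-color">Alice A.</a>
  <time>Dec 03, 2022</time>
</div>
<div class="paper paper--white paper--box">
  <a class="link--header-color">Bob B.</a>
  <time>Jan 15, 2023</time>
</div>
"""

soup = BeautifulSoup(sample_html, "html.parser")
results = {"reviews": []}

# One iteration per review card.
for review in soup.select(".paper.paper--white.paper--box"):
    review_data = {}

    username_element = review.find("a", {"class": "link--header-color"})
    review_data["username"] = (
        username_element.get_text(strip=True) if username_element else "No username found"
    )

    review_date_element = review.find("time")
    review_data["date"] = (
        review_date_element.get_text(strip=True) if review_date_element else "No date found"
    )

    # The summary, review body, URL, and rating are extracted the same way.

    results["reviews"].append(review_data)

print(results["reviews"])
```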

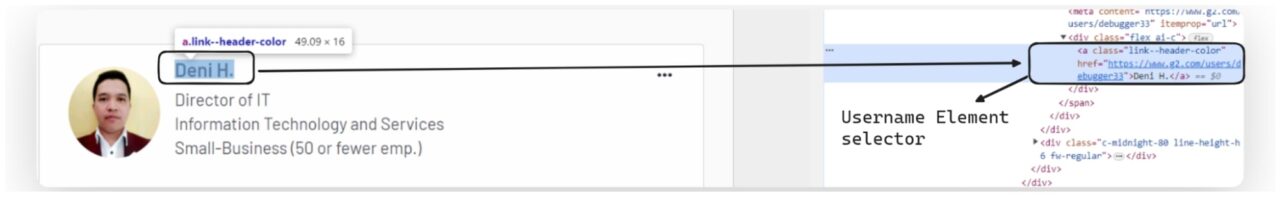

Extracting the Username

The username is typically found within an anchor (a) tag. To extract it, we look for the anchor tag with a class that indicates it contains the username.

Here, that class is link--header-color, so we use BeautifulSoup to find this a tag and get its text content.

```python
username_element = review.find("a", {"class": "link--header-color"})
review_data["username"] = (
    username_element.get_text(strip=True)
    if username_element
    else "No username found"
)
```
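Every field follows the same pattern: find an element, then fall back to a placeholder string if it’s missing. If you prefer less repetition, a small helper like the hypothetical text_or_default below (it is not part of BeautifulSoup) can wrap that pattern and behaves like the inline conditional expressions used in this tutorial. The markup is a hand-written sample.

```python
from bs4 import BeautifulSoup


def text_or_default(element, default):
    """Return the element's stripped text, or default when element is None."""
    return element.get_text(strip=True) if element is not None else default


# Hand-written sample markup for demonstration only.
review = BeautifulSoup(
    '<div><a class="link--header-color"> Deni H. </a></div>', "html.parser"
)

username = text_or_default(
    review.find("a", {"class": "link--header-color"}), "No username found"
)
# There is no h3 in the sample, so the placeholder is returned.
summary = text_or_default(review.find("h3", {"class": "m-0 l2"}), "No summary found")
print(username, "|", summary)
```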

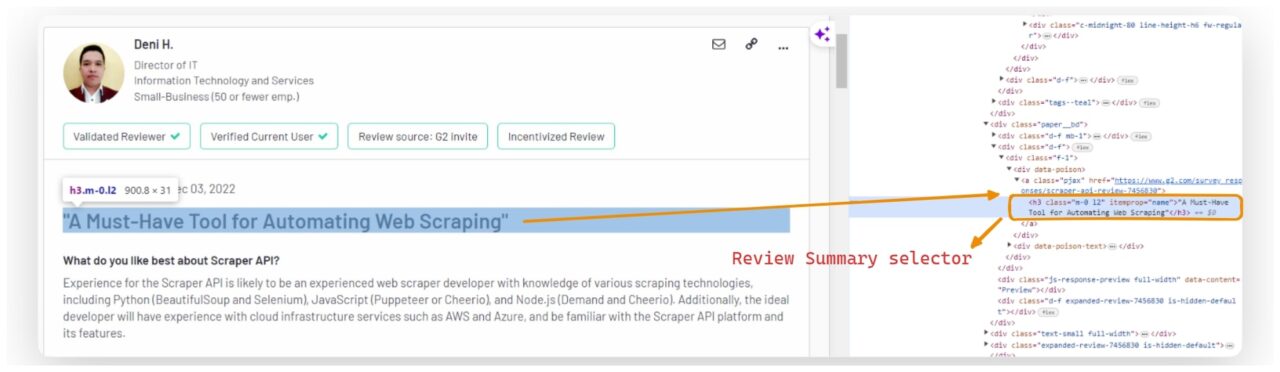

Extracting the Review Summary

The review summary is found within an h3 heading whose class is set to m-0 l2. We also remove any double quotation marks from the summary text.

```python
summary_element = review.find("h3", {"class": "m-0 l2"})
review_data["summary"] = (
    summary_element.get_text(strip=True).replace('"', "")
    if summary_element
    else "No summary found"
)
```
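One caveat with {"class": "m-0 l2"}: for a multi-valued attribute like class, BeautifulSoup matches a string containing spaces only against the attribute’s exact value, so the same classes listed in a different order won’t match. A CSS selector such as h3.m-0.l2 requires both classes in any order. The sketch below shows the difference on hand-written markup, in case G2 ever reorders its classes.

```python
from bs4 import BeautifulSoup

# Same two classes as the tutorial's selector, but in the opposite order.
review = BeautifulSoup('<h3 class="l2 m-0">Great tool</h3>', "html.parser")

# Exact-string match on the class attribute: order matters, so no match.
by_find = review.find("h3", {"class": "m-0 l2"})

# CSS selector: requires both classes, in any order.
by_select = review.select_one("h3.m-0.l2")

print(by_find)                         # None
print(by_select.get_text(strip=True))  # Great tool
```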

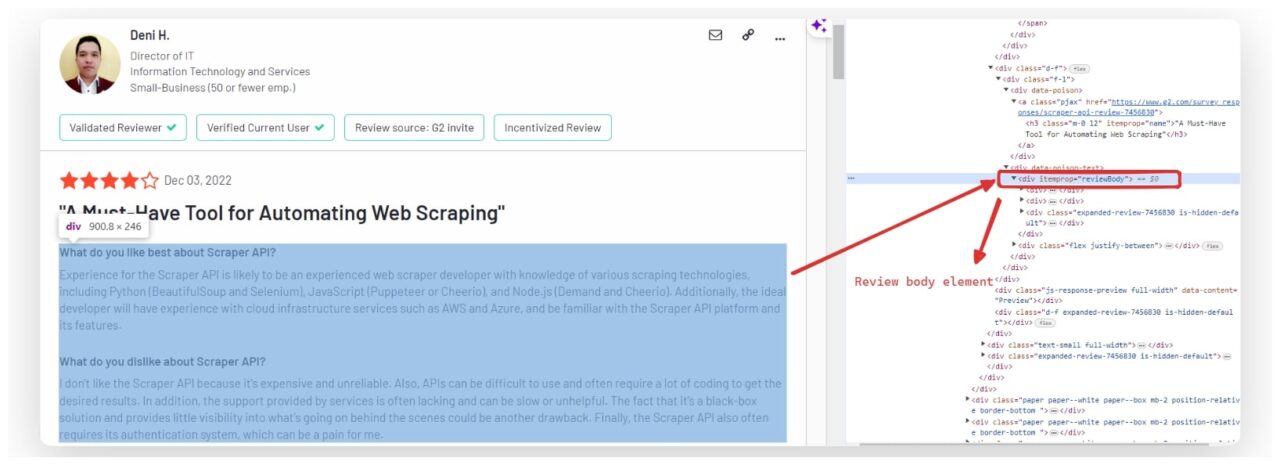

Extracting the Review Body

The full text of the review is usually contained within a div with an itemprop attribute set to reviewBody. To extract the review text, we find this div and retrieve its text content.

```python
user_review_element = review.find("div", itemprop="reviewBody")
review_data["review"] = (
    user_review_element.get_text(strip=True)
    if user_review_element
    else "No review found"
)
```
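Note that get_text(strip=True) joins the text of all child elements with no separator, which is why the sample output in Step 5 contains run-together strings like "What do you like best about Scraper API?Experience...". Passing a separator keeps the pieces apart. The markup below is a simplified stand-in, not G2’s actual review body.

```python
from bs4 import BeautifulSoup

# Simplified stand-in for a review body with one question and one answer.
html = (
    '<div itemprop="reviewBody">'
    "<h5>What do you like best?</h5><p>Easy to set up.</p>"
    "</div>"
)
user_review_element = BeautifulSoup(html, "html.parser").find(
    "div", itemprop="reviewBody"
)

# Default: the stripped child strings are concatenated directly.
joined = user_review_element.get_text(strip=True)

# With a separator: the stripped strings are joined with a newline.
separated = user_review_element.get_text(separator="\n", strip=True)

print(joined)  # What do you like best?Easy to set up.
print(separated)
```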

Extracting the Review Date

The date of the review is often located within a time element.

```python
review_date_element = review.find("time")
review_data["date"] = (
    review_date_element.get_text(strip=True)
    if review_date_element
    else "No date found"
)
```
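Dates come back as display strings such as "Dec 03, 2022" (see Step 5). If you plan to sort reviews or track ratings over time, converting them to ISO format helps. This sketch assumes every date follows the "Mon DD, YYYY" pattern seen in the sample output and English month abbreviations; anything that doesn’t parse, such as the placeholder, is returned unchanged. The helper name to_iso_date is hypothetical.

```python
from datetime import datetime


def to_iso_date(text):
    """Convert 'Dec 03, 2022' to '2022-12-03'; return text unchanged if it doesn't parse."""
    try:
        return datetime.strptime(text, "%b %d, %Y").date().isoformat()
    except ValueError:
        return text  # e.g. "No date found"


print(to_iso_date("Dec 03, 2022"))  # 2022-12-03
```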

Extracting the Review URL

To extract the URL of each review, we read the href attribute of an anchor tag. Specifically, we want the a tag with the class pjax, which G2 uses for links to individual reviews.

Here’s how we can include this in our extraction process:

```python
review_url_element = review.find("a", {"class": "pjax"})
review_data["url"] = (
    review_url_element["href"] if review_url_element else "No URL found"
)
```
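The sample output in Step 5 shows an absolute review URL, but if a page ever returns a relative href (for example one starting with /survey_responses/), you can resolve it against the site root with urljoin from the standard library. Absolute URLs pass through unchanged. The base URL and the helper name absolute_review_url are assumptions for illustration.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.g2.com"  # assumed site root


def absolute_review_url(href):
    """Resolve href against BASE_URL, keeping the tutorial's placeholder when it's missing."""
    if not href:
        return "No URL found"
    return urljoin(BASE_URL, href)


print(absolute_review_url("/survey_responses/scraper-api-review-7456830"))
print(absolute_review_url("https://www.g2.com/survey_responses/scraper-api-review-7456830"))
```

In the loop, you would call it as absolute_review_url(review_url_element.get("href") if review_url_element else None), so the placeholder is never joined onto the base URL.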

Extracting the Rating

Ratings are found within a meta tag with an attribute itemprop set to ratingValue. We search for this tag and extract its content attribute to get the rating value.

```python
rating_element = review.find("meta", itemprop="ratingValue")
review_data["rating"] = (
    rating_element["content"] if rating_element else "No rating found"
)
```

Each piece of data is then stored in a dictionary called review_data. After extracting the data for each review, we append review_data to results['reviews'].

```python
results["reviews"].append(review_data)
```

By the end of this step, the results will be a dictionary containing the product name, number of reviews, and a list of dictionaries, each representing an individual review.


Step 4: Write the Results to a JSON File

We serialize the scraped data to JSON and write it to a file named G2_reviews.json using json.dump(), which takes the data and a file object and writes the JSON to that file. The indent=2 argument pretty-prints the output.

```python
with open("G2_reviews.json", "w", encoding="utf8") as f:
    # ensure_ascii=False writes non-ASCII characters as-is instead of \uXXXX escapes
    json.dump(results, f, indent=2, ensure_ascii=False)

print("Data written to G2_reviews.json")
```

The encoding="utf8" argument opens the file as UTF-8, the most common encoding for web content.

UTF-8 supports all Unicode characters, which matters when a review contains special characters or emojis. By default, json.dump() escapes non-ASCII characters as \uXXXX sequences; passing ensure_ascii=False writes them as-is, so the file stays human-readable and relies on the UTF-8 encoding to store them.
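We mentioned earlier that you can export to CSV instead of JSON. Since each review is a flat dictionary with the same keys, the standard library’s csv.DictWriter can write them directly, one row per review. This is a minimal sketch: the filename G2_reviews.csv and the one-review sample list are illustrative stand-ins for results["reviews"].

```python
import csv

# Illustrative stand-in for results["reviews"] from the scraper.
reviews = [
    {
        "username": "Deni H.",
        "summary": "A Must-Have Tool for Automating Web Scraping",
        "review": "Sample review text, with a comma.",
        "date": "Dec 03, 2022",
        "url": "https://www.g2.com/survey_responses/scraper-api-review-7456830",
        "rating": "4.0",
    },
]

fieldnames = ["username", "summary", "review", "date", "url", "rating"]

# newline="" lets the csv module control line endings, as its docs recommend;
# fields containing commas or newlines are quoted automatically.
with open("G2_reviews.csv", "w", newline="", encoding="utf8") as f:
    writer = csv.DictWriter(f, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(reviews)

print("Data written to G2_reviews.csv")
```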

Step 5: Testing the G2 Scraper

After running your script, the results are stored in a JSON file named G2_reviews.json, which should look like this:

```json
{
  "product_name": "Scraper API",
  "number_of_reviews": "11 reviews",
  "reviews": [
    {
      "username": "Deni H.",
      "summary": "A Must-Have Tool for Automating Web Scraping",
      "review": "What do you like best about Scraper API?Experience for the Scraper API is likely to be an experienced web scraper developer with knowledge of various scraping technologies, including Python (BeautifulSoup and Selenium), JavaScript (Puppeteer or Cheerio), and Node.js (Demand and Cheerio). Additionally, the ideal developer will have experience with cloud infrastructure services such as AWS and Azure, and be familiar with the Scraper API platform and its features.Review collected by and hosted on G2.com.What do you dislike about Scraper API?I don't like the Scraper API because it's expensive and unreliable. Also, APIs can be difficult to use and often require a lot of coding to get the desired results. In addition, the support provided by services is often lacking and can be slow or unhelpful. The fact that it's a black-box solution and provides little visibility into what's going on behind the scenes could be another drawback. Finally, the Scraper API also often requires its authentication system, which can be a pain for me.Review collected by and hosted on G2.com.What problems is Scraper API solving and how is that benefiting you?Despite the drawbacks, there are a few scenarios where using the Scraper API could be useful to me. For example, if I need to quickly and easily access large amounts of data from multiple sources without having to write complex code, the Scraper API might be one solution. Additionally, the scalability of the service makes it ideal for high-volume scraping tasks that are difficult to achieve with manual coding. Finally, the fact that it's managed by a third party means I don't have to worry about updating my code or making sure it works with new versions of websites.Review collected by and hosted on G2.com.",
      "date": "Dec 03, 2022",
      "url": "https://www.g2.com/survey_responses/scraper-api-review-7456830",
      "rating": "4.0"
    },
    ...
  ]
}
```

What Can You Do with the G2 Data

After scraping G2 reviews and exporting them to a JSON file, you can use the data during market research to gain insights about the product. You can calculate the average rating, make data-driven decisions, or track changes in ratings over time.
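As an example of the first idea, here is a sketch that averages the ratings in a results dictionary shaped like this tutorial’s output. It converts each rating string to a number and skips placeholders such as "No rating found". The helper name average_rating and the three-review sample are illustrative; in practice you would load the dictionary from G2_reviews.json.

```python
import json


def average_rating(results):
    """Mean of the numeric ratings in a results dict; placeholders are skipped."""
    ratings = []
    for review in results["reviews"]:
        try:
            ratings.append(float(review["rating"]))
        except (KeyError, ValueError):
            continue  # e.g. "No rating found"
    return sum(ratings) / len(ratings) if ratings else None


# In practice:
#   with open("G2_reviews.json", encoding="utf8") as f:
#       results = json.load(f)
# Illustrative sample with the same shape as the scraper's output:
results = json.loads(
    '{"reviews": [{"rating": "4.0"}, {"rating": "5.0"}, {"rating": "No rating found"}]}'
)
print(average_rating(results))  # 4.5
```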

This review data is particularly valuable to businesses looking to improve customer support, as understanding common issues and complaints from reviews can help you address these problems proactively.

You can use these reviews to improve your FAQs, create targeted support materials, or train your customer service team to handle recurring issues more effectively.

You can also use this process for competitive analysis: find out what your competitors’ customers are saying about them, then use the weaknesses you uncover to improve your software or promote features they lack.

Legal and Ethical Considerations When Scraping G2

G2 relies heavily on Cloudflare challenges to protect its site. While most data on G2.com is publicly accessible, you should scrape it in a way that doesn’t harm the website.

Always consult and adhere to G2’s Terms of Service and community guidelines, which outline permissible data collection and use. Attempting to bypass any form of access control, such as login walls, is unethical and potentially illegal.

Furthermore, if your scraping activities involve collecting personal data, such as reviewer emails, you must be mindful of regulations like the GDPR in the EU, which impose strict rules on handling personal data.

Wrapping Up

G2.com is a valuable resource for company reviews and comparisons, allowing you to collect direct feedback from customers to make marketing and business decisions.

In this tutorial, we have:

- Gone through the essential steps of sending HTTP requests, parsing HTML, and exporting all reviews found into a JSON file

- Used ScraperAPI to get past G2’s robust security measures and extract data without triggering a site ban

- Understood the legal implications of scraping G2 and how to keep your scrapers compliant

We hope you learned something new today. If you have any questions, contact our support team. We’re eager to help!

Working on a large project? Collecting data at an enterprise level doesn’t have to break the bank. Contact our sales team if you need more than 3M API credits, and let our experts build a custom plan for you.

Until next time, happy scraping!