Web scraping with Python is one of the easiest and fastest ways to build large datasets, thanks in large part to Python’s English-like syntax and wide range of data tools.

However, learning everything on your own might be tricky – especially for beginners.

To help you on this journey, let us walk you through the basics of Python web scraping, its benefits, and best practices.

Why is Python Good for Web Scraping?

Thanks to Python’s popularity, there are a lot of different frameworks, guides, web scraping tutorials, resources, and communities available to keep improving your craft.

What makes it an even more viable choice is that Python has become the go-to language for data analysis, resulting in a plethora of frameworks and tools for data manipulation that give you more power to process the scraped data.

So if you’re interested in scraping websites with Python to build huge data sets and then manipulating and analyzing them, this is exactly the guide you’re looking for.

Web Scraping with Python and Beautiful Soup

In this Python web scraping tutorial, we’re going to scrape an Indeed job search page (web developer jobs in New York) to gather the following for each job listing:

- Job title

- Name of the company hiring

- Location

- URL of the job post

After collecting all job listings, we’ll format them into a CSV file for easy analysis.

Web scraping can be divided into a few steps:

- Send a request to the server for the page’s source code/content

- Download the response (usually HTML)

- Parse the downloaded HTML to identify and extract the information we need

While our example involves Indeed, you can follow the same steps for almost any web scraping project.

Just remember that every page is different, so the logic can vary slightly from project to project.

With that said, let’s jump into our first step:

Understanding Page Structure

All web scrapers follow this same logic at their core. Before we start coding our Python web scraper, though, it helps to understand how web pages are typically structured, so let’s look at the components of a typical page.

Most modern web pages can be broken down into two main building blocks, HTML and CSS.

HTML for Web Scraping

HyperText Markup Language (HTML) is the foundation of the web. This markup language uses tags to tell the browser how to display the content when we access a URL.

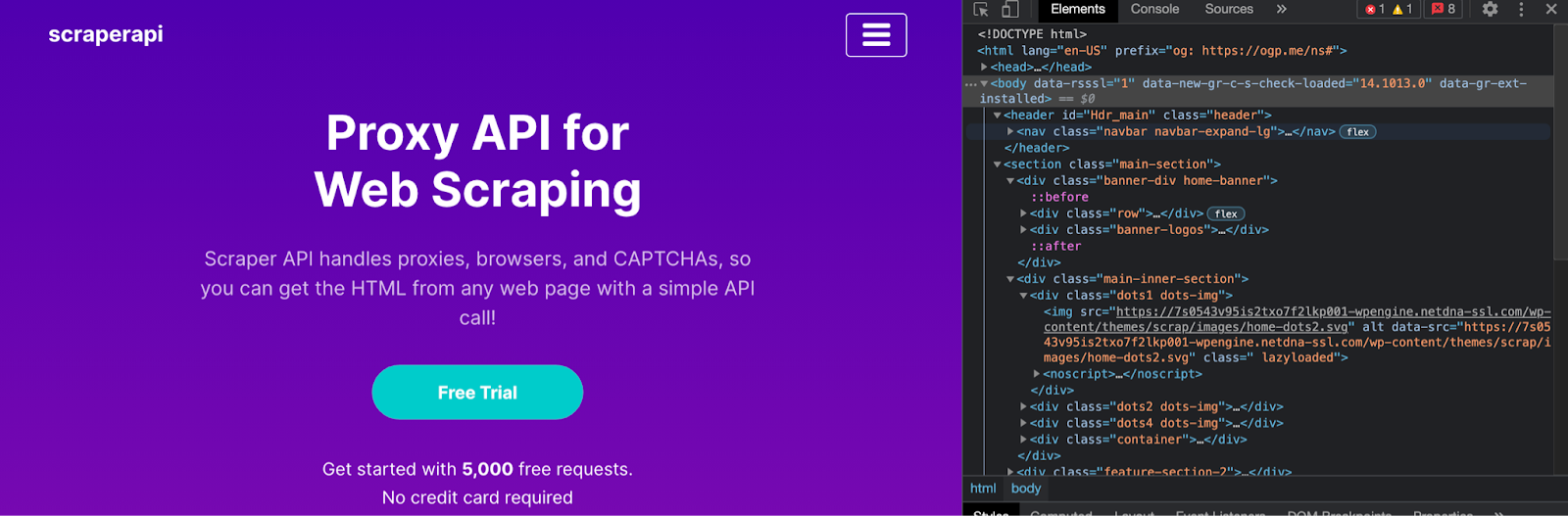

If we go to the ScraperAPI homepage and press Ctrl+Shift+C (Cmd+Shift+C on a Mac) to open the inspector tool, we’ll be able to see the HTML source code of the page.

Although the HTML code can look very different from website to website, the basic structure remains the same.

The entire document begins and ends with <html></html> tags. Inside them, we’ll find the <head></head> tags, which hold the page’s metadata, and the <body></body> tags, which hold all the content, making the body our main target.

Something else to notice is that all tags are nested inside other tags.

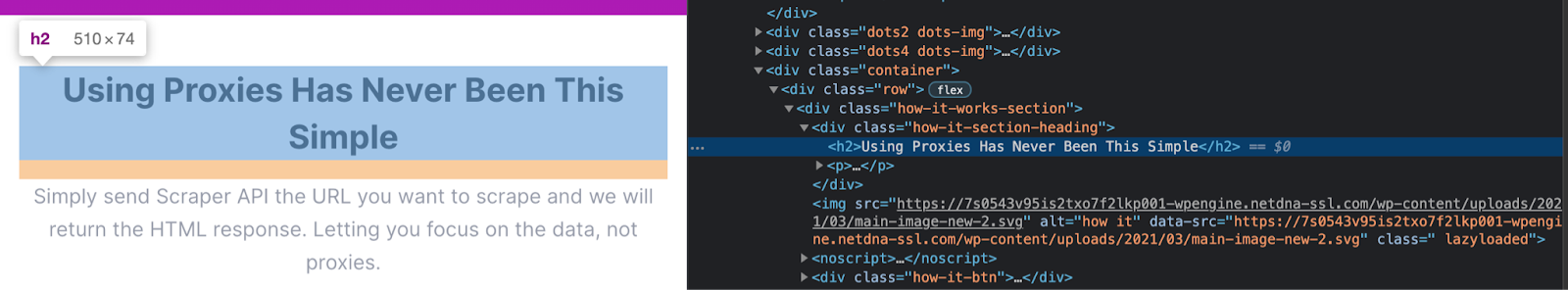

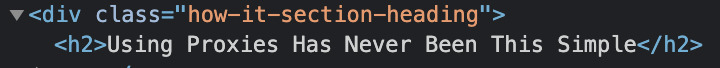

In the image above, we can see that the title text is inside an <h2> tag, which is inside a div nested within another div.

This matters because, when scraping a site, we’ll use its HTML tags to find the bits of information we want to extract.

Here are a few of the most common tags:

- div – specifies an area or section on a page. Divs are mostly used to organize the page’s content.

- h1 to h6 – define headings.

- p – tells the browser the content is a paragraph.

- a – tells the browser the text or element is a link to another page. This tag is used alongside an href attribute that contains the target URL of the link.

Note: for a complete list, check W3Schools’ HTML tag list.
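To see how these tags and their nesting look to a scraper, here’s a small sketch using Beautiful Soup (which we install in Step 3). It parses a hand-written, hypothetical page rather than a real site, so the class names, text, and URL are made up for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical page using the tags listed above
html = """
<html>
  <head><title>Example page</title></head>
  <body>
    <div class="card">
      <div class="card-header">
        <h2>Junior Web Developer</h2>
      </div>
      <p>Build and maintain our marketing site.</p>
      <a href="https://www.example.com/jobs/123">Apply</a>
    </div>
  </body>
</html>
"""

soup = BeautifulSoup(html, 'html.parser')

# Tags are nested, so we can walk down the tree: body > div > div > h2
print(soup.body.div.div.h2.text)

# find() returns the first matching tag anywhere in the document
print(soup.find('p').text)

# Attributes such as href are read with dictionary-style access
print(soup.find('a')['href'])
```

Each attribute access like soup.body.div returns the first matching descendant, which is why walking down div.div reaches the heading.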

CSS for Web Scraping

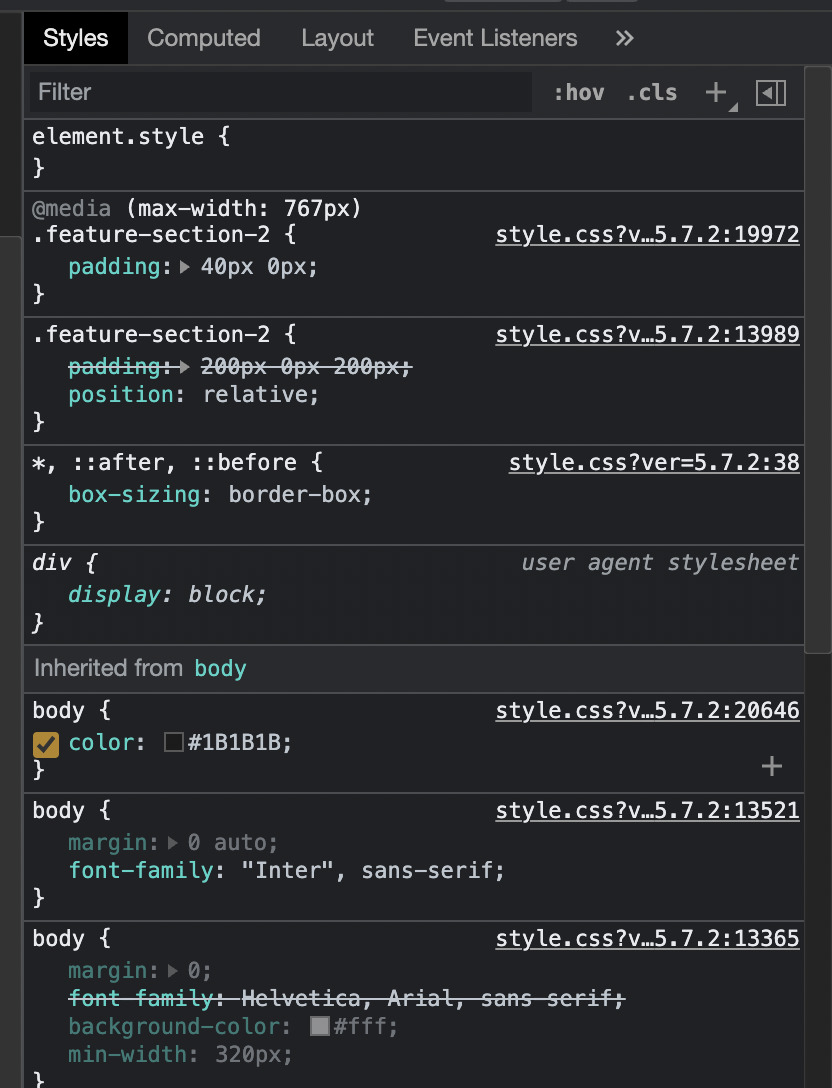

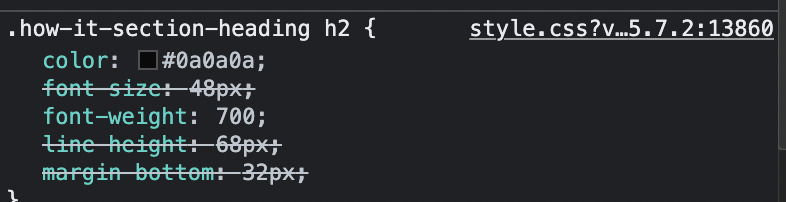

Cascading Style Sheets (CSS) is a language used to style HTML elements. In other words, it tells the browser how the content specified in the HTML document should look when rendered.

CSS example from the ScraperAPI homepage

But why do we care about the aesthetics of the site when scraping? Well, we really don’t.

The beauty of CSS is that we can use CSS selectors to help our Python scraper identify elements within a page and extract them for us.

When we write CSS, we add classes and IDs to our HTML elements and then use selectors to style them.

In this example, we used class="how-it-section-heading" to style the heading of the section.

Note: the dot (.) means class, so the selector .how-it-section-heading in the code above selects all elements with the class how-it-section-heading. (A hash, #, selects an element by its ID instead.)
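Beautiful Soup’s select() method accepts these same CSS selectors. Here’s a short sketch run against a hypothetical snippet that reuses the how-it-section-heading class; the other-heading class and the intro id are made up for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical markup reusing the class from the example above
html = """
<section>
  <h2 class="how-it-section-heading">How it works</h2>
  <h2 class="other-heading">Pricing</h2>
  <p id="intro" class="how-it-section-heading">Send a request, get HTML back.</p>
</section>
"""
soup = BeautifulSoup(html, 'html.parser')

# ".how-it-section-heading" selects every element with that class, whatever its tag
headings = [el.text for el in soup.select('.how-it-section-heading')]
print(headings)  # ['How it works', 'Send a request, get HTML back.']

# Prefixing a tag name narrows it down to <h2> elements with that class
h2_only = [el.text for el in soup.select('h2.how-it-section-heading')]
print(h2_only)  # ['How it works']

# "#intro" selects by id instead of class
intro = soup.select_one('#intro').text
print(intro)  # Send a request, get HTML back.
```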

Now that we understand the basics, it’s time to start building our scraper using Python, Requests, and Beautiful Soup.

Step 1: Use Python’s Requests Library to Download the Page

The first thing we want our scraper to do is to download the page we want to scrape.

For this, we’ll use the Requests library to send a GET request to the server.

To install the Requests library, go to your terminal and type pip3 install requests.

Now, we can create a new Python file called soup_scraper.py and import the library into it. Requests lets us send an HTTP request, receive the HTML code as the response, and store it in a Python object.

```python
import requests

url = 'https://www.indeed.com/jobs?q=web+developer&l=New+York'

# send a GET request and store the server's response in page
page = requests.get(url)

# the raw HTML of the page (as bytes)
print(page.content)
```

print(page.content) logs the response stored in the page variable to the terminal. At this point it’s just a huge string of HTML code, but it confirms the request worked.

Another way to verify that the URL is working is by using print(page.status_code). If it returns a 200 status, it means the page was downloaded successfully.
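If you’d rather have the script stop than try to parse an error page, you can wrap that status check in a helper. This is a minimal sketch; the download_page name and the 10-second timeout are our own choices, not part of Requests.

```python
import requests


def download_page(url, timeout=10):
    """Fetch url and return the raw HTML bytes, or raise if the status isn't 200."""
    # timeout (our assumption) keeps a stalled server from hanging the script
    page = requests.get(url, timeout=timeout)
    if page.status_code != 200:
        raise RuntimeError(f'Request to {url} returned status {page.status_code}')
    return page.content
```

You would then call html = download_page('https://www.indeed.com/jobs?q=web+developer&l=New+York') in place of the bare requests.get() call.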

Step 2: Inspect Your Target Website Using the Browser’s Dev Tools

Here’s where those minutes spent learning about page structure will pay off.

Before we can use Beautiful Soup to parse the HTML we just downloaded, we need to make sure we know how to identify each element in it so we can select them appropriately.

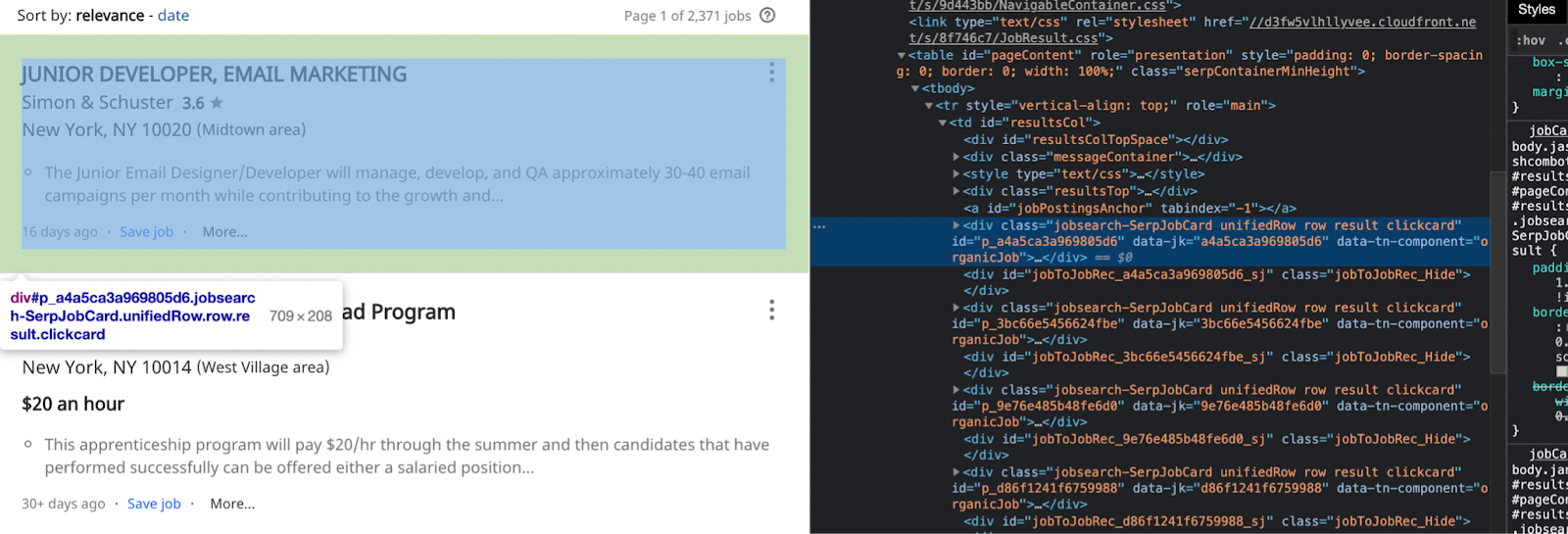

Go to the Indeed URL and open the dev tools. The quickest way to do this is to right-click on the page and select “Inspect.” Now we can start exploring the elements we want to scrape.

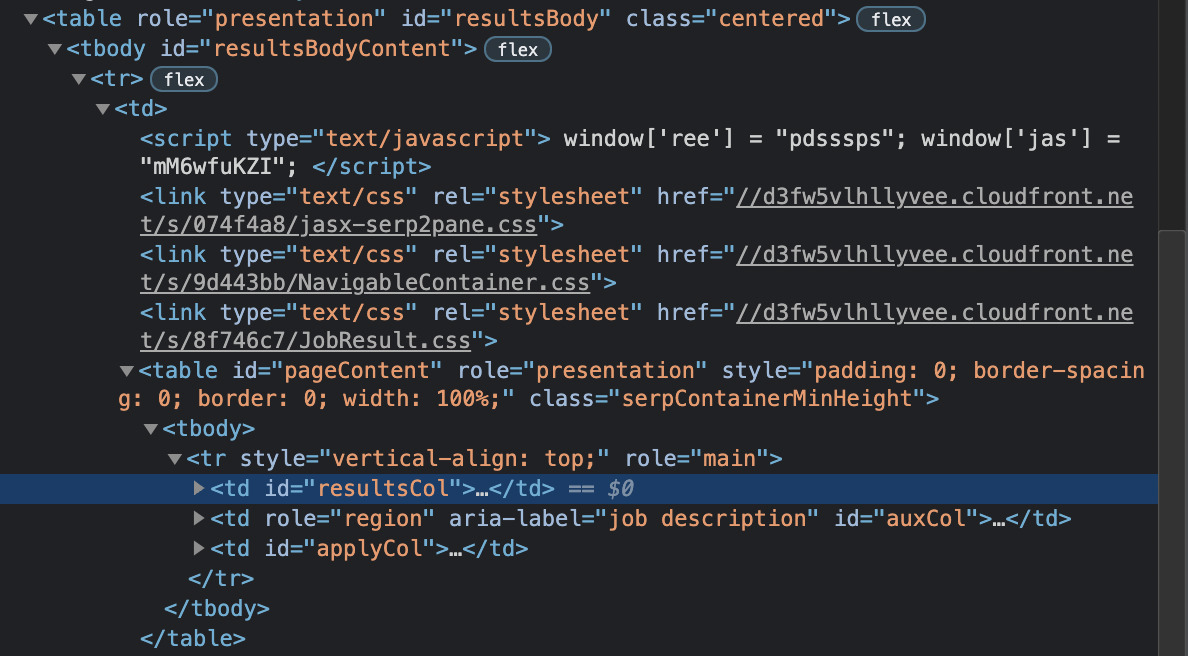

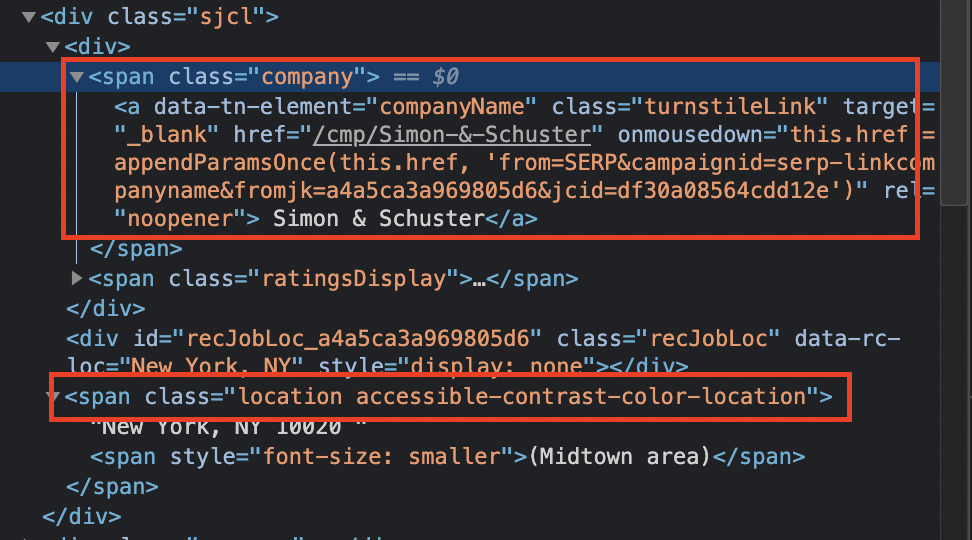

At the time of writing, all the content we want to scrape seems to be wrapped inside a td tag with the id resultsCol.

Note: this page’s structure is a little messy, so don’t worry if you have trouble finding the elements. If you press Ctrl+F in the inspection panel, you can search for the elements you’re looking for. Here’s an overview of the HTML of the page so you can find td id="resultsCol" more easily.

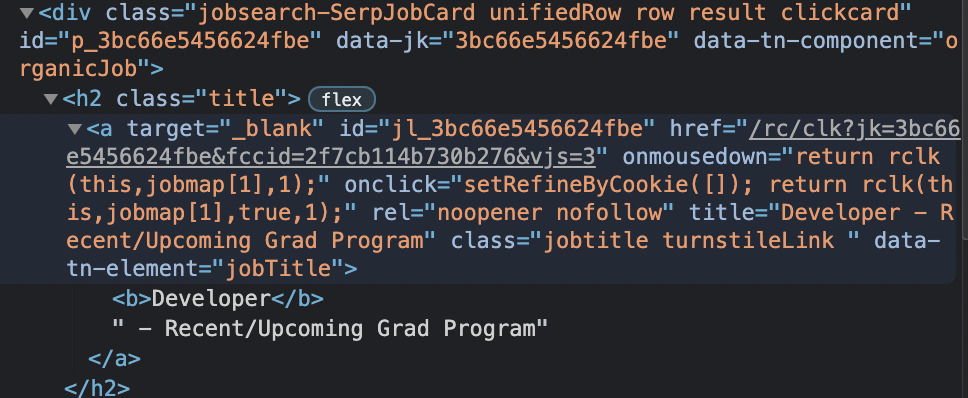

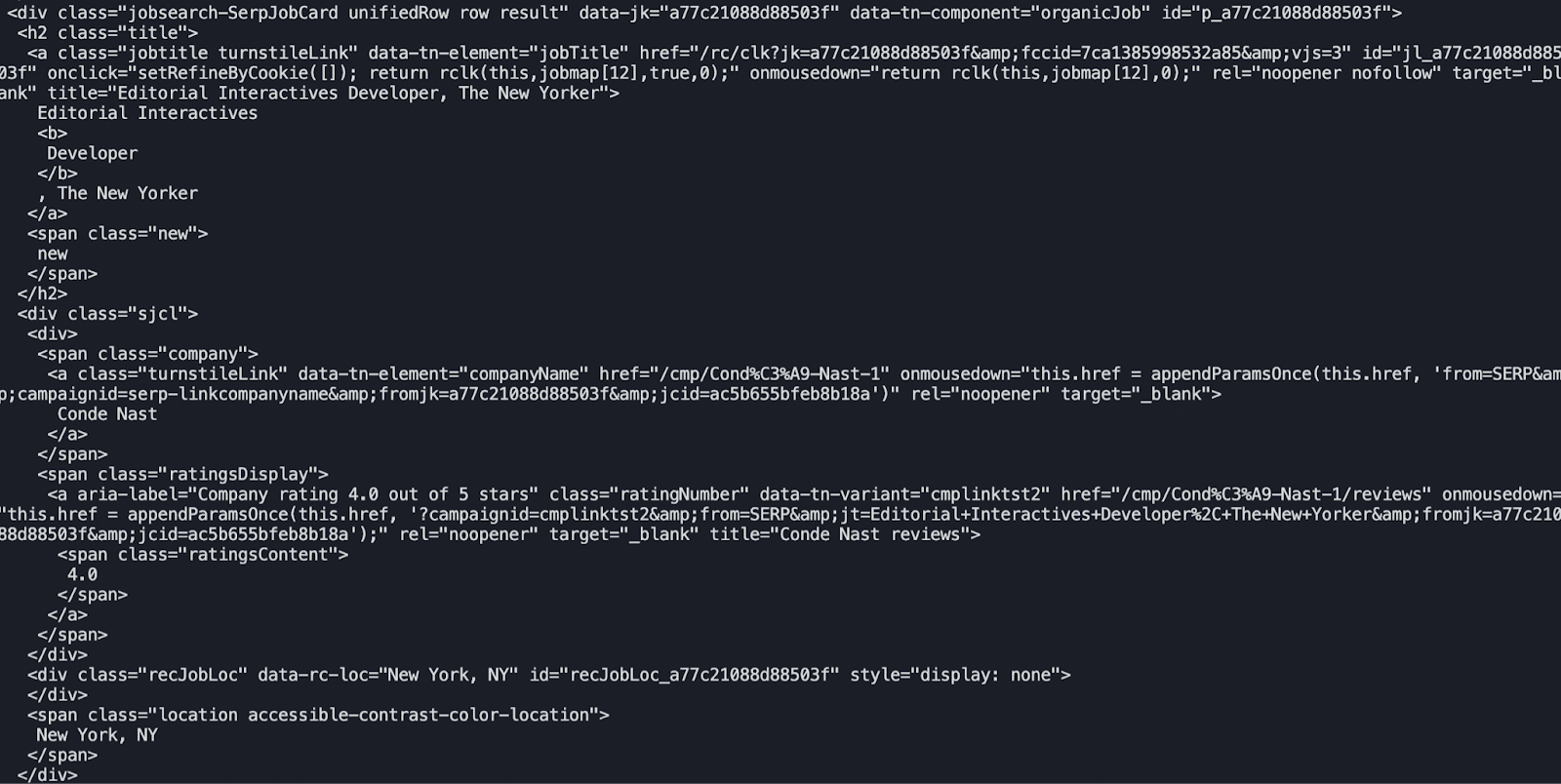

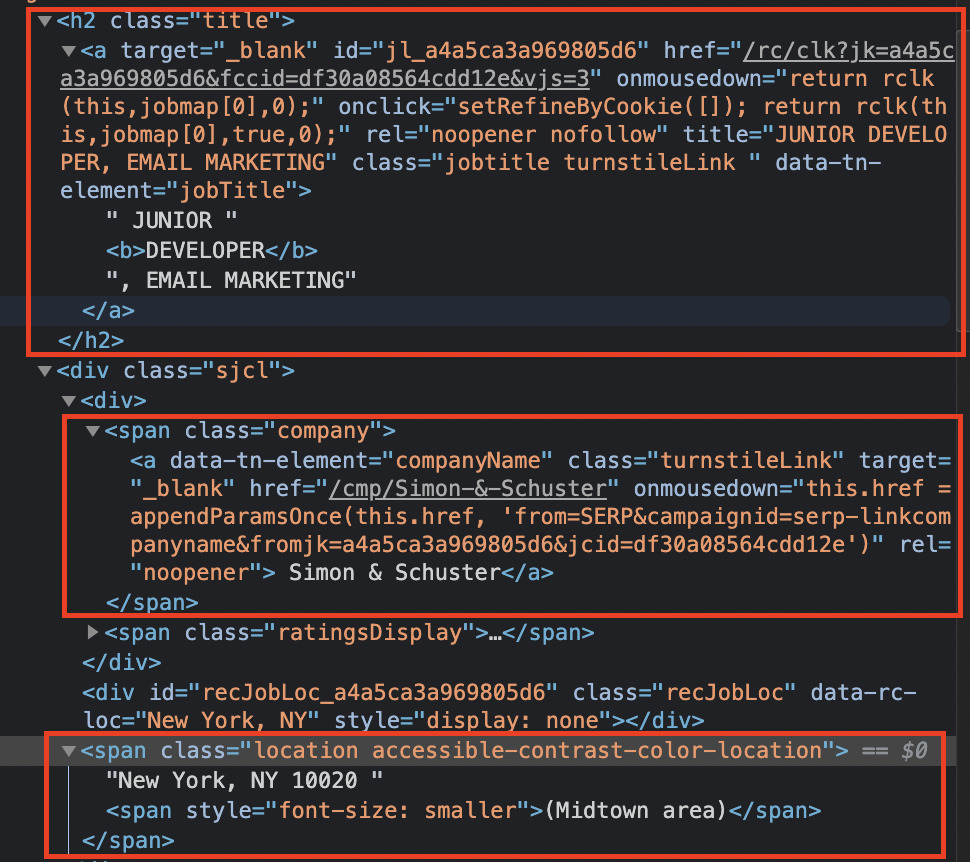

Here it looks like every job result is structured as a card, contained within a div with class="jobsearch-SerpJobCard unifiedRow row result clickcard".

We’re getting closer to the information we’re looking for. Let’s inspect these divs a little closer.

We can find the job title within the <a> tag with class="jobtitle turnstileLink ", inside the h2 tag with class="title". That <a> tag also holds the link we’ll be pulling.

The company name and location are enclosed within the same card div, in elements with class="company" and class="location accessible-contrast-color-location", respectively.

Step 3: Parse HTML with Beautiful Soup

Let’s go back to our terminal, this time to install Beautiful Soup using pip3 install beautifulsoup4. Once it’s installed, we can import it into our project and create a Beautiful Soup object to parse the page.

```python
import requests
from bs4 import BeautifulSoup

url = 'https://www.indeed.com/jobs?q=web+developer&l=New+York'
page = requests.get(url)

# parse the downloaded HTML with Python's built-in html.parser
soup = BeautifulSoup(page.content, 'html.parser')
```

If you remember the id of the HTML tag containing our target elements, you can now find it using results = soup.find(id='resultsCol').

To make sure it’s working, we’re going to print the result, using prettify() so the logged content is easier to read.

```python
import requests
from bs4 import BeautifulSoup

url = 'https://www.indeed.com/jobs?q=web+developer&l=New+York'
page = requests.get(url)
soup = BeautifulSoup(page.content, 'html.parser')

# find() returns the first element with this id, or None if there isn't one
results = soup.find(id='resultsCol')

# prettify() returns the HTML indented with one tag per line
print(results.prettify())
```

The result will look something like this:

It’s definitely easier to read, but still not very usable. However, our scraper is working, so that’s good!

Step 4: Target CSS Classes with Beautiful Soup

So far we’ve created a new Beautiful Soup object called results that shows us all the information inside our main element.

Let’s dig deeper into it by making our Python scraper find the elements we actually want from within the results object.

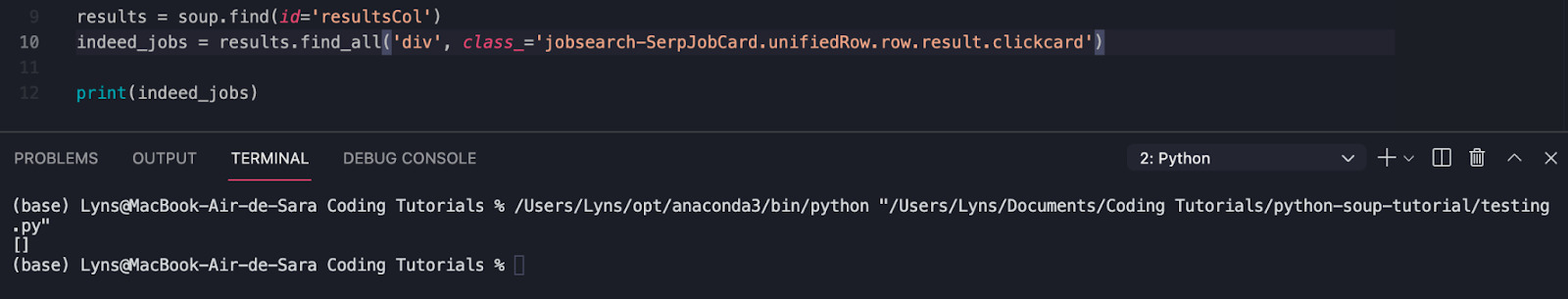

As we’ve seen before, all job listings are wrapped in a div with the class jobsearch-SerpJobCard unifiedRow row result clickcard, so we’ll call find_all to select these elements from the rest of the HTML:

```python
indeed_jobs = results.find_all('div', class_='jobsearch-SerpJobCard unifiedRow row result clickcard')
```

And after running it… nothing: find_all() returns an empty list.

Our scraper couldn’t find the div. But why? There are many possible reasons, and this happens frequently when building a scraper. Let’s see if we can figure out what’s going on.

When an element has spaces in its class attribute, it has several classes assigned to it. Something that has worked for us in the past is replacing each space with a dot (.).

Sadly, this didn’t work either.

This experimentation is part of the process, and you’ll find yourself doing several iterations before finding the answer. Here’s what eventually worked for us:

```python
indeed_jobs = results.select('div.jobsearch-SerpJobCard.unifiedRow.row.result')
```

Unlike find_all(), select() takes a CSS selector, so every dot (.) represents a class, just like in CSS, and an element matches as long as it has all the listed classes, in any order. We also had to delete the last class (clickcard) for the selector to match.

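Note: if you want to keep using find_all() to pick the element, another solution is indeed_jobs = results.find_all(class_='jobsearch-SerpJobCard unifiedRow row result'). Keep in mind that when you pass a string containing spaces to class_, Beautiful Soup compares it with the exact value of the class attribute, so the classes must appear in exactly that order with nothing extra.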
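To see the difference between the two approaches, here’s a small experiment on hypothetical markup (two cards with the same classes in a different order, not Indeed’s real HTML). One plausible explanation for our first failed attempt is that the class attribute in the downloaded HTML didn’t match the string exactly, for example because clickcard wasn’t in it.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: two cards with the same classes in a different order
html = """
<td id="resultsCol">
  <div class="jobsearch-SerpJobCard unifiedRow row result">Job A</div>
  <div class="unifiedRow jobsearch-SerpJobCard result row">Job B</div>
</td>
"""
soup = BeautifulSoup(html, 'html.parser')
results = soup.find(id='resultsCol')

# A class_ string with spaces is compared with the whole class attribute,
# so only the card whose classes appear in exactly this order matches
exact = results.find_all('div', class_='jobsearch-SerpJobCard unifiedRow row result')

# A CSS selector only requires each class to be present, in any order
any_order = results.select('div.jobsearch-SerpJobCard.unifiedRow.row.result')

# Requiring a class the markup doesn't have (here clickcard) matches nothing
missing_class = results.select('div.jobsearch-SerpJobCard.unifiedRow.row.result.clickcard')

print([card.text for card in exact])      # ['Job A']
print([card.text for card in any_order])  # ['Job A', 'Job B']
print(len(missing_class))                 # 0
```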

Step 5: Scrape Data with Python

We’re close to finishing our scraper. This last step uses everything we’ve learned to extract just the bits of information we care about.

All our elements have a very descriptive class we can use to find them within the div.

We just have to update our code by adding the following snippet:

```python
for indeed_job in indeed_jobs:
    # each find() returns the first matching element inside this job card
    job_title = indeed_job.find('h2', class_='title')
    job_company = indeed_job.find('span', class_='company')
    job_location = indeed_job.find('span', class_='location accessible-contrast-color-location')
```

Then we print() each new variable’s .text, which extracts only the text within the element. Without .text, we’d get the entire element, HTML tags included, which would just add noise to our data.

```python
    # still inside the for loop
    print(job_title.text)
    print(job_company.text)
    print(job_location.text)
```

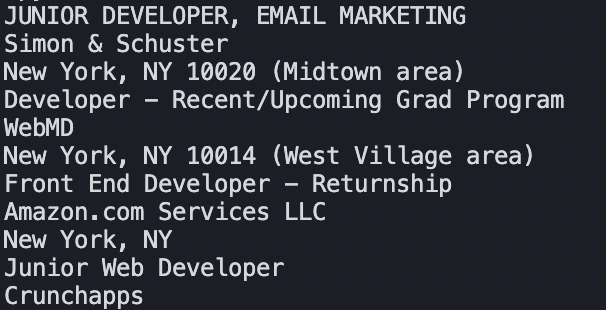

After running our scraper, the results will look like this:

```
JUNIOR DEVELOPER, EMAIL MARKETING
Simon & Schuster
New York, NY 10020 (Midtown area)
Junior Web Developer
Crunchapps
New York, NY 10014 (West Village area)
```

Notice that the printed response contains a lot of white space. To get rid of it, we’ll call the .strip() string method on each value, for example print(job_title.text.strip()).

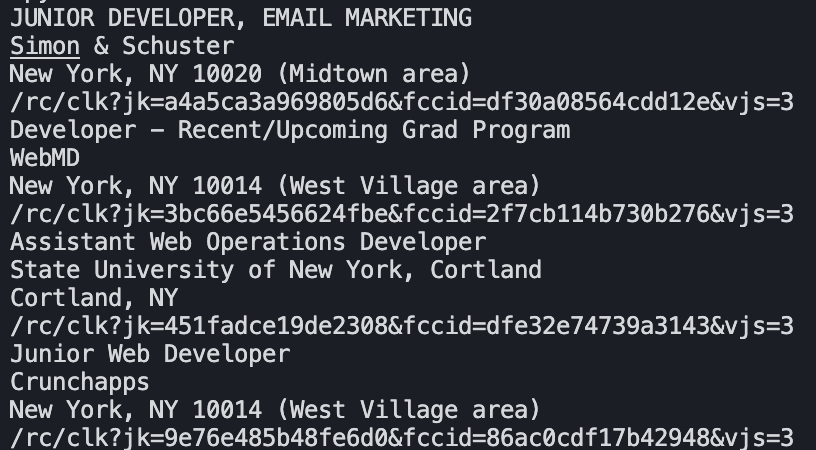

As a result:

Our data looks cleaner and it will be easier to format in a CSV or JSON file.

Step 6: Scrape URLs in Python

To extract the URL within the href attribute of the <a></a> tag, we write job_url = indeed_job.find('a')['href'] to tell our scraper to look for the specified attribute of our target element.

Note: you can use the same syntax to extract any attribute you need from an element.

Finally, we add the last bit of code to our scraper to print the URL alongside the rest of the data: print(job_url).
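Putting Steps 5 and 6 together, here’s a sketch of one way to pull all four fields out of a single card. The sample card is hand-written from the class names we found in Step 2 (its href is a placeholder), and Indeed’s markup has likely changed since; the helper names text_or_none and parse_job_card are our own. Returning None for a missing element avoids the AttributeError you’d get from calling .text on None.

```python
from bs4 import BeautifulSoup

# Hypothetical card built from the class names found in Step 2
SAMPLE_CARD = """
<div class="jobsearch-SerpJobCard unifiedRow row result">
  <h2 class="title">
    <a class="jobtitle turnstileLink " href="/rc/clk?jk=example">
      Junior Web Developer
    </a>
  </h2>
  <span class="company">
    Crunchapps
  </span>
  <span class="location accessible-contrast-color-location">New York, NY 10014 (West Village area)</span>
</div>
"""


def text_or_none(element):
    """Return the element's text without surrounding white space, or None if it wasn't found."""
    return element.text.strip() if element is not None else None


def parse_job_card(card):
    """Extract title, company, location, and href from one job card element."""
    link = card.find('a')
    return {
        'title': text_or_none(card.find('h2', class_='title')),
        'company': text_or_none(card.find('span', class_='company')),
        'location': text_or_none(
            card.find('span', class_='location accessible-contrast-color-location')
        ),
        # .get() returns None instead of raising KeyError when href is missing
        'url': link.get('href') if link is not None else None,
    }


soup = BeautifulSoup(SAMPLE_CARD, 'html.parser')
cards = soup.select('div.jobsearch-SerpJobCard.unifiedRow.row.result')
for card in cards:
    print(parse_job_card(card))
```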

Note: Indeed doesn’t put the entire URL in its href attribute, only the part that follows the domain. To make the link work, you’ll need to add https://www.indeed.com/ at the beginning of the URL, e.g. https://www.indeed.com/rc/clk?jk=a4a5ca3a969805d6&fccid=df30a08564cdd12e&vjs=3. As homework, we’ll let you figure out how to add it automatically.
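If you want to check your homework, one possible approach uses urljoin() from Python’s standard library, which resolves a relative href against a base URL and leaves already-absolute URLs alone. The absolute_job_url name is our own.

```python
from urllib.parse import urljoin

INDEED_BASE = 'https://www.indeed.com'


def absolute_job_url(href):
    """Turn an href taken from a job card into a full URL."""
    # urljoin() resolves a path such as '/rc/clk?...' against the base URL;
    # an href that is already absolute is returned unchanged
    return urljoin(INDEED_BASE, href)


print(absolute_job_url('/rc/clk?jk=a4a5ca3a969805d6&fccid=df30a08564cdd12e&vjs=3'))
# https://www.indeed.com/rc/clk?jk=a4a5ca3a969805d6&fccid=df30a08564cdd12e&vjs=3
```

Inside the scraper’s loop, that would become job_url = absolute_job_url(indeed_job.find('a')['href']).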

Step 7: Export Scraped Data Into a CSV File

Simply having the data logged in your terminal isn’t going to be that useful for processing. That’s why we next need to export the data into a format we can work with. Although there are several options (like a pandas DataFrame or a JSON file), in this tutorial we’re going to send our data to a CSV file.

To build our CSV, we’ll first need to add import csv at the top of our file. Then, after finding the divs we’re extracting the data from, we’ll open a new file and create a writer.

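```python
# newline='' is what the csv module docs recommend for files given to csv.writer;
# utf-8 keeps non-ASCII characters (accents, symbols) intact
file = open('indeed-jobs.csv', 'w', newline='', encoding='utf-8')
writer = csv.writer(file)
```

Note: this is the Python 3 way of doing it, and it won’t work in Python 2. In Python 3, you’ll get a TypeError if you use Python 2’s method instead ('wb' rather than 'w' in the open() function), because the csv writer writes strings, not bytes.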

To make it easier to read for anyone taking a look at the file, let’s make our writer write a header row for us.

```python
# write header rows
writer.writerow(['Title', 'Company', 'Location', 'Apply'])
```

We also added a comment so we’ll know why that’s there in the future.

Lastly, since we won’t be printing the results anymore, we need our variables (job_title, job_company, etc.) to extract the content right away and pass it to our writer, which adds the information to the file.

If you updated your code correctly, here’s what your Python file should look like:

```python
import csv

import requests
from bs4 import BeautifulSoup

url = 'https://www.indeed.com/jobs?q=web+developer&l=New+York'
page = requests.get(url)
soup = BeautifulSoup(page.content, 'html.parser')

# the element with id="resultsCol" wraps all the job results
results = soup.find(id='resultsCol')
indeed_jobs = results.select('div.jobsearch-SerpJobCard.unifiedRow.row.result')

# newline='' avoids extra blank rows on Windows; utf-8 handles non-ASCII text
file = open('indeed-jobs.csv', 'w', newline='', encoding='utf-8')
writer = csv.writer(file)

# write header rows
writer.writerow(['Title', 'Company', 'Location', 'Apply'])

for indeed_job in indeed_jobs:
    job_title = indeed_job.find('h2', class_='title').text.strip()
    job_company = indeed_job.find('span', class_='company').text.strip()
    job_location = indeed_job.find('span', class_='location accessible-contrast-color-location').text.strip()
    job_url = indeed_job.find('a')['href']

    # Python 3's csv writer takes str values; calling .encode('utf-8') here
    # would write cells like b'Junior Web Developer' into the file
    writer.writerow([job_title, job_company, job_location, job_url])

file.close()
```

After running the code, our Python and Beautiful Soup scraper will create a new CSV file, indeed-jobs.csv, in the folder you ran the script from.
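If you want to see what the writer produces without running the whole scraper, here’s a sketch that writes to an in-memory io.StringIO buffer instead of a file. The row reuses one of the results printed earlier, with a made-up placeholder href. It also shows why the final version doesn’t call .encode(): the csv module writes the str() form of bytes values.

```python
import csv
import io

# An in-memory text buffer stands in for indeed-jobs.csv
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(['Title', 'Company', 'Location', 'Apply'])
# The href below is a placeholder, not a real Indeed link
writer.writerow(['Junior Web Developer', 'Crunchapps',
                 'New York, NY 10014 (West Village area)', '/rc/clk?jk=example'])

# The location contains a comma, so csv.writer wraps that cell in double quotes
print(buffer.getvalue())

# Reading it back with csv.reader restores the original four cells
rows = list(csv.reader(io.StringIO(buffer.getvalue())))
print(rows[1])

# Encoding a value first makes the csv module write its str() form instead
bad = io.StringIO()
csv.writer(bad).writerow(['Crunchapps'.encode('utf-8')])
print(bad.getvalue())  # the cell holds the literal text b'Crunchapps'
```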

And there you have it: you just built your first Python data scraper using Requests and Beautiful Soup in under 10 minutes.

We’ll keep adding new tutorials in the future to help you master this framework. For now, you can read through Beautiful Soup’s documentation to learn more tricks and functionalities.

However, even when web scraping with Python, there are only so many pages you can scrape before getting blocked by websites. To avoid bans and bottlenecks, we recommend using our API endpoint to rotate your IP for every request.

Step 8: Python Web Scraping at Scale with ScraperAPI

All we need to do is to construct our target URL to send the request through ScraperAPI servers. It will download the HTML code and bring it back to us.

```python
# The Indeed URL is percent-encoded so its own ?, & and = characters stay inside
# the url parameter instead of being read as separate ScraperAPI parameters
url = 'http://api.scraperapi.com?api_key=51e43be283e4db2a5afb62xxxxxxxxxx&url=https%3A%2F%2Fwww.indeed.com%2Fjobs%3Fq%3Dweb%2Bdeveloper%26l%3DNew%2BYork'
```

Now, ScraperAPI will select the best proxy/header to ensure that your request is successful. In case it fails, it will retry with a different proxy for 60 seconds. If it can’t get a 200 response, it will bring back a 500 status code.

To get your API key and 1000 free monthly requests, you can sign up for a free ScraperAPI account. For the first month, you’ll get all premium features so you can test the full extent of its capabilities.

If you want to scrape a list of URLs or build a more complex scraper, here’s a complete guide to integrating Requests and Beautiful Soup with ScraperAPI.

Note: if the content of your target page depends on location, you can add the parameter &country_code=us. Visit our documentation for a list of country codes compatible with ScraperAPI.
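Rather than encoding the target URL by hand, you can build the request URL with urlencode() from Python’s standard library. This is a sketch: the build_scraperapi_url helper is our own, and the parameter names (api_key, url, country_code, render) are the ones used in this tutorial, so double-check them against ScraperAPI’s documentation.

```python
from urllib.parse import urlencode

SCRAPERAPI_ENDPOINT = 'http://api.scraperapi.com'


def build_scraperapi_url(api_key, target_url, **options):
    """Build a ScraperAPI request URL, percent-encoding the target URL."""
    params = {'api_key': api_key, 'url': target_url}
    # Extra keyword arguments (e.g. country_code='us' or render='true') become
    # their own ScraperAPI parameters rather than part of the target URL
    params.update(options)
    # urlencode() percent-encodes every value, so the target URL's own ?, & and =
    # can't leak out as separate parameters
    return f'{SCRAPERAPI_ENDPOINT}?{urlencode(params)}'


print(build_scraperapi_url(
    'YOUR_API_KEY',
    'https://www.indeed.com/jobs?q=web+developer&l=New+York',
    country_code='us',
))
```

The result can go straight into requests.get(). Alternatively, passing the same dictionary as requests.get('http://api.scraperapi.com', params=...) lets Requests do the encoding for you.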

Wrapping Up: Scrape Dynamic Pages with Python

Beautiful Soup is a powerful framework for scraping static pages. However, it only parses the HTML it’s given and can’t execute JavaScript, so you’ll find it impossible to scrape content that a page renders with JavaScript.

A simple way to execute JavaScript is to add the parameter render=true. This will tell ScraperAPI to render the page’s JavaScript before sending the response back to you:

```python
# render=true is a separate ScraperAPI parameter, so it goes after the encoded Indeed URL
url = 'http://api.scraperapi.com?api_key=51e43be283e4db2a5afb62xxxxxxxxxx&url=https%3A%2F%2Fwww.indeed.com%2Fjobs%3Fq%3Dweb%2Bdeveloper%26l%3DNew%2BYork&render=true'
```

We hope you enjoyed and learned a lot from this Python web scraping tutorial. For a better understanding, we recommend you follow this tutorial to scrape a different website.

Because every site is unique, you’ll find new challenges that’ll help you think more like a web scraper developer.

If you’re interested in scraping dynamic sites like Amazon, here’s a guide on how to scrape Amazon product data using the Scrapy framework.

Happy scraping!
