Web scraping (also known as screen scraping and web harvesting) is the technique and process of extracting data from websites through software or a script.

The final goal is to gather information across several sources and transform it into structured data for further analysis, use, or storage.

Although there are several ways to do web scraping, the best way to do it is by combining the power of web scraping software and programming languages to ensure scalability and flexibility.

Today, we’ll explore how to pick the right tool for your project, as well as our best tips for scraping virtually any website on the internet.

Finding The Best Web Scraping Tools

There are many variables to consider when selecting the best scraping tool for your web scraping project.

However, to keep it simple, we’ll divide these factors into two main categories:

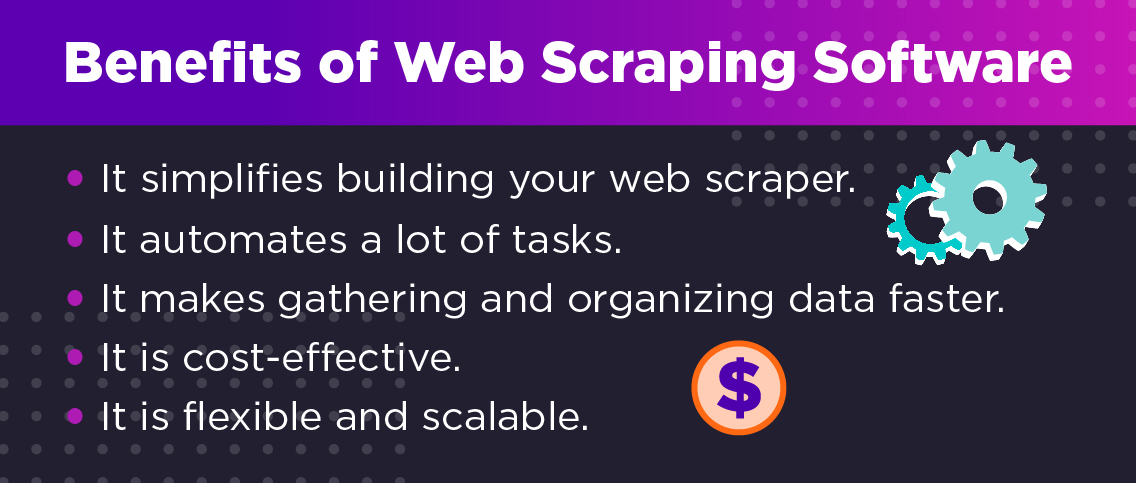

Benefits of Web Scraping Software

You can always create a web scraper from scratch. However, it will definitely be time- and resource-consuming.

Sending an HTTP request to a server and creating a parser to navigate a DOM are problems that web scraping software has already solved.
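To give a feel for what "from scratch" means, here is a minimal sketch that uses only Python's standard library to pull links out of a page. The sample markup and the names `LinkCollector` and `extract_links` are ours, made up for illustration. A real scraper would also have to download the page, handle errors, and cope with messy HTML.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the current tag.
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html):
    """Return all link targets found in an HTML string."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


# Hypothetical page content. A real scraper would download it first,
# for example with urllib.request.urlopen(url).read().decode().
SAMPLE_HTML = """
<html><body>
  <a href="/products/1">First product</a>
  <a href="/products/2">Second product</a>
  <a>No link here</a>
</body></html>
"""

print(extract_links(SAMPLE_HTML))
```

Even this tiny example needs a custom parser class just to collect links, which is the kind of plumbing a scraping library handles for you.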

When looking for the right tool for your project, the right web scraping software must have the following benefits:

- It simplifies building your web scraper. Building a web scraper can be as complex or simple as the tools you’re using. When picking a tool to work with, you need to make sure that it makes your work easier. Libraries like Scrapy have great parsing capabilities and handle CSV and JSON files for you.

- It automates a lot of tasks. One of the biggest challenges you’ll face while building your scraper is avoiding bans. Overcoming this can be really time-consuming. It’s essential to pick a tool that can automatically handle IP rotation and CAPTCHAs so you can focus on data and not just on overcoming bottlenecks.

- It makes gathering and organizing data faster. The tool you pick should guarantee excellent results at a fast pace. Of course, there are limitations like time-outs to consider, but a great tool will find the correct information and organize it in a helpful format.

- It is cost-effective. Web scraping is all about collecting vast volumes of data in the most cost-effective way possible. There are a lot of tools available in the market with unreal prices. A good scraping solution should be affordable without compromising data quality.

- It is flexible and scalable. No website is built the same way, so your scraper needs to be flexible without losing the ability to scale. If your web scraping software can’t grow alongside your project, then it’s time to move to another.

Coding Languages and Web Scraping Software

A huge reason to pick a scraping software is related to the tools you’re already using in your day-to-day work.

If your company (or you) has to commit to a whole new tech stack, then it probably won’t be the most efficient way to approach your web scraping project.

Instead of learning new languages and frameworks, you need to focus on what you already know and are proficient in and start from there.

Just as we said before, the best web scraping software for you is the one that allows you to accomplish your goal without hassle. That means that it should be easy to integrate into your existing stack.

If you’re using JavaScript, you’ll need a different solution than if you’re coding in C#.

For that reason, we built our list of scraping solutions to help you find the right one, no matter which programming language you’re using or how complex your project is.

7 Best Web Scraper Software to Use

It’s important to note that we are not listing these tools in a particular order. Each of these tools can work perfectly for your task.

Instead, we want to show you all the different solutions you can pick from and give you a little overview of why and when you should consider using them.

With that out of the way, let’s start with us!

1. ScraperAPI

Yes, we made it into the list, but that’s because we’re confident in what we do: making data accessible for everyone.

ScraperAPI is a robust solution that uses 3rd party proxies, machine learning, huge browser farms, and many years of statistical data to ensure you never get blocked by anti-scraping techniques.

Some of the best features of our tool are:

- Automates IP rotation and CAPTCHA handling

- Lets you access geo-sensitive data by adding a simple parameter when constructing the target URL

- Renders JavaScript, so you can access data behind a script without using a headless browser

- Can scale up to 100 million pages scraped per month

- Handles concurrent threads to make your web scraper even faster

- Retries requests with different IPs and headers until it finds a combination that returns a 200 status code

What’s even better, ScraperAPI integrates seamlessly with the rest of the tools in this list. Just add an extra line of code to send the request through our servers, and we’ll take care of the rest.
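As a rough sketch of what sending the request through our servers can look like, the snippet below wraps a target URL inside an API URL. The endpoint and the `api_key`, `url`, and `render` parameter names are assumptions here, so check them against the ScraperAPI documentation before relying on them. `build_scraperapi_url` is a hypothetical helper, and `YOUR_API_KEY` is a placeholder.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm it in the ScraperAPI documentation.
API_ENDPOINT = "http://api.scraperapi.com/"


def build_scraperapi_url(api_key, target_url, render=False):
    """Wrap target_url so the request is routed through the API."""
    params = {"api_key": api_key, "url": target_url}
    if render:
        # Assumed flag that asks the service to render JavaScript first.
        params["render"] = "true"
    # urlencode percent-encodes the target URL so its own query string
    # does not get mixed up with the API's parameters.
    return API_ENDPOINT + "?" + urlencode(params)


url = build_scraperapi_url(
    "YOUR_API_KEY",
    "https://example.com/products?page=2",
    render=True,
)
print(url)
```

Whatever HTTP client or scraping library you already use can then request `url` instead of the original address.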

2. Scrapy

If you’re familiar with Python, then Scrapy will be the scraping tool for you!

Scrapy is an open-source framework designed for simplifying web scraping in Python. It takes advantage of Python’s easy-to-read syntax to build highly efficient scrapers (called spiders).

What makes this framework so powerful is that it has built-in support for CSS and XPath selectors, allowing you to extract huge amounts of data with just a few lines of code.
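To show what an XPath expression actually selects, here is a small stand-in that uses Python's built-in `xml.etree.ElementTree` rather than Scrapy itself. ElementTree only supports a limited subset of XPath and requires well-formed markup, while Scrapy's selectors are more capable and tolerate real-world HTML. The sample markup and class names are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup. Real pages are rarely valid XML,
# which is one reason to prefer a framework's HTML-tolerant selectors.
SAMPLE = """
<html><body>
  <div class="quote"><span class="text">First quote</span><small class="author">Ann</small></div>
  <div class="quote"><span class="text">Second quote</span><small class="author">Bob</small></div>
  <div class="ad"><span class="text">Buy now</span></div>
</body></html>
"""

root = ET.fromstring(SAMPLE.strip())

# Select every <div class="quote"> anywhere in the document, then read
# the text and author elements nested inside each one.
quotes = [
    {
        "text": div.find("span[@class='text']").text,
        "author": div.find("small[@class='author']").text,
    }
    for div in root.findall(".//div[@class='quote']")
]

print(quotes)
```

In a Scrapy spider, the same idea is usually expressed through `response.xpath(...)` or `response.css(...)`, with the framework taking care of downloading and parsing the page.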

A feature we definitely love (and we know you’ll love too) is the Scrapy Shell. You can use this interactive shell to test CSS and XPath expressions instead of running your script to test every change.

After figuring out the right logic, you can then let your scraper run without complications.

To make Scrapy even more efficient, it can export the entire data set into structured data formats like CSV, JSON, or XML without the need to configure the entire thing by hand.

Note: Check our tutorial on how to build a scalable web scraper using Scrapy and ScraperAPI.

While Scrapy automates many moving parts and makes it easy to maintain several spiders (web scrapers) at the same time from one file, combining it with ScraperAPI to handle anti-scraping techniques will make your web scraper more efficient and effective.

3. Cheerio

JavaScript is one of the most popular and widely used programming languages out there. It is very versatile, you can build pretty much anything with it, and web scrapers are no exception.

Thanks to its back-end runtime environment – Node.js – we can now build software with JavaScript.

Cheerio is a Node.js library that uses jQuery-like syntax to parse HTML/XML documents using CSS selectors. When it comes to scraping static pages, you can create lightning-fast scripts.

Because Cheerio doesn’t “produce a visual rendering, apply CSS, load external resources, or execute JavaScript”, it can scrape data faster than other software.

These resources take time out of your script and are not necessary for scraping static pages – which are your main target if you’re planning to use Cheerio.

But what if you need to scrape a dynamic page that needs to execute JS before the content loads? For those scenarios, you can use ScraperAPI’s renderer to add the functionality to your Cheerio scraper.

Note: Check our tutorial on how to do web scraping in Node.js for a more detailed explanation about how to scrape websites using Cheerio and Puppeteer.

4. Puppeteer

Puppeteer is another library for Node.js, designed to control a headless Chromium browser and mimic the behavior of a normal browser from our script.

If you want to scrape dynamic pages using Node.js, this is the right solution for you – in some cases.

Cheerio and ScraperAPI are more than enough for most projects. Nevertheless, ScraperAPI can only execute JS scripts; it can’t interact with the website itself.

When the target data is behind events (like clicking, scrolling, or filling a form), you’ll need to use a solution like Puppeteer to simulate a user’s behavior and actually access the data.

It’s essential to keep in mind that you should write your script using async and await when working with this library. Checking the resource we shared above will give you a better idea of how to do it.

Suppose you have a complex project on your hands. In this case, combining Puppeteer’s headless browser manipulation, Cheerio’s parsing capabilities, and ScraperAPI’s functions is a great way to create a highly sophisticated web scraper.

5. ScrapySharp and Puppeteer Sharp

C# is definitely a flexible and complete programming language. With such a wide adoption at the enterprise level, it was just a matter of time to see scraping tools being developed for it.

ScrapySharp is a C# library designed for web scraping that makes it easy to select elements within the HTML document using CSS and XPath selectors thanks to its HtmlAgilityPack extension.

Although this library can handle things like cookies and headers, it cannot manipulate a headless browser.

Still, for those projects needing an initial JavaScript execution, ScraperAPI can give the extra functionality to the library, making it an excellent solution for 90% of all projects.

But if you need to interact with the website to trigger events, a better option is Puppeteer Sharp, a .NET port of the Node.js Puppeteer library.

Note: Here’s a full tutorial on building a web scraper using C# and ScrapySharp.

6. Rvest

For those using R as their primary language, the Rvest package will be a familiar and straightforward tool to learn.

The Rvest library uses all the features of the R language to make building a web scraper a breeze by allowing us to send HTTP requests and parse the returned DOM using CSS and XPath expressions.

What’s more, R has built-in data manipulation functions and the ability to create beautiful data visualizations, which the other web scraping software on this list can’t match. If you’re into data science, this could be an exciting language and tool to explore further.

Another feature we love is using Magrittr alongside our main scraping library to use the %>% operator “with which you may pipe a value forward into an expression or function call”.

Although it doesn’t seem like much, it shortens coding times and makes our code more elegant and easier to read.

Note: To better understand what we mean, check our Rvest web scraping tutorial for a more detailed explanation of the library.

7. Goutte

Of course, we cannot have a list of scraping software without talking about PHP.

It might not come first to mind when we talk about web scraping, but thanks to its active community, we have Goutte, a web scraping library specially developed for PHP to help us crawl and scrape websites.

Unlike raw PHP, Goutte handles a lot of the scraping functions for you, from supporting CSS and XPath expressions to interacting with the webpage to submit forms.

Something else you’ll notice when using Goutte is that it makes PHP code more human-friendly to read and way shorter, accomplishing in a few lines of code what would take raw PHP tens of lines.

Note: You can learn more about web scraping with PHP Goutte in our tutorial for beginners. We built a scraper using Goutte and CSV Helper to export the scraped data into a CSV file.

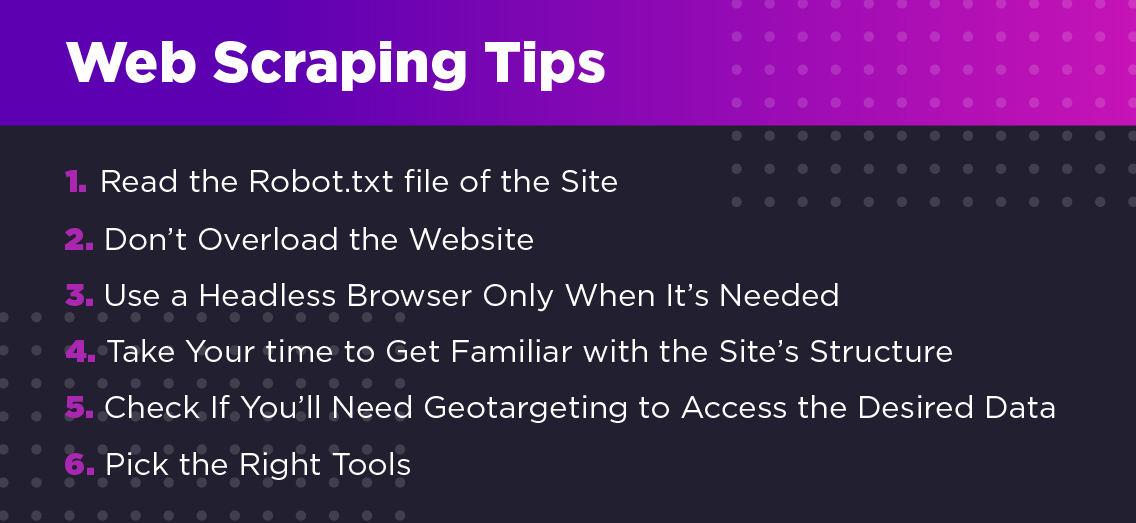

Web Scraping Tips

The idea behind web scraping is to collect data faster than by hand, but it won’t work if your IP gets banned and your robot cannot access the site anymore.

To avoid these situations, here are a few tips you can always follow – plus, a few considerations to have when running a scraper:

1. Read the Robots.txt File of the Site

Although not all websites have directives for scrapers in their robots.txt file, it’s respectful to at least take a look.

A few website owners will explicitly state which pages they don’t want you to scrape or how frequently you may send requests.

If those are established, then it’s best to keep your script compliant.
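One way to check those directives from code is Python's built-in `urllib.robotparser`. The sketch below parses a made-up robots.txt and asks whether a hypothetical `my-scraper` user agent may fetch two example URLs, and what crawl delay applies to it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "my-scraper"  # hypothetical name for our scraper

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch() reports whether the rules allow this agent to request a URL.
allowed = parser.can_fetch(USER_AGENT, "https://example.com/products")
blocked = parser.can_fetch(USER_AGENT, "https://example.com/private/report")

# crawl_delay() returns the requested pause in seconds, or None if unset.
delay = parser.crawl_delay(USER_AGENT)

print(allowed, blocked, delay)
```

Against a live site, you would instead call `parser.set_url(...)` with the address of the site's robots.txt file, followed by `parser.read()`, before running the same checks.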

2. Don’t Overload the Website

Remember that your web crawler is sending traffic to the website, thus taking away bandwidth from real users.

Although it might not affect you directly, if your web scraper is crawling and scraping the target website too aggressively, it will negatively affect the user experience.

Websites protect themselves from this type of workload by monitoring the frequency of your requests and blacklisting your IP for a period of time to keep the servers running.

However, if you’re using ScraperAPI – which will use a different proxy for each request – the website won’t be able to slow your scraper down.

It’s up to you to add delays, so you don’t hurt the website you’re scraping.
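A minimal sketch of adding such delays is shown below. `fetch_politely` and `fake_fetch` are hypothetical names: the first pauses between consecutive requests, and the second stands in for a real HTTP call so the example runs without touching the network.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Wait before every request except the first one.
            sleep(delay_seconds)
        results.append(fetch(url))
    return results


def fake_fetch(url):
    # Stand-in for a real HTTP request, used only for this demonstration.
    return f"<html>content of {url}</html>"


pages = fetch_politely(
    ["https://example.com/page/1", "https://example.com/page/2"],
    fake_fetch,
    delay_seconds=0.1,
)
print(len(pages))
```

The right delay depends on the site. If its robots.txt file sets a crawl delay, that value is a sensible starting point.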

3. Use a Headless Browser Only When It’s Needed

Every extra piece of functionality slows down your scraper, and a headless browser consumes more resources than most because it has to execute the JS scripts and render the website before you can interact with it.

Unless you really need to interact with the website, there’s no real value in using headless browsers.

In most cases, all you need is a good HTTP client and parsing capabilities to create an effective script.

One of the reasons to use a headless browser is to mimic human behavior, but with a scraping API, it’s not really necessary.

4. Take Your Time to Get Familiar with the Site’s Structure

Before you start writing your code, make sure to understand how the website is serving every element and find the most efficient path to extract them.

Once you get the logic you need to follow, creating your web scraper will be easier than you initially thought.

Every website is a different puzzle, and web scraping is all about solving it, so by taking your time, you’ll build a more elegant solution than if you rush.

5. Check If You’ll Need Geotargeting to Access the Desired Data

Some websites like Amazon or Google will show different information based on the geo-location the request is coming from.

If you run your scraper without defining any geotargeting, your script will use your current location to send the request, and the data will be affected by it.

So if you’re in the US but need to scrape French-specific listings (for localized searches, for example), you’ll need to change your IP address to a French IP.

With ScraperAPI, we can do that by using the country_code parameter.
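As an illustration, the request URL for the French example could be built like this. The `country_code` parameter comes from the text above, while the endpoint, the other parameter names, and the `"fr"` value are assumptions to verify in the documentation. The search URL is made up and `YOUR_API_KEY` is a placeholder.

```python
from urllib.parse import urlencode

params = {
    "api_key": "YOUR_API_KEY",  # placeholder, not a real key
    "url": "https://www.example.com/search?q=chaussures",  # made-up target
    "country_code": "fr",  # assumed two-letter code for France
}

# Assumed endpoint; urlencode percent-encodes the nested target URL.
request_url = "http://api.scraperapi.com/?" + urlencode(params)
print(request_url)
```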

You can learn more about ScraperAPI’s functionalities in our comprehensive documentation.

Note: For more tips and best practices, check our best five tips for web scraping and our web scraping best practices cheat sheet.

6. Pick the Right Tools

You don’t need to learn too many things as long as you’re using what you already know. Pick one of the pieces of software we listed above based on the programming language you’re most proficient in.

You don’t even need to be a master. These scraping tools are built in such a way that you can call them low-code solutions. With just the basics of the language you’ll be able to scrape almost any site.

If you’re a total beginner, we do recommend using Scrapy to handle the scraping logic and ScraperAPI to ensure that you won’t get blocked by any means.

These two tools work together seamlessly and are easy to learn and implement.

Code-based vs Out-of-Box Scraping Software

You might be asking yourself why you would prefer a code-based solution when you can pick automated software, and it’s a pretty smart question to ask.

If there’s one takeaway from everything we’ve talked about so far, it’s that no single solution will meet all your scraping needs, yet that’s exactly what you’re committing to when choosing an out-of-box solution.

The reality is that once you start scaling your project, you encounter one of these scenarios:

- The automated scraping tool does not scale with your project. As you add more pages to the pipeline and choose new pieces of data, the tool won’t be able to keep up and will slow down the whole operation.

- It doesn’t have the flexibility you’re looking for. These are rigid solutions that will take longer to adapt to your specific needs, if they can at all.

- They will quickly outgrow your budget. These are usually expensive solutions that can be ‘affordable’ in the beginning, but once the really big workloads arrive, they will charge 5x to 10x more than using software like Scrapy + ScraperAPI.

There’s no arguing that automated web scraping software can get small projects off the ground, but if you’re aiming for a long-term solution that’ll adapt to your needs and to every website, then there’s no better path than coding.

Of course, libraries like Scrapy, Cheerio or ScrapySharp will make it super easy even for beginners to build web scrapers without too much hassle. They are totally customizable and can integrate with other libraries and solutions to supercharge your robots.

If you still don’t know where to start, you can always find us on Twitter. We’ll be glad to help on your journey.

Happy scraping!