Our new Async Scraper endpoint allows you to submit web scraping jobs at scale (without setting timeouts or retries) and receive the scraped data directly to your webhook endpoint without polling. This makes your web scrapers more resilient, no matter how complex the sites’ anti-scraping systems are.

Asynchronous vs. Synchronous Scraping API

The difference between synchronous and asynchronous APIs (application programming interfaces) lies in the time frame from when the request is sent to when the data is returned. In that sense, a synchronous scraping API is expected to return the data requested soon after the request is sent. When you write scripts synchronously, your scraper will wait until a value is returned. This is great for speed but can harm the request’s success rate for complex or hard-to-scrape websites.

As we move from a couple of requests to thousands, we can start to create bottlenecks because of timeout constraints or even reduce the success rate of our scraping efforts. That’s where an asynchronous scraping API can take the torch!

In simple terms, instead of waiting for one request to return the data, the async scraper gives the scraper service more time to use advanced bypass methodologies to achieve higher success rates. All you do is submit all the necessary requests in bulk to the Async Scraper endpoint, and it’ll work on all of them until it achieves the highest reachable success rate.

After you submit your requests, it’ll create a unique Status endpoint from which you can access the scraped data later. No need for timeouts, testing HTTP headers, or doing any complex asynchronous programming manually.

When to Use Our Async Scraper API

- Your priority is a high success rate and not a fast response time.

- You’re seeing a decrease in the success rate of your requests.

- You want to access difficult-to-scrape websites.

- You need to send a massive number of requests.

Getting Started with ScraperAPI’s Async Scraper

Let’s keep it simple and submit a scraping job to quotes.toscrape.com using the Python Requests library. To initiate the job, you’ll need to send a .post() request with the Async Scraper endpoint as the url and, within a json parameter, your API key and the URL you want to scrape.

Note: If you don’t have an account, create a free ScraperAPI account to generate an API key.

import requests

initial_request = requests.post(

url = 'https://async.scraperapi.com/jobs',

json={ 'apiKey': YOUR_API_KEY, 'url': 'https://quotes.toscrape.com/'

})

print(initial_request.text

It returns the following data:

{"id":"222cdaa5-efd1-4962-ad6c-c51e2eea59b7",

"status":"running",

"statusUrl":"https://async.scraperapi.com/jobs/222cdaa5-efd1-4962-ad6c-c51e2eea59b7",

"url":"https://quotes.toscrape.com/"}

Now, we can send a new request to the StatusUrl endpoint to retrieve the status or results of the job.

💡 Pro Tip

Using the StatusUrl endpoint is a great way to test the Async API. However, for a more robust solution, we’ve implemented Webhook callbacks (soon to support direct database callbacks, AWS S3…etc).

Check our detailed documentation to learn more about implementing Webhooks using our Async Scraper service.

import requests

r = requests.get("https://async.scraperapi.com/jobs/222cdaa5-efd1-4962-ad6c-c51e2eea59b7")

print(r.text)

If the job isn’t finished, it’ll show something like this:

{

"id":"0962a8e0-5f1a-4e14-bf8c-5efcc18f0953",

"status":"running",

"statusUrl":"https://async.scraperapi.com/jobs/0962a8e0-5f1a-4e14-bf8c-5efcc18f0953",

"url":"https://example.com"

}

However, in our case, it was already completed by the time we sent the request.

{

"id":"fa59791f-d2bb-46c4-88c6-b106a90b5335",

"status":"finished",

"statusUrl":"https://async.scraperapi.com/jobs/fa59791f-d2bb-46c4-88c6-b106a90b5335",

"url":"https://quotes.toscrape.com/",

"response":{

"headers":{"date":"Mon, 15 Aug 2022 11:39:13 GMT",

"content-type":"text/html; charset=utf-8",

"content-length":"11053",

"connection":"close",

"x-powered-by":"Express",

"access-control-allow-origin":"undefined",

"access-control-allow-headers":"Origin, X-Requested-With, Content-Type, Accept",

"access-control-allow-methods":"HEAD,GET,POST,DELETE,OPTIONS,PUT",

"access-control-allow-credentials":"true",

"x-robots-tag":"none",

"sa-final-url":"https://quotes.toscrape.com/",

"sa-statuscode":"200",

"etag":"W/"2b2d-cb4U8IcT4IIFDwHVUaiq4e81REA"",

"vary":"Accept-Encoding"},

"body":"<!DOCTYPE html>n<html lang="en">n<head>nt<meta charset="UTF-8">nt<title>Quotes to Scrape</title>n <link rel="stylesheet"...

}

}

The status field is set to finished, and all our content is inside the body field, ready for grabbing.

Parsing the Data from the Response

To parse the returned data, you’ll need to parse the JSON response, extract the value from the body field, and feed the string to an HTML parser.

Continuing with the example above, let’s use Beautiful Soup – “a Python library for pulling data out of HTML and XML files” – to parse and extract all quotes from the page:

import requests

from bs4 import BeautifulSoup

r = requests.get("https://async.scraperapi.com/jobs/222cdaa5-efd1-4962-ad6c-c51e2eea59b7")

res = r.json()

soup = BeautifulSoup(res["response"]["body"], "html.parser")

all_quotes = soup.find_all("div", class_="quote")

for quote in all_quotes:

print(quote.find("span", class_= "text").text)

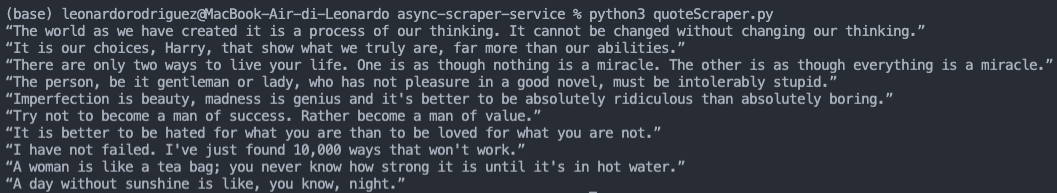

The result? All quotes on the page got printed to the terminal.

We can do this for all elements inside the response and export them to the format we want.

However, what are 10 quotes good for? The Async Scraper can handle heavy loads of requests to make our project as scalable as possible!

Submitting Scraping Jobs in Bulk

So far, we’ve been using the https://async.scraperapi.com/jobs endpoint, which only accepts a string within the url parameter inside the json field, and that’s not useful for the task at hand.

For cases like these, when you have a list of URLs you want to scrape, we’ve created an additional https://async.scraperapi.com/batchjobs endpoint. It uses the same syntax, except it replaces the url field inside the json for a urls parameter that accepts arrays.

As an example we’ll scrape all quotes from Quotes to Scrape:

import requests

from pprint import pprint

urls = []

for x in range(1, 11, 1):

url = f"https://quotes.toscrape.com/page/{x}/"

urls.append(url)

scraping_jobs = requests.post(

url = 'https://async.scraperapi.com/batchjobs',

json={ 'apiKey': "Your_API_KEY", 'urls': urls })

pprint(scraping_jobs.json())

Note: In this case we created a list with a loop, but you can send the entire list inside the urls property as a simple array.

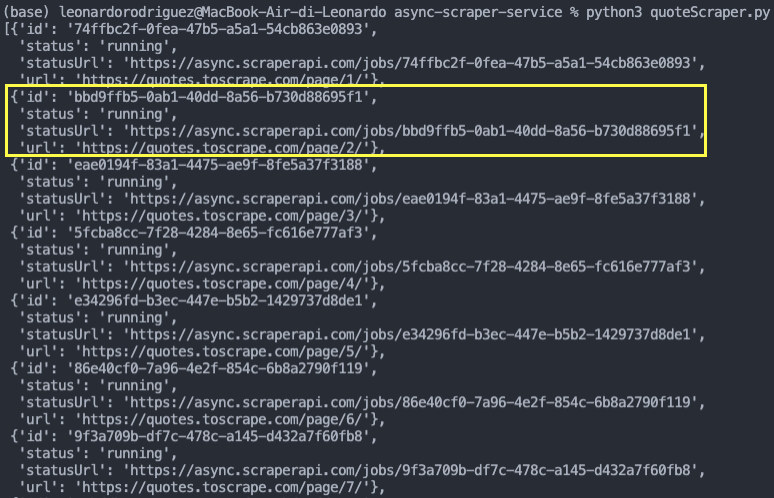

The result? The entire list of URLs is submitted. Webhooks make processing much easier, especially when bulk processing is needed. It returns all the information as we’ve seen previously:

Supercharge Your Scraper Using ScraperAPI’s Advanced Functionalities

Like the traditional ScraperAPI endpoint, you can pass several parameters to make your scraper even more powerful and flexible. For example, suppose you want to scrape data from geo-sensitive pages (pages that show different data depending on your IP address geolocation, like Google or eCommerce sites). In that case, you can add a parameter with the country code to tell ScraperAPI to send your requests from a specific location like this:

import requests

initial_request = requests.post(

url = 'https://async.scraperapi.com/jobs',

json={

'apiKey': YOUR_API_KEY,

'apiParams': {

"country_code": "it"

},

'url': 'https://quotes.toscrape.com/' })

print(initial_request.text)

Note: You can find all of the country codes under the Geographic Location section in our documentation, or use this dynamic list to retrieve all the supported geolocations.

But what if the pages you’re targeting inject content through JavaScript? Simple! Just set render to true in your apiParams field.

import requests

initial_request = requests.post(

url = 'https://async.scraperapi.com/jobs',

json={

'apiKey': YOUR_API_KEY,

'apiParams': {

'country_code': 'it',

'render': true,

},

'url': 'https://quotes.toscrape.com/' })

Now, ScraperAPI will render the page, including the JavaScript, before returning it to you, allowing your scraper to access data without the need for headless browsers or complex code.

Here’s the full list of parameters you can use with the Async Scraper:

{

"apiKey": "xxxxxx",

"apiParams": {

"autoparse": false, // boolean

"binary_target": false, // boolean

"country_code": "us", // string, see: https://api.scraperapi.com/geo

"device_type": "desktop", // desktop | mobile

"follow_redirect": false, // boolean

"premium": true, // boolean

"render": false, // boolean

"retry_404": false // boolean

},

"url": "https://example.com"

}

Using ScraperAPI’s functionalities alongside the Async Scraper endpoint, you’ll be able to scrape accurate data from any website in any location of the world without the risk of getting banned, blocked, or missing information.

If you’re struggling to access crucial information, create a new free ScraperAPI account to test our Async Scraper service and start collecting data at scale more reliably; or subscribe to one of our pricing plans to take full advantage of ScraperAPI in a few minutes.