TL;DR: Using Requests and BeautifulSoup for Web Scraping

To get started, install the dependencies from your terminal using the following command:

pip install requests beautifulsoup4 lxml

Once the installation is done, import requests and BeautifulSoup into your file:

import requests

from bs4 import BeautifulSoup

Send a get() request using Requests to download the HTML content of your target page and store it in a variable. For this example, we’re sending a get() request to BooksToScrape and storing its HTML in a response variable:

response = requests.get("https://books.toscrape.com/catalogue/page-1.html")

To extract specific data points from the HTML, we need to parse the response using BS4:

soup = BeautifulSoup(response.content, "lxml")

Lastly, we can pick elements using CSS selectors or HTML tags like so:

title = soup.find("h1")

print(title)

There's an incredible amount of data on the Internet, and it is a rich resource for any field of research or personal interest. However, not all of this information is easily accessible through traditional means.

Some websites do not provide an API to fetch their content; others may use complex technologies that make extracting data using traditional methods difficult.

To help you get started, in this tutorial, we’ll show you how to use the Requests and BeautifulSoup Python packages to scrape data from any website, covering the basics of web scraping, including how to send HTTP requests, parse HTML, and extract specific information.

To make things more practical, we’ll build a scraper that collects a list of tech articles from TechCrunch. By the end, you’ll have learned how to:

- Download web pages

- Parse HTML content

- Extract data using BeautifulSoup’s methods and CSS selectors

Prerequisites

To follow this tutorial, you need to install Python 3.7 or greater on your computer. You can download it from the official website if you don't have it installed.

You will also need the following requirements:

- A virtual environment (recommended)

- Requests

- BeautifulSoup

- Lxml

Installing a Virtual Environment

Before installing the required libraries, creating a virtual environment to isolate the project's dependencies is recommended.

Creating a Virtual Environment on Windows

Open a command prompt (administrator privileges are not needed for a virtual environment inside your own project folder) and run the following command to create a new virtual environment named venv:

python -m venv venv

Activate the virtual environment with the following command:

venv\Scripts\activate

Creating a Virtual Environment on macOS/Linux

Open a terminal and run the following command to create a new virtual environment named venv:

python3 -m venv venv

Activate the virtual environment:

source venv/bin/activate

Installing Requests, BeautifulSoup, and Lxml

Once you have created and activated a virtual environment, you can install the required libraries by running the following command on your terminal:

pip install requests beautifulsoup4 lxml

- Requests is an HTTP library for Python that makes sending HTTP requests and handling responses easy.

- BeautifulSoup is a library for parsing HTML and XML documents.

- Lxml is a powerful and fast XML and HTML parser written in C. BeautifulSoup can use it as its parsing backend, and lxml itself supports XPath queries, which BeautifulSoup does not.
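To confirm that the three packages landed in the active virtual environment, a quick check like the one below can help. It is a minimal sketch that assumes Python 3.8 or newer (where importlib.metadata is part of the standard library); the helper name installed_versions is our own and not part of any of these libraries.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names exactly as they appear in the pip command above.
PACKAGES = ["requests", "beautifulsoup4", "lxml"]


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions(PACKAGES).items():
    print(f"{name}: {ver or 'not installed'}")
```

If any line reports "not installed", the virtual environment is probably not activated in the terminal where pip was run.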

Download the Web Page with Requests

The first step in scraping web data is downloading the web page you want to scrape. You can do this using the get() method of the Requests library.

import requests

url = 'https://techcrunch.com/category/startups/'

response = requests.get(url)

print(response.text)

Above, we use Requests to get the HTML response from TechCrunch. The get() method returns a response object containing the HTML of the web page. We’ll use BeautifulSoup to extract the data we need from this HTML response.
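A bare get() call waits indefinitely if the server never answers and happily returns error pages. The sketch below shows one way to guard against both, using the timeout argument and raise_for_status() from Requests. The function name fetch_html and the 10-second default are our own assumptions, not something the original scraper defines.

```python
import requests


def fetch_html(url, timeout=10):
    """Return the page HTML as text, or None if the request fails."""
    try:
        response = requests.get(url, timeout=timeout)
        # Turn 4xx/5xx status codes into exceptions instead of parsing an error page.
        response.raise_for_status()
    except requests.exceptions.RequestException as error:
        # Covers connection errors, timeouts, invalid URLs, and bad status codes.
        print(f"Request failed: {error}")
        return None
    return response.text
```

Calling fetch_html('https://techcrunch.com/category/startups/') would then give you either the HTML string or None, which the rest of the scraper can check before parsing.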

Parse the HTML Response with BeautifulSoup

Once you have downloaded the web page, you must parse the HTML. You can do this using the BeautifulSoup library:

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.text, 'lxml')

The BeautifulSoup constructor takes two arguments:

- The HTML to parse

- The parser to use

In this case, we pass the HTML response to BeautifulSoup, tell it to use the lxml parser, and store the resulting instance in a variable called soup.
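To see the two constructor arguments in isolation, here is a small sketch that parses a hard-coded string instead of a live response. The HTML is made up for illustration, and we pass Python's built-in "html.parser" as the second argument so the snippet runs even without lxml; for simple markup like this, "lxml" should behave the same way.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for response.text.
html = "<html><head><title>Startups</title></head><body><h1>Latest news</h1></body></html>"

# First argument: the markup. Second argument: the parser to use.
soup = BeautifulSoup(html, "html.parser")

print(type(soup).__name__)  # BeautifulSoup
print(soup.title.string)    # Startups
print(soup.h1.get_text())   # Latest news
```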

Navigate the Response Using BeautifulSoup

When an HTML page is initialized within a BeautifulSoup instance, BS4 transforms the HTML document into a complex tree of Python objects and then provides several ways in which we can query this DOM tree:

- Python object attributes: Each BeautifulSoup object has a number of attributes that can be used to access its children, parents, and siblings. For example, the children attribute returns an iterator over the object's direct children, and the parent attribute returns the object's parent object.

- BeautifulSoup methods (e.g., .find() and .find_all()): These can be used to search the DOM tree for elements that match given criteria. The .find() method returns the first matching element, while the .find_all() method returns a list of all matching elements.

- CSS selectors (e.g., .select() and .select_one()): CSS selectors let you select elements based on their class, ID, and other attributes.
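The three approaches above can be compared side by side on a tiny document. This is only a sketch: the markup, class names, and ids are invented for the example, and it uses the built-in "html.parser" so it runs without lxml.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Hypothetical markup, not taken from any real site.
html = """
<div id="feed">
  <article class="post"><h2 class="title"><a href="/a">First</a></h2></article>
  <article class="post"><h2 class="title"><a href="/b">Second</a></h2></article>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# 1. Object attributes: walk the tree from a known element.
first_link = soup.a
parent_name = first_link.parent.name
# .children also yields whitespace strings, so keep only real tags.
child_tags = [child.name for child in soup.div.children if isinstance(child, Tag)]

# 2. Search methods: first match vs. all matches.
first_h2 = soup.find("h2")
all_h2 = soup.find_all("h2")

# 3. CSS selectors: same idea, expressed as a selector string.
first_title = soup.select_one("article.post h2.title")
all_links = soup.select("#feed a")

print(parent_name)                     # h2
print(child_tags)                      # ['article', 'article']
print(first_h2.get_text())             # First
print(len(all_h2))                     # 2
print(first_title.get_text())          # First
print([a["href"] for a in all_links])  # ['/a', '/b']
```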

But which tags should you be searching for?

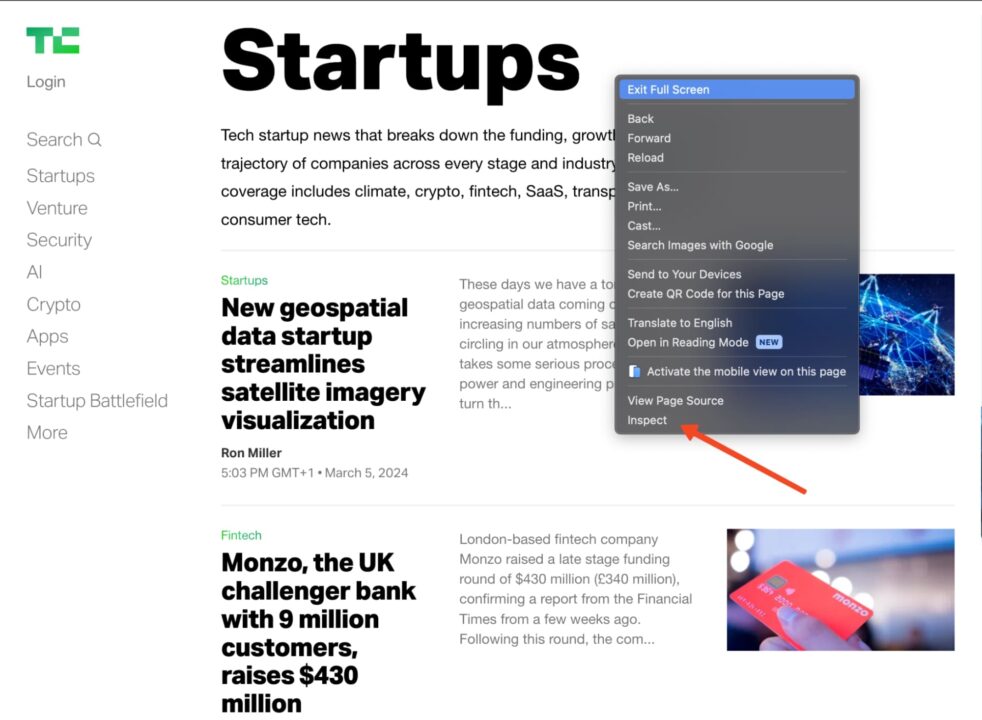

You can find that out by using the inspect option in your browser. Go to the TechCrunch website, find the element you want to scrape, then right-click and choose "Inspect".

This will open the HTML document at the element you have selected.

Now, you need to find a combination of HTML element tags and classes that uniquely identify the elements you need.

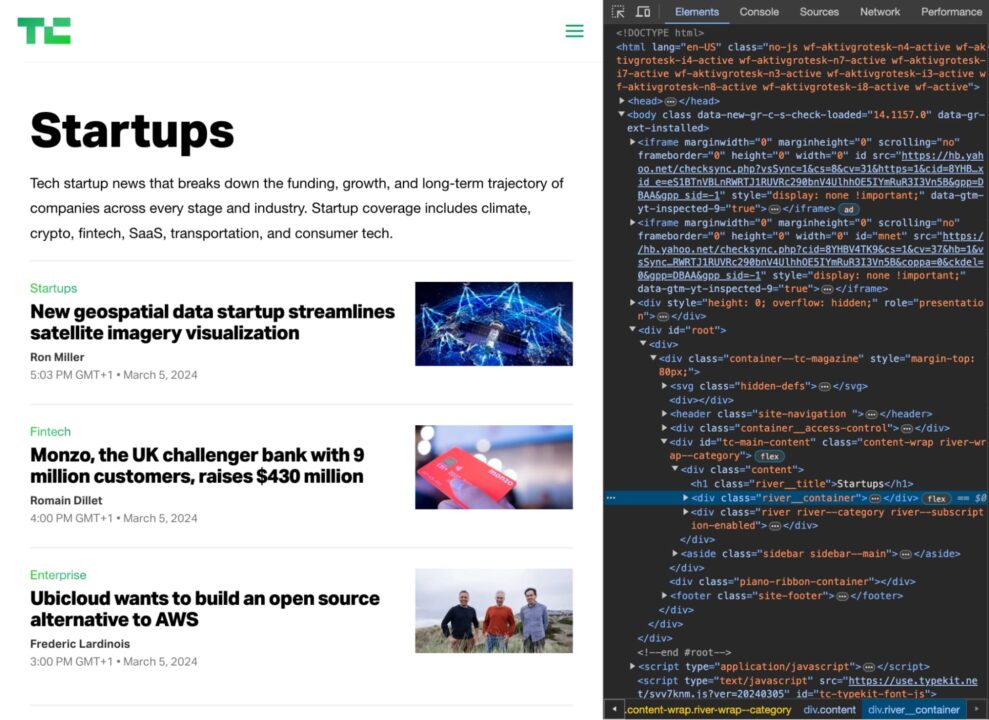

For example, if you want to scrape the titles of the articles on the TechCrunch homepage, you would inspect the HTML and find that the titles are all contained within h2 tags with the class post-block__title.

So, you could use the following CSS selector to pick the titles:

soup.select('h2.post-block__title')

This would return a list of all the h2 tags with the class post-block__title on the page containing the articles' titles.
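Because the live page can change at any time, it helps to try a selector against a small, fixed snippet first. The markup below is a hypothetical stand-in modelled on the structure described above (h2 tags with the class post-block__title); the real page may look different.

```python
from bs4 import BeautifulSoup

# Hypothetical markup modelled on the structure described above.
html = """
<h2 class="post-block__title">
  <a class="post-block__title__link" href="https://example.com/a">First story</a>
</h2>
<h2 class="post-block__title">
  <a class="post-block__title__link" href="https://example.com/b">Second story</a>
</h2>
<h2 class="other">Not an article</h2>
"""
soup = BeautifulSoup(html, "html.parser")

# select() returns a list of Tag objects; get_text(strip=True) trims the whitespace.
titles = [h2.get_text(strip=True) for h2 in soup.select("h2.post-block__title")]
print(titles)  # ['First story', 'Second story']
```

The third h2 is skipped because the selector requires both the tag name and the class to match.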

Get an Element by HTML Tag

Syntax: element_name

You can use the find() and find_all() methods to find elements by their HTML tag.

For example, the following code finds all the header tags on the page:

header_tags = soup.find_all('header')

for header_tag in header_tags:

    print(header_tag.get_text(strip=True))

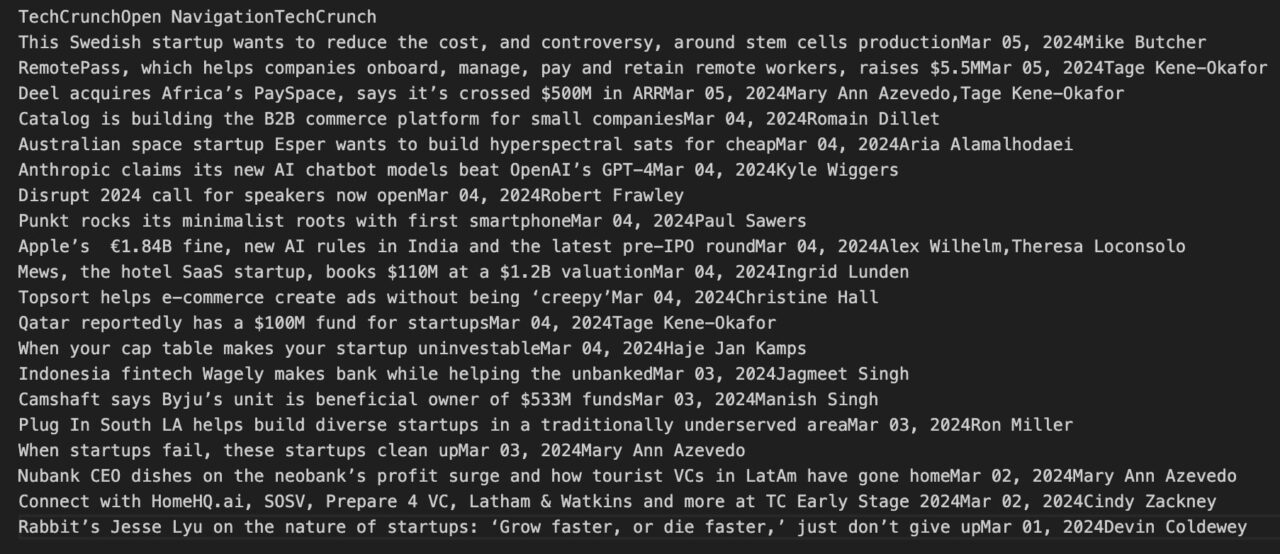

The output, as seen in the console, should be similar to this:

Get an Element by CSS Class

Syntax: .class_name

Class selectors match elements based on the contents of their class attribute.

title = soup.select('.post-block__title__link')[0].text

print(title)

The dot (.) before the class name tells BS4 that we’re selecting by CSS class.
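Indexing the result of select() with [0] raises an IndexError when nothing matches. A slightly more defensive variant, sketched below on made-up markup, uses select_one(), which returns None instead. The extra class name featured is purely illustrative.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; an element can carry several classes at once.
html = '<a class="post-block__title__link featured" href="/a">First story</a>'
soup = BeautifulSoup(html, "html.parser")

# select_one() returns the first match or None, so we can check before reading text.
link = soup.select_one(".post-block__title__link")
title = link.get_text(strip=True) if link else None
print(title)  # First story

# No match: None instead of an exception.
missing = soup.select_one(".no-such-class")
print(missing)  # None

# find() takes class_ (with an underscore) because class is a reserved word in Python.
same_link = soup.find("a", class_="featured")
print(same_link["href"])  # /a
```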

Get an Element by ID

Syntax: #id_value

ID selectors match an element based on the value of the element's id attribute. In order for the element to be selected, its id attribute must match exactly the value given in the selector.

element_by_id = soup.select('#element_id') # returns a list of the elements whose id is "element_id"

print(element_by_id)

The hash symbol (#) before the ID’s name tells BS4 that we’re asking for an id.
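Since an id should be unique on a page, you usually want a single element rather than a list. The sketch below contrasts select(), select_one(), and find(id=...) on a made-up snippet; the id value is borrowed from the XPath used later in this tutorial, but the markup itself is hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for illustration only.
html = '<main id="tc-main-content"><p>Hello</p></main><div id="footer"></div>'
soup = BeautifulSoup(html, "html.parser")

as_list = soup.select("#tc-main-content")     # list, even for a single match
single = soup.select_one("#tc-main-content")  # the Tag itself, or None
by_keyword = soup.find(id="tc-main-content")  # same element via find()

print(len(as_list))           # 1
print(single.name)            # main
print(by_keyword.get_text())  # Hello
```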

Get an Element by Attribute Selectors

Syntax: [attribute=attribute_value] or [attribute]

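Attribute selectors match elements based on the presence or value of a given attribute. Unlike class selectors, which start with a dot (.), and ID selectors, which start with a hash symbol (#), attribute selectors wrap the attribute in square brackets []. Once an element is selected, you can also read any of its attributes with dictionary-style access, as the example below does with href.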

# Get the URL of the article

url = soup.select('.post-block__title__link')[0]['href']

print(url)

Output:

https://techcrunch.com/2024/03/05/new-geospatial-data-startup-streamlines-satellite-imagery-visualization/

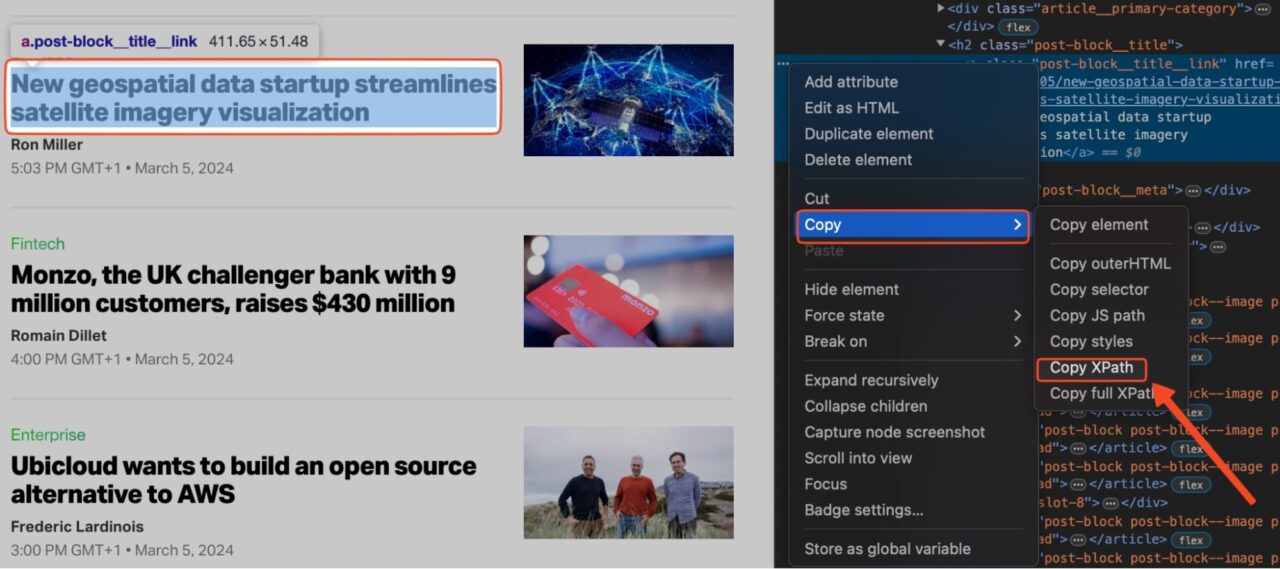
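To make the bracket syntax itself concrete, here is a short sketch of a few attribute selectors run against invented markup. The links and the data-type attribute are assumptions for the example; the selector forms ([attr], [attr="value"], [attr^="prefix"], [attr$="suffix"]) are standard CSS that select() accepts.

```python
from bs4 import BeautifulSoup

# Hypothetical links for illustration only.
html = """
<a href="https://example.com/a" data-type="post">A</a>
<a href="/relative" data-type="post">B</a>
<a name="anchor">C</a>
<a href="https://example.com/file.pdf">D</a>
"""
soup = BeautifulSoup(html, "html.parser")

with_href = soup.select("a[href]")                  # attribute is present
posts = soup.select('a[data-type="post"]')          # attribute equals a value
absolute = soup.select('a[href^="https://"]')       # value starts with a prefix
pdfs = soup.select('a[href$=".pdf"]')               # value ends with a suffix

print([a.get_text() for a in with_href])  # ['A', 'B', 'D']
print([a.get_text() for a in posts])      # ['A', 'B']
print([a.get_text() for a in absolute])   # ['A', 'D']
print([a["href"] for a in pdfs])          # ['https://example.com/file.pdf']
```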

Get an Element Using XPath

XPath uses a path-like syntax to locate elements within an XML or HTML document. It works very much like a traditional file system.

To find the XPath for a particular element on a page:

- Right-click the element on the page and click on "Inspect" to open the developer tools tab.

- Select the element in the Elements Tab.

- Click on "Copy" -> "Copy XPath".

Note: If the copied XPath does not give you the desired result, choose "Copy full XPath" instead. The rest of the steps are the same.

We can now run the following code to extract the first article on the page using XPath:

from bs4 import BeautifulSoup

import requests

from lxml import etree

url = 'https://techcrunch.com/category/startups/'

headers = {

    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",

    "Accept-Language": "en-US, en;q=0.5",

}

response = requests.get(url, headers=headers)

if response.status_code == 200:

    soup = BeautifulSoup(response.content, "lxml")

    dom = etree.HTML(str(soup))

    print(dom.xpath('//*[@id="tc-main-content"]/div/div[2]/div/article[1]/header/h2/a')[0].text)

Here’s the output:

New geospatial data startup streamlines satellite imagery visualization
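BeautifulSoup has no XPath engine of its own, which is why the code above hands the document to lxml. If XPath is all you need, you can also parse with lxml directly. The sketch below does that on a hard-coded page; the markup is a simplified, hypothetical version of the structure the XPath above targets, and the alias lxml_html is just our naming choice.

```python
from lxml import html as lxml_html

# Hypothetical page, simplified from the structure the XPath above targets.
page = """
<html><body>
<main id="tc-main-content">
  <article><header><h2><a href="/a">First story</a></h2></header></article>
  <article><header><h2><a href="/b">Second story</a></h2></header></article>
</main>
</body></html>
"""
tree = lxml_html.fromstring(page)

# text() and @href return plain strings instead of element objects.
titles = tree.xpath('//*[@id="tc-main-content"]//article/header/h2/a/text()')
links = tree.xpath('//*[@id="tc-main-content"]//article/header/h2/a/@href')

print(titles)
print(links)
```

Using // between the container and article makes the expression less brittle than a copied path full of positional div[2] steps, which tends to break as soon as the page layout shifts.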

Requests and BeautifulSoup Scraping Example

Let's combine all of this to scrape TechCrunch for a list of startup articles:

import requests

from bs4 import BeautifulSoup

url = 'https://techcrunch.com/category/startups/'

article_list = []

response = requests.get(url)

if response.status_code == 200:

    soup = BeautifulSoup(response.content, "lxml")

    articles = soup.find_all('header')

    for article in articles:

        title = article.get_text(strip=True)

        # Skip header elements that do not contain a link

        link = article.find('a')

        if link is None:

            continue

        print(title)

        print(link['href'])

This code will print the title and URL of each startup article.
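One way to make this logic easier to test is to separate the parsing from the download. The sketch below wraps the extraction in a function that accepts an HTML string, so it can be exercised on a fixed sample without touching the network. The function name extract_articles and the sample markup are our own assumptions, and the title is taken from the link text rather than the whole header.

```python
from bs4 import BeautifulSoup


def extract_articles(html):
    """Return a list of {'title', 'url'} dicts for every header that contains a link."""
    soup = BeautifulSoup(html, "html.parser")
    articles = []
    for header in soup.find_all("header"):
        link = header.find("a")
        # Some header elements (for example, the site banner) may have no usable link.
        if link is None or not link.get("href"):
            continue
        articles.append({"title": link.get_text(strip=True), "url": link["href"]})
    return articles


# Hypothetical sample standing in for response.text.
sample = """
<header><h2><a href="https://example.com/a">First story</a></h2></header>
<header><nav>Site menu</nav></header>
"""
print(extract_articles(sample))
# [{'title': 'First story', 'url': 'https://example.com/a'}]
```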

Saving the Data to a CSV File

Once you have extracted the data you want from the web page, you can save it to a CSV file for further analysis. To do this using the csv module, add this code to your scraper:

import requests

from bs4 import BeautifulSoup

import csv

url = 'https://techcrunch.com/category/startups/'

article_list = []

response = requests.get(url)

if response.status_code == 200:

    soup = BeautifulSoup(response.content, "lxml")

    articles = soup.find_all('header')

    for article in articles:

        title = article.get_text(strip=True)

        # Skip header elements that do not contain a link

        link = article.find('a')

        if link is None:

            continue

        article_list.append([title, link['href']])

# Write the collected rows to a CSV file

with open('startup_articles.csv', 'w', newline='', encoding='utf-8') as f:

    csvwriter = csv.writer(f)

    csvwriter.writerow(['Title', 'URL'])

    for article in article_list:

        csvwriter.writerow(article)

This will create a CSV file named startup_articles.csv and write the titles and URLs of the articles to the file. You can then open the CSV file in a spreadsheet program like Microsoft Excel or Google Sheets for further analysis.
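Titles often contain commas and quotation marks, which the csv module escapes for you. The sketch below shows this with csv.DictWriter, writing to an in-memory buffer instead of a file so you can inspect the result and read it back. The rows and the helper name articles_to_csv are made up for the example.

```python
import csv
import io

# Hypothetical rows; the second title contains a comma and quotes on purpose.
articles = [
    {"title": "First story", "url": "https://example.com/a"},
    {"title": 'Title with, comma and "quotes"', "url": "https://example.com/b"},
]


def articles_to_csv(rows, stream):
    """Write rows (dicts with 'title' and 'url') to any file-like object."""
    writer = csv.DictWriter(stream, fieldnames=["title", "url"])
    writer.writeheader()
    writer.writerows(rows)


buffer = io.StringIO(newline="")
articles_to_csv(articles, buffer)
print(buffer.getvalue())

# Reading the data back confirms that the special characters survived.
buffer.seek(0)
round_trip = [dict(row) for row in csv.DictReader(buffer)]
print(round_trip == articles)  # True
```

To write to disk instead, pass a file opened with open('startup_articles.csv', 'w', newline='', encoding='utf-8') as the stream argument.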

Keep Learning

Congratulations, you just scraped your first website using Requests and BeautifulSoup!

Want to learn more about web scraping? Visit our Scraping Hub. There, you’ll find everything you need to become an expert.

Or, if you’re ready to tackle some real projects, follow our advanced tutorials:

- How to Scrape Cloudflare Protected Websites with Python

- How to Scrape Amazon Product Data

- How to Scrape LinkedIn with Python

- How to Scrape Walmart using Python and BeautifulSoup

Keep exploring, and have fun scraping!