Extracting data from an HTML and parsing it can seem like a huge challenge for a Node.js engineer tasked with a web scraping project. But once you get your Node.js scrapers up and running, you’ll quickly notice there is a bigger challenge in place — overcoming website blockers, CAPTCHAs, IP bans, and more.

So, how can you build and improve your Node.js scraping infrastructure to avoid getting blocked?

In this guide, we’ll break down the various available Node.js web scraping tools and libraries and explain how you can use them for your data collection projects.

Then, we’ll dive deeper into using advanced scraping APIs, such as ScraperAPI, that are indispensable for higher speed and scale in web scraping.

Let ScraperAPI handle all web scraping complexities and start gathering data in minutes

What You Need to Know Before Scraping in Node.js

Scraped data is not equal and you should draw a clear line between what you can and must not scrape. So what are the things you can’t do?

- Avoid reselling personal information for commercial purposes. It is against the ethics and standards set by the GDPR and California Consumer Privacy Act

- Do not scrape company’s data if there are provisions to APIs, as it is always advised to use them instead of scraping web data

- Ensure you are permitted to scrape only relevant endpoints from the user-agent page. To know the right endpoints allowed, check their website robots.txt file, like this: https://amazon.com/robots.txt

The Best Node.js Web Scraping Tools and Libraries

As already mentioned, whatever you want to scrape from the web, these libraries will do the job and meet your data collection needs.

Before writing a line of code, you need to consider the following prerequisites:

- Have Node.js installed on your local machine, which comes included with the package manager npm

- Have a basic, at minimum, understanding of JavaScript

- Knowledge of using the DevTools in the browser to inspect sites’ elements

Done? Now, let’s move to the overview of available tools and libraries you can use for web scraping.

1. Axios and Cheerio

Axios is a promise-based HTTP client developers utilize to send requests from client browsers and Node.js applications, with the page content received as the response.

Thanks to its simplicity, Axios is one of the easiest implementations to fetch HTML code from a web page in JavaScript projects.

On the other hand, Cheerio is a dependency package that parses markup into DOM-like objects, offering an API with methods for traversing and manipulating the code’s data structure. The implementation of Cheerio is reminiscent of jQuery.

To use it, install the package into an initialized project using the following command:

</p>

npm install cheerio axios

<p>Copy-paste this code into the entry point file:

</p>

const express = require('express');

const axios = require('axios');

const cheerio = require('cheerio');

const app = express();

const PORT = process.env.PORT || 3000;

const website = 'https://news.sky.com';

try {

axios(website).then((res) => {

const data = res.data;

const $ = cheerio.load(data);

let content = [];

$('.sdc-site-tile__headline', data).each(function () {

const title = $(this).text();

const url = $(this).find('a').attr('href');

content.push({

title,

url,

});

app.get('/', (req, res) => {

res.json(content);

});

});

});

} catch (error) {

console.log(error, error.message)

}

app.listen(PORT, () => {

console.log(`server is running on PORT:${PORT}`);

});

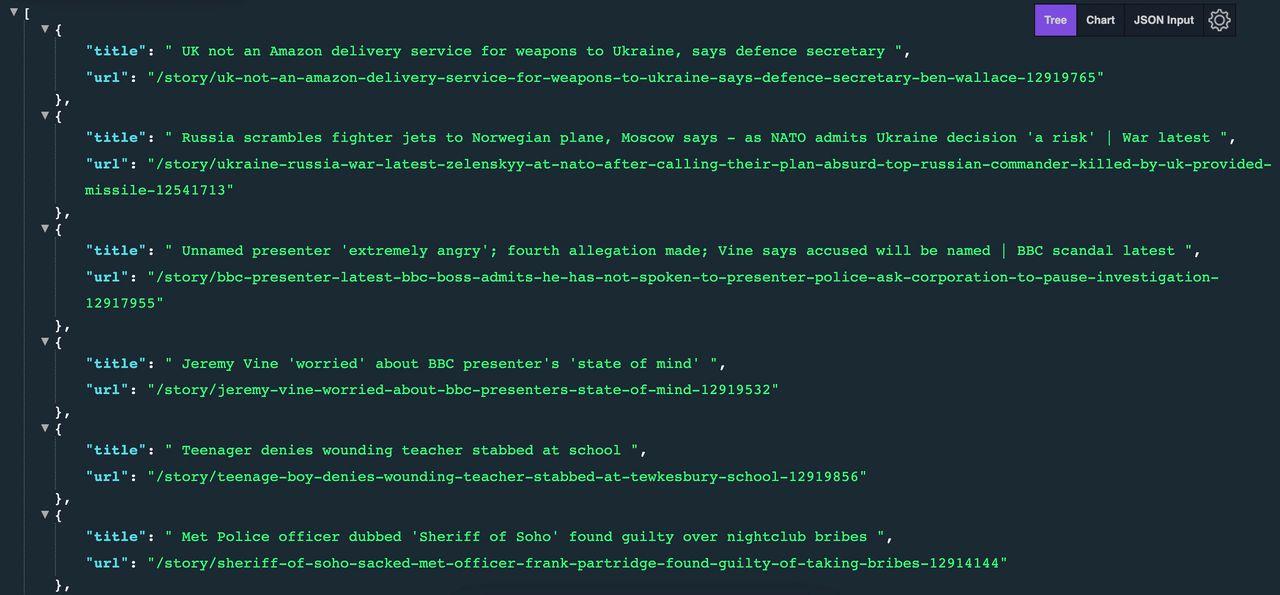

<p>In the provided example code:

- The Node.js framework Express is employed to exhibit the responses from Axios and Cheerio through the home route endpoint (“/”) using the GET method

- The response data from Axios is retrieved and loaded into Cheerio

- Cheerio then conducts a search on the website, selecting elements within the document

- It iterates through these elements, compiling the content into an array of objects that showcase the page’s title and URL

The anticipated outcome is as follows:

If you’d like to learn more about using Axios and Cheerio to collect web data, check our LinkedIn scraper tutorial with Node.js.

2. Puppeteer

Puppeteer, a headless version of Chrome or Chromium, represents a Node.js library employed programmatically through the CLI (command-line interface) or directly within a Node.js environment.

It emulates the actions of a real user, encompassing activities such as scrolling, clicking, generating screenshots, facilitating automated testing, and more.

To install Puppeteer within your project directory, use the following command:

</p>

npm install puppeteer

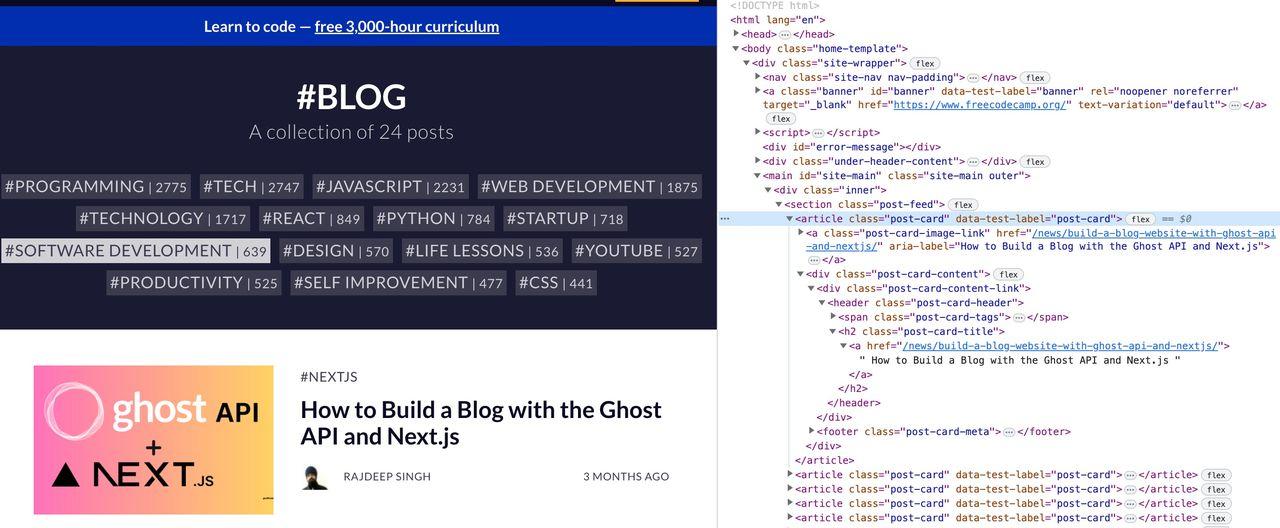

<p>For the purpose of this tutorial, we will use the freeCodeCamp website to extract its blog title using Puppeteer.

Proceed by copying and pasting the provided code:

</p>

index.js

const puppeteer = require("puppeteer");

async function run() {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto("https://www.freecodecamp.org/news/tag/blog/");

// Get all blog title

const titles = await page.evaluate(() =>

Array.from(document.querySelectorAll(".post-feed .post-card"), (e) => ({

blog: e.querySelector(".post-card-content .post-card-title a").innerText,

}))

);

console.log(titles);

await browser.close();

}

run();

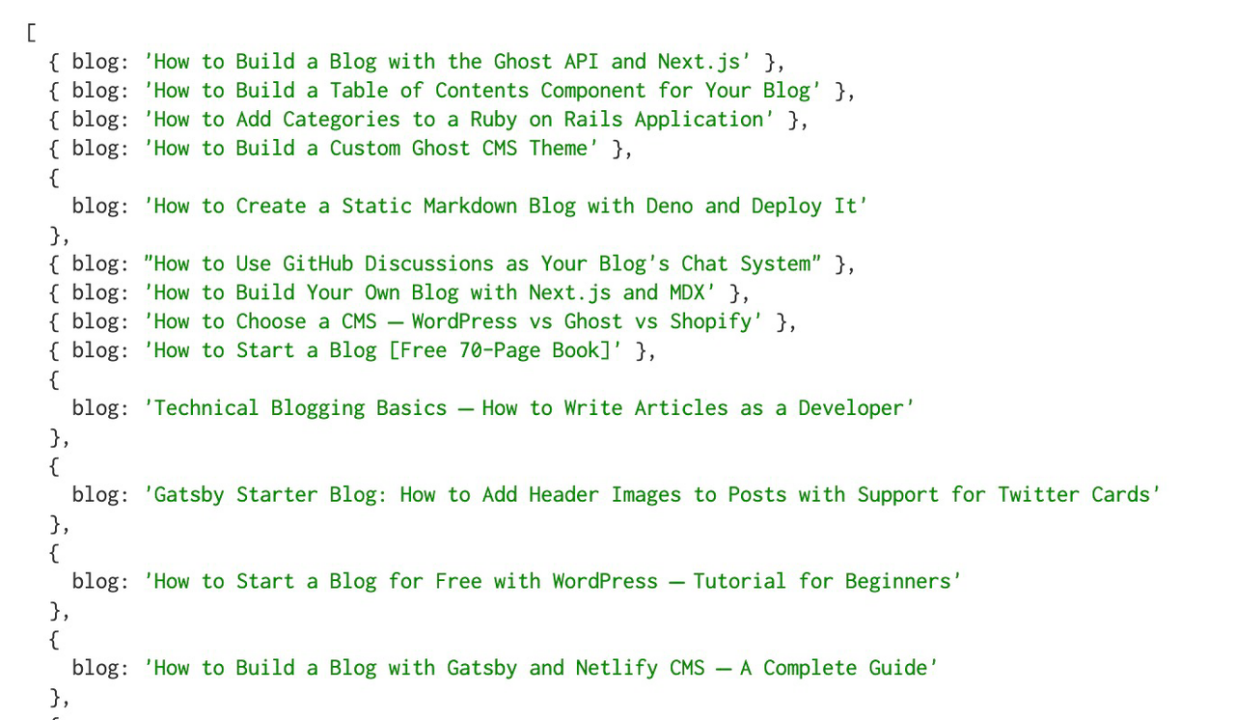

<p>The rendered page queries all the H2 titles using the querySelectorAll method and displays the results as an object.

The result of running this script in the terminal looks like this:

The drawback of using Puppeteer is the slow performance and the time it takes on complex web pages.

As a rule of thumb, using a headless browser should be the last method when there’s no other way to access the data.

Want to learn more? In this tutorial we show you how to collect hundreds of hotel prices using Node.js and Puppeteer – with a simple trick to improve success rate to 99.99%.

3. ScraperAPI

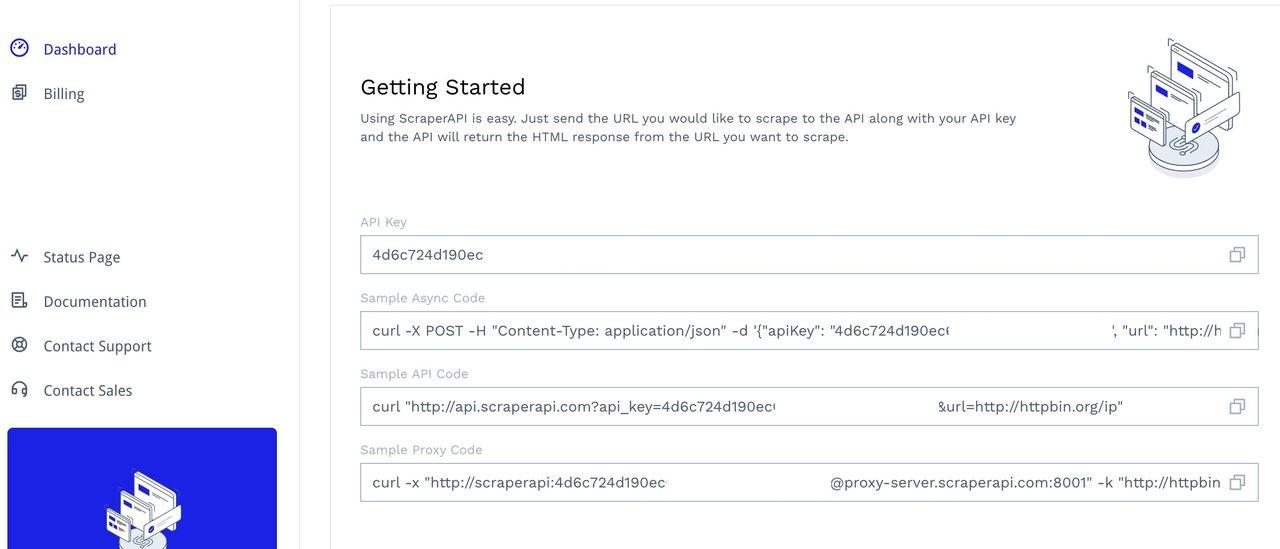

ScraperAPI is a tool that deals with proxy rotation, handling CAPTCHAs, IP blocks, and JS rendering. As a result, you can scrape even the most difficult and unpredictable domains at high speed and scale, and by adding more concurrencies to your scrapers, you can scrape pages asynchronously, without getting blocked.

To access the tool, sign up for an account to access your dashboard, where you’ll find your API key and some sample code to test:

Discover how to integrate and implement ScraperAPI with Axios requests in this guide and the sample code using Puppeteer in Node.js.

Note: It is recommended to set a navigation timeout in your application when hitting endpoints or scraping the websites to get the best success rates and avoid getting blocked on hard-to-scrape domains.

What are the use cases of ScraperAPI and its benefits?

ScraperAPI can address the following challenges:

- Proxy management

- JavaScript rendering

- Browser and CAPTCHA handling

- Downloads in HTML or JSON

- Structured data endpoints (e.g., Amazon and Google scraping)

- Async scraper and concurrent requests

- Low-code scraper (DataPipeline)

Get 5,000 free API credits to test all ScraperAPI’s tools and features

Use Cases of Web Scraping in Node.js

Web scraping with Node.js can help achieve diverse business objectives and provide you with the desired amount of data.

Let’s quickly check possible applications of web scraping so you can identify areas for web scraping in your niche.

1. Market Research and Competitive Analysis

Web scraping facilitates the collection of intelligence on competitors’ products, pricing strategies, and marketing tactics. Such insights empower businesses to refine their offerings and enhance product positioning by understanding competitors’ strategies.

💡 Idea: Scrape Amazon using structured data endpoints and get well-structured data on products, offers, reviews, and more in JSON format.

You can use web scraping for Amazon to identify trending products, learn about keywords that help competitors rank in the marketplace, and evaluate your competitors’ market share in almost no time.

Resource: How to Use Web Scraping to Empower Marketing Decisions

2. Content Aggregation

With data collection, you can curate content from a multitude of sources. Thanks to this, you can create comprehensive and constantly updated content hubs. News aggregation websites and content-centric platforms use data collection for this specific purpose.

3. Price Monitoring and Comparisons

E-commerce enterprises leverage web scraping to monitor rivals’ websites, keeping a vigilant watch on product prices and availability. This practice facilitates dynamic pricing strategies, ensuring competitive positioning in the market.

💡 Idea: Get competitors’ pricing data continuously and be the first to learn about new pricing trends and adjust your company’s prices appropriately.

By using DataPipeline, you can schedule the intervals within which to run scraping jobs and connect data to your app or download it to a folder. This lets you collect pricing insights 24/7 and keep your offers competitive.

By utilizing multiple concurrent threads and Async scraper functionalities, you can scrape hundreds of thousands of pages without getting blocked.

4. Financial Data Analysis

Node.js serves as an adept tool for scraping financial websites, extracting stock market data, economic indicators, and other vital financial information. This harvested data proves indispensable for analysis, investment decisions, and predictive trend modeling.

Resource: Alternative Data Scraping is the Next Big Thing in Finance

5. Real Estate Data

Companies operating in the real estate sector can harness web scraping to gather property listings, rental prices, and market trends. This reservoir of information can then be disseminated to clients and investors.

6. Job Market Insights

Scrutinizing job listing websites via web scraping unveils invaluable insights into job market dynamics, the demand for specific skills, and prevailing salary ranges. This data is an asset to both job seekers and employers.

💡 Idea: Learn how to scrape Glassdoor without using headless browsers or logging in to the website. As a result, you can stay fully compliant with international and country laws on data collection.

7. Social Media Analytics

Scraping data from social media platforms yields a trove of information encompassing user engagement, sentiment analysis, and trending topics. By deciphering these insights, businesses can fine-tune their marketing strategies and enhance customer interactions.

💡 Idea: Don’t cross the line with scraping social media websites. In fact, tools like BrightData were sued by Twitter with an allegation of reselling data and scraping behind the login page.

Learn about legal implications before you start collecting data. Explore how to scrape LinkedIn legally so you remain compliant with the platform’s laws.

8. Search Engine Optimization (SEO)

You can monitor keyword rankings, and organic and paid results using data collection tools or building your scrapers from scratch.

Given the fact that most agencies use off-the-shelf tools such as Ahrefs or Semrush to build SEO plans, you can quickly get a competitive advantage by collecting rankings data with your custom tool and monitoring competitor rankings in specific intervals.

💡 Idea: Scrape organic and paid rankings at scale using the Google search structured endpoint in ScraperAPI.

As a result, you can get a large amount of data in a matter of seconds and download the results in JSON.

You don’t have to parse your data as it’s already processed and structured for you.

Summing Up

You can build Node.js scrapers with Puppeteer, Cheerio, and axios. However, building and maintaining code infrastructure can quickly become a cumbersome task to handle. It also puts considerable limitations on speed and scalability.

If you need to scrape data at scale, consider using more advanced tools that help scrape asynchronously, overcome website blockers, automate parsing and rendering, and help you get to data faster without complicated workarounds.

ScraperAPI makes data more accessible and easier to collect at scale.

Sign up for a free trial or contact our sales team to work out the best solution for your business case and receive an extended trial and more testing credits.

Until next time, happy scraping!