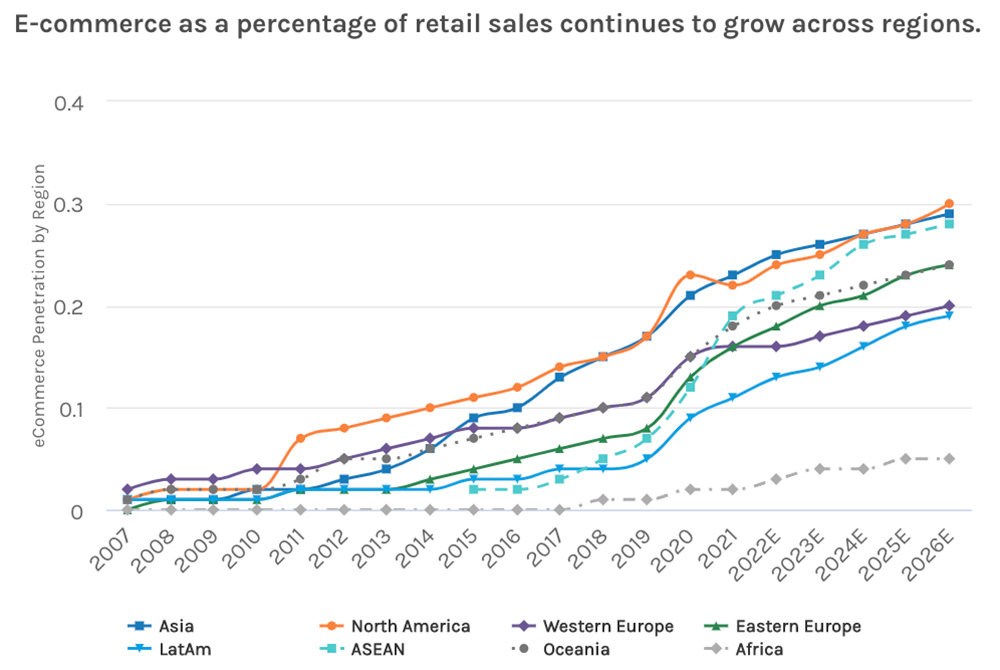

Online shopping is nothing new, but we’ve seen exponential growth in eCommerce sales in recent years.

The pandemic accelerated eCommerce adoption along what was already a clear upward trajectory. After all, marketplaces like Amazon and eBay have more than demonstrated that consumer behavior is shifting.

However, this also means there’s more competition than ever before across all categories, making it harder for newcomers and even small to mid-sized eCommerce companies to succeed.

To overcome your competition, you’ll need to take advantage of any resources available to get ahead and seize market share, and in today’s world, there’s nothing more advantageous than data.

With the correct data, you’ll be able to make better decisions on your products, marketing, and overall business strategies.

But the question remains: how do you go about getting this data?

You scrape it.

What is eCommerce Web Scraping?

Web scraping is the process of extracting publicly available data from websites for analysis, reporting, or repurposing. In that sense, eCommerce web scraping is about collecting the data you need to make better business decisions, such as pricing data or product reviews.

By using a web scraping tool suited to this sector, businesses can set better (or even dynamic) prices for their products, inform product launches based on customers’ needs, improve their products by finding common complaints against their competitors, and more.

Is It Legal to Scrape eCommerce Websites?

Of course, if your competitors own the data you intend to extract, isn’t there a risk of legal repercussions? Well, it all comes down to the data you’re extracting.

If you paid attention, there are two words in our definition of web scraping that keep you in the clear legally: publicly available. As long as the data you’re scraping is available to the public, it can be scraped.

Most eCommerce sites display all product, pricing, and review data on their pages for anyone to see. So it’s totally fine to extract this information.

However, there are certain practices that you need to avoid:

- Don’t scrape content behind login walls. If you need an account to access the data, you probably accepted their terms of service. In most cases, there’s a clause about web scraping.

- Don’t republish this data on your own sites. You can use extracted data to inform your strategies, not to plagiarize their sites. For example, you can scrape product descriptions across all your competitors to find common terms they use (especially if they rank high in search engines), but you can’t copy and paste their descriptions.

There’s an ongoing debate on this matter, but if you follow these two directives, you’ll be fine. Still, you can learn more about this in our complete guide to legal web scraping.

Web Scraping Use Cases for eCommerce

Now that you understand why you should be using web scraping, let’s explore a few different ways you can use this technique to grow your eCommerce business and get ahead of your competitors:

1. Price Monitoring

Pricing is one of the most important factors in conversions. With so much information available online, customers can compare similar products in just a few clicks, making it crucial to have a suitable pricing model for your products to see conversions go up.

Using web scraping, you can track your competitors’ pricing for the same or similar products you offer and use this information to set the pricing of your product.

A good place to start would be to scrape pricing data from Amazon. According to Martech.org, “Nearly 70 percent of online shoppers use Amazon to compare products found on a brand’s website.” So, keeping an eye on pricing changes on the platform can allow you to dynamically change your product pages to remain competitive without spending countless hours of research.
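A minimal sketch of the change-detection step, assuming you already scrape competitor prices on a schedule and store each run as a product-to-price mapping. The function name, the 2% threshold, and the SKUs are hypothetical:

```python
from typing import Dict, List, Tuple


def detect_price_changes(
    previous: Dict[str, float],
    current: Dict[str, float],
    min_pct: float = 2.0,
) -> List[Tuple[str, float, float, float]]:
    """Return (product, old_price, new_price, pct_change) for every product
    whose price moved by at least `min_pct` percent between two scrapes."""
    changes = []
    for product, new_price in current.items():
        old_price = previous.get(product)
        if not old_price:
            continue  # new listing or missing baseline: nothing to compare against
        pct = (new_price - old_price) / old_price * 100
        if abs(pct) >= min_pct:
            changes.append((product, old_price, new_price, round(pct, 2)))
    return changes


# Hypothetical snapshots from two consecutive daily scrapes
yesterday = {"sku-101": 89.99, "sku-102": 120.00, "sku-103": 35.00}
today = {"sku-101": 79.99, "sku-102": 120.00, "sku-103": 35.50}

for product, old, new, pct in detect_price_changes(yesterday, today):
    print(f"{product}: {old} -> {new} ({pct:+}%)")
```

Only sku-101 (a drop of roughly 11%) is reported; the 50-cent change on sku-103 stays under the threshold, which keeps small fluctuations from triggering a repricing.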

Tracking market prices for your products can also help you decide where best to invest your money. If prices are falling too aggressively in a category, it might be time to focus elsewhere. On the other hand, if prices are rising within a category you serve, it might be a good idea to double down on your marketing to gain more ground.
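The same scraped history can drive the category-level decision. A sketch, assuming you keep a list of scraped prices per product in each category; the 5% threshold, category names, and prices are hypothetical, and the labels simply restate the rule of thumb in the paragraph above:

```python
from statistics import mean
from typing import Dict, List


def category_trend(price_history: Dict[str, List[float]]) -> float:
    """Average percentage change (first vs. latest scraped price) across a category's products."""
    changes = [
        (prices[-1] - prices[0]) / prices[0] * 100
        for prices in price_history.values()
        if len(prices) >= 2 and prices[0] > 0
    ]
    return mean(changes) if changes else 0.0


def classify(pct_change: float, threshold: float = 5.0) -> str:
    """Map a category's price trend to a rough investment decision."""
    if pct_change <= -threshold:
        return "falling: consider focusing elsewhere"
    if pct_change >= threshold:
        return "rising: consider doubling down on marketing"
    return "stable"


# Hypothetical weekly prices scraped for two categories
categories = {
    "headphones": {"sku-1": [100, 95, 88, 80], "sku-2": [60, 58, 55, 54]},
    "desk-lamps": {"sku-3": [40, 42, 44, 45], "sku-4": [25, 26, 27, 27]},
}
for name, history in categories.items():
    pct = category_trend(history)
    print(f"{name}: {pct:+.1f}% -> {classify(pct)}")
```

Comparing only the first and latest observations is the simplest possible trend measure; with noisy data, a regression slope or a moving average would be less sensitive to a single outlier scrape.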

To help you get started, here’s a step-by-step tutorial on how to scrape Amazon pricing data without getting blocked.

2. Product Page Data

Product pages are the bread and butter of any eCommerce site. These act as your salesman and are filled with invaluable information about your product, processes, and sometimes your customers.

A good use of product page data is optimizing your listings by extracting product descriptions, titles, images, features, and more from your competitors and using this data to improve your own pages.

Note: Be aware of copyrighted material! This type of content is protected by law from being used without the owner’s consent. To avoid copyright infringement in your projects, make it a part of your initial research and testing to ensure your scraper isn’t extracting any copyrighted information.

For example, by scraping Google’s page one results for products in your category, you can quickly find common sections (headings) and terms (keywords) worth adding to your page to help it rank better and increase conversions.
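A minimal sketch of the heading analysis, assuming you have already fetched the HTML of the ranking pages (the fetching step is not shown). It uses only Python’s standard `html.parser`; the `HeadingCollector` class, the stopword list, and the sample pages are hypothetical:

```python
import re
from collections import Counter
from html.parser import HTMLParser
from typing import List, Tuple

# Hypothetical stopword list; extend it for your language and niche
STOPWORDS = {"the", "a", "an", "and", "or", "for", "of", "to", "in", "with", "your", "our", "on"}


class HeadingCollector(HTMLParser):
    """Collects the text of <h1>, <h2>, and <h3> elements from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: List[str] = []
        self._in_heading = False

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3"):
            self._in_heading = True
            self.headings.append("")

    def handle_endtag(self, tag):
        if tag in ("h1", "h2", "h3"):
            self._in_heading = False

    def handle_data(self, data):
        if self._in_heading:
            self.headings[-1] += data


def common_terms(pages: List[str], top_n: int = 5) -> List[Tuple[str, int]]:
    """Count how many pages use each heading term (a term counts once per page)."""
    counts: Counter = Counter()
    for html in pages:
        parser = HeadingCollector()
        parser.feed(html)
        words = set()
        for heading in parser.headings:
            words.update(w for w in re.findall(r"[a-z0-9]+", heading.lower()) if w not in STOPWORDS)
        counts.update(words)
    return counts.most_common(top_n)


# Hypothetical HTML from three ranking product pages
pages = [
    "<h1>Waterproof Hiking Boots</h1><h2>Sizing Guide</h2>",
    "<h1>Best Hiking Boots for Winter</h1><h2>Waterproof Materials</h2>",
    "<h2>Hiking Boots Sizing</h2><p>...</p>",
]
print(common_terms(pages))
```

Counting each term once per page (rather than every occurrence) measures how many competitors use it, which is closer to the “common sections” signal described above than raw word frequency.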

Other data points worth monitoring are delivery times and return policies. Offering a better service and benefits is a big differentiator that can get you more sales.

Also, scraping product reviews can give you the necessary insights to improve your offerings or exploit common complaints to win over your competitors’ customers.

Besides Amazon, scraping eBay can help you collect millions of product descriptions, titles, images, and more. So don’t sleep on the platform when optimizing your listings.

Pro Tip:

Manufacturers keep updating their products. Instead of investing hundreds of hours copying and updating manufacturer information, you can scrape this data weekly and automatically update your pages.

This information is made publicly available by manufacturers, so things like product descriptions and technical specifications are, more often than not, legal to scrape. However, it’s always worth double-checking directly with the source if you’re in doubt.

For example, scraping patent information to display on your site can be a huge gray area and you’d want to ask for permission before scraping this type of data.
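For the weekly update in the Pro Tip above, a sketch of the comparison step, assuming you’ve already scraped the manufacturer’s spec table into a field-to-value mapping. Diffing against what your page currently shows means you only push (and review) updates when something actually changed. The function name, fields, and values are hypothetical:

```python
from typing import Dict, Optional, Tuple

Change = Tuple[Optional[str], Optional[str]]


def diff_specs(stored: Dict[str, str], scraped: Dict[str, str]) -> Dict[str, Change]:
    """Return {field: (old_value, new_value)} for every spec field that was
    added, removed, or changed since the last scrape (None marks a missing side)."""
    changes: Dict[str, Change] = {}
    for field in stored.keys() | scraped.keys():
        old, new = stored.get(field), scraped.get(field)
        if old != new:
            changes[field] = (old, new)
    return changes


# Hypothetical specs: what your page shows vs. this week's scrape of the manufacturer page
stored = {"battery": "4000 mAh", "weight": "180 g", "color": "black"}
scraped = {"battery": "4500 mAh", "weight": "180 g", "color": "black", "charging": "25 W"}

for field, (old, new) in sorted(diff_specs(stored, scraped).items()):
    print(f"{field}: {old} -> {new}")
```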

3. Trends Monitoring and Forecasting

Let’s say you’re looking to launch a new product. Wouldn’t it be great to determine whether or not people are actually looking for a solution like yours?

Web scraping is widely used in forecasting as it’s a fast and reliable way to collect massive amounts of data to find trends in the market. With this information, you can identify the best moment for a product launch or even find new pain points to solve.

To scrape trends data, you can use libraries like pytrends. If you haven’t used this library before or don’t have experience in web scraping, here’s a great real-life example of how to scrape Google Trends data to determine the popularity of specific topics. This article is written so even non-technical people can follow along and find interesting insights.

Something to remember is that even if a topic isn’t trending at the moment, it isn’t necessarily dead. It’s important to take factors like seasonality into account. If interest in a topic consistently rises at specific points throughout the year, that can be a good indicator of a successful product launch opportunity.
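To check for seasonality, you can average interest by calendar month across several years and look for months well above the overall average. A sketch, assuming monthly (YYYY-MM, interest) pairs like the 0 to 100 scores Google Trends reports (exported manually or pulled with a library such as pytrends); the `seasonal_peaks` name, the 1.2x lift threshold, and the sample series for a hypothetical summer product are assumptions:

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def seasonal_peaks(series: List[Tuple[str, float]], lift: float = 1.2) -> List[int]:
    """Return the months (1-12) whose average interest is at least `lift`
    times the overall average. `series` holds ("YYYY-MM", interest) pairs."""
    by_month: Dict[int, List[float]] = defaultdict(list)
    for date, value in series:
        by_month[int(date[5:7])].append(value)
    overall = mean(value for _, value in series)
    return sorted(month for month, values in by_month.items() if mean(values) >= lift * overall)


# Hypothetical two years of monthly interest for a summer product
pattern = [10, 12, 20, 35, 60, 90, 100, 70, 30, 15, 10, 8]
series = [
    (f"{year}-{month:02d}", value)
    for year in (2021, 2022)
    for month, value in enumerate(pattern, start=1)
]
print(seasonal_peaks(series))
```

Averaging over several years is what separates a recurring seasonal peak from a one-off spike; with only one year of data, the two look the same.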

Another strategy is using demand forecasting. By tracking the demand for products, you’ll be able to predict how much of a product you’ll need to keep in stock at any given time and in which locations.
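A deliberately naive sketch of demand forecasting: predict next week’s demand per location as the moving average of recent weeks, then order enough to cover it plus a safety buffer. The function names, window, safety factor, locations, and sales figures are hypothetical, and real forecasting models also account for trend and seasonality, which this one ignores:

```python
import math
from statistics import mean
from typing import List


def forecast_next_week(weekly_sales: List[int], window: int = 4) -> float:
    """Naive moving-average forecast: the mean of the last `window` weeks."""
    recent = weekly_sales[-window:]
    return mean(recent) if recent else 0.0


def units_to_order(weekly_sales: List[int], on_hand: int,
                   safety_factor: float = 1.2, window: int = 4) -> int:
    """Units to restock so inventory covers the forecast plus a safety buffer."""
    target = forecast_next_week(weekly_sales, window) * safety_factor
    return max(0, math.ceil(target - on_hand))


# Hypothetical weekly unit sales and current stock per warehouse
sales_by_location = {"berlin": [40, 42, 50, 55, 60], "madrid": [20, 18, 22, 21, 19]}
stock = {"berlin": 30, "madrid": 40}
for location, sales in sales_by_location.items():
    print(location, units_to_order(sales, stock[location]))
```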

4. Reputation Management and Sentiment Analysis

Understanding what your customers say about your products and brand is vital to improve your marketing and messaging and to be able to respond in a timely manner to negative feedback growing around you.

Web scraping can allow you to collect thousands of reviews daily without needing a dedicated team to read through them one by one.

You can collect this data and use it to identify those that matter the most and your customers’ overall sentiment toward your brand.

For example, you could develop a scoring system that automatically alerts you when a review drops below a certain threshold. This way, you’ll only need to read those that require a quick response.
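One hypothetical way to implement such a scoring system: start from the star rating and subtract a penalty for each negative term in the review text. The term list, the 0.5 penalty, the 2.5 threshold, and the sample reviews are all assumptions, and plain substring matching is crude (it would also match “late” inside “chocolate”):

```python
from dataclasses import dataclass
from typing import List

# Hypothetical negative terms; tune these for your products
NEGATIVE_TERMS = {"broken", "refund", "late", "fake", "defective", "never arrived"}


@dataclass
class Review:
    author: str
    rating: int  # 1-5 stars
    text: str


def review_score(review: Review) -> float:
    """Star rating minus 0.5 for each negative term found (simple substring match)."""
    text = review.text.lower()
    hits = sum(term in text for term in NEGATIVE_TERMS)
    return review.rating - 0.5 * hits


def needs_attention(reviews: List[Review], threshold: float = 2.5) -> List[Review]:
    """Reviews whose score falls below the alert threshold."""
    return [r for r in reviews if review_score(r) < threshold]


reviews = [
    Review("ana", 5, "Great fit, fast delivery"),
    Review("li", 3, "Arrived late and the box was broken"),
    Review("sam", 2, "Looks fake, asking for a refund"),
]
for r in needs_attention(reviews):
    print(f"ALERT {r.author}: score {review_score(r)}")
```

The 3-star review gets flagged because of its text, which a rating-only threshold would have missed.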

On the same note, you can identify negative words or phrases in reviews and use them to find emerging negative trends to act before the issue escalates.
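A simple way to spot emerging issues is to compare how many reviews mention each watched word this period versus the previous one and flag sharp increases. A sketch, assuming single-word terms and a hypothetical watchlist, minimum count, and growth factor:

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple


def term_counts(texts: Iterable[str]) -> Counter:
    """Number of reviews mentioning each word (each review counts a word once)."""
    counts: Counter = Counter()
    for text in texts:
        counts.update(set(re.findall(r"[a-z']+", text.lower())))
    return counts


def emerging_terms(previous: List[str], current: List[str], watchlist: Set[str],
                   min_count: int = 3, growth: float = 2.0) -> List[Tuple[str, int, int]]:
    """Flag watched terms that appear in at least `min_count` current reviews
    and at least `growth` times as often as before (a zero baseline counts as 1)."""
    before, now = term_counts(previous), term_counts(current)
    flagged = []
    for term in sorted(watchlist):
        if now[term] >= min_count and now[term] >= growth * max(before[term], 1):
            flagged.append((term, before[term], now[term]))
    return flagged


# Hypothetical watchlist and two weeks of scraped reviews
watchlist = {"leak", "leaking", "smell", "cracked", "late"}
last_week = ["Good bottle", "Arrived late", "Nice color"]
this_week = ["Lid is leaking", "Leaking after a week", "Started leaking in my bag", "Arrived late"]
print(emerging_terms(last_week, this_week, watchlist))
```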

Pro Tip: You can go beyond reviews and scrape forums like Reddit and Quora, and even social media, to find more conversations around your brand and products.

5. Product Research

New products are always a risky investment, so having the data to support the development and launch of new products can save or make you millions of dollars.

By collecting enough data about an industry, topic, or problem, you can determine the best way to enter the market, whether that means creating a new product to fill a need or improving on existing products based on negative reviews of your competitors.

You can also scrape marketplaces like Etsy to find trending products and give them your own spin to target a different niche. Etsy is full of independent creators with interesting ideas worth exploring and can give you the inspiration and data to support the launch.

6. Lead Generation

Converting visitors into leads can have a huge impact on your eCommerce success. After all, what’s eCommerce without customers?

Although there are many traditional channels for generating leads, they tend to be slow, and scaling them depends on the size of your budget. To complement these techniques, you can use web scraping to collect contact information and build an email list of potential customers, helping you scale your outreach efforts without the hassle.

You can also monitor which techniques your competitors and other companies in your industry use to generate leads by spotting terms like “special offer” or “free resources” in their content.
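A minimal sketch of that monitoring step, assuming you’ve already scraped the visible text of a competitor page. The tactic names and regular expressions are hypothetical examples you’d tune for your industry:

```python
import re
from typing import List

# Hypothetical patterns for common lead-generation tactics
LEAD_GEN_PATTERNS = {
    "discount": r"\b\d{1,2}% off\b|\bdiscount code\b",
    "free_resource": r"\bfree (?:guide|ebook|e-book|checklist|template)\b",
    "newsletter": r"\b(?:subscribe|sign up) (?:to|for) (?:our )?newsletter\b",
    "free_shipping": r"\bfree shipping\b",
}


def lead_gen_tactics(page_text: str) -> List[str]:
    """Return the names of the tactics whose pattern appears in the page text."""
    text = page_text.lower()
    return [name for name, pattern in LEAD_GEN_PATTERNS.items() if re.search(pattern, text)]


# Hypothetical text scraped from a competitor's homepage
sample = ("Get 15% off your first order when you sign up for our newsletter. "
          "Download our free guide to trail running.")
print(lead_gen_tactics(sample))
```

Running this across competitors on a schedule shows which tactics are common in your niche and when a competitor starts or stops using one.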

7. Anti-Counterfeiting

According to the IACC, counterfeiting is “a fraudulent imitation (a forgery) of a trusted brand and product,” and a counterfeit is “an item that uses someone else’s trademark without their permission.” The objective: “seek(ing) to profit unfairly from the trademark owner’s reputation.”

To combat this ever-increasing phenomenon, eCommerce businesses can scrape Google and other search engines, marketplaces, and image searches to identify these fraudulent attempts and stop them as soon as possible.

Not only are these criminals profiting from your brand, but if they are convincing enough, they can damage your brand’s reputation by selling low-quality imitations, costing your business thousands of dollars in PR campaigns and lost sales.
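Once listings are scraped from marketplaces or search results, a first-pass filter can narrow thousands of results down to a short list for manual review. The sketch below assumes three hypothetical signals (your brand name in the title, a seller outside your authorized list, and a price far below MSRP); the brand “Acme”, the sellers, the 40% discount cutoff, and the prices are made up:

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Listing:
    title: str
    seller: str
    price: float
    url: str


def suspicious_listings(listings: List[Listing], brand: str, authorized: Set[str],
                        msrp: float, max_discount: float = 0.4) -> List[Listing]:
    """Flag listings that mention the brand, come from an unauthorized seller,
    and are priced more than `max_discount` below MSRP.
    These are candidates for human review, not proof of counterfeiting."""
    floor = msrp * (1 - max_discount)
    return [
        item for item in listings
        if brand.lower() in item.title.lower()
        and item.seller not in authorized
        and item.price < floor
    ]


# Hypothetical scraped listings
listings = [
    Listing("Acme Trail Runner", "acme-official", 119.00, "https://marketplace.example/1"),
    Listing("ACME trail runner - best price", "deals4u", 39.99, "https://marketplace.example/2"),
    Listing("Acme Trail Runner", "outdoor-shop", 99.00, "https://marketplace.example/3"),
    Listing("Generic trail runner", "deals4u", 25.00, "https://marketplace.example/4"),
]
for item in suspicious_listings(listings, "Acme", {"acme-official"}, msrp=120.0):
    print(item.seller, item.price, item.url)
```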

5 Tips to Scrape eCommerce Marketplaces at Scale

Data is valuable, and even though scraping public data is legal, that doesn’t mean business owners want you to scrape their sites. Most eCommerce marketplaces and online stores use anti-scraping techniques to identify bots and block them, keeping your scraper away from their information.

In many cases, this means a total ban on your IP address, making it impossible for your computer, and thus your scraping scripts, to access the website again.

To avoid issues and gather accurate data, here are five best practices you should follow on all your projects:

1. Determine If the Data is Geo Sensitive

A lot of websites will display different information according to the location from which the request was made. If you search for a pair of shoes on Google from France, the results will be totally different from what you’ll get by sending the request from the US.

To get accurate data, you’ll need to change the geolocation of your scraper to access the information you’re actually looking for.
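One way to change your scraper’s location is to route requests through a proxy in the target country. A sketch using Python’s standard `urllib`, assuming you have country-specific proxies from a provider; the proxy hostnames and credentials are placeholders, and the `Accept-Language` values are chosen to match each location:

```python
import urllib.request

# Placeholder proxy endpoints, one per exit country; replace with your provider's addresses
GEO_PROXIES = {
    "us": "http://user:pass@us.proxy.example:8000",
    "fr": "http://user:pass@fr.proxy.example:8000",
}
ACCEPT_LANGUAGE = {"us": "en-US,en;q=0.9", "fr": "fr-FR,fr;q=0.9"}


def geo_opener(country: str) -> urllib.request.OpenerDirector:
    """Build an opener whose traffic exits through a proxy located in `country`."""
    proxy = GEO_PROXIES[country]
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    )
    # Match the language header to the location so the request looks consistent
    opener.addheaders.append(("Accept-Language", ACCEPT_LANGUAGE[country]))
    return opener


# Usage (requires working proxies):
# html = geo_opener("fr").open("https://www.example.com/search?q=shoes", timeout=30).read()
```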

2. Slow Down Your Scraper

Web scrapers can extract information from thousands of websites in minutes. However, humans can’t.

Servers can use the number of requests performed per minute to determine whether a human or a bot is navigating the site. If your script is going too fast, it’s a clear flag for the server to cut your connection and ban your IP.
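A simple way to slow down is to pause a random interval between requests. The sketch below wraps any sequence of URLs in a generator that sleeps between items; the `polite_iter` name and the 2 to 6 second range are assumptions (the range caps the scraper at roughly 10 to 30 requests per minute), not figures any particular site publishes:

```python
import random
import time
from typing import Callable, Iterable, Iterator


def polite_iter(urls: Iterable[str], min_delay: float = 2.0, max_delay: float = 6.0,
                sleep: Callable[[float], None] = time.sleep) -> Iterator[str]:
    """Yield URLs one at a time, pausing a random interval before each one after the first.

    Random jitter avoids the perfectly regular timing a fixed delay would produce.
    """
    for i, url in enumerate(urls):
        if i > 0:
            sleep(random.uniform(min_delay, max_delay))
        yield url


# Usage, where fetch() stands in for your own (hypothetical) request function:
# for url in polite_iter(product_urls):
#     html = fetch(url)
```

Because the generator resumes only when the loop asks for the next URL, the pause always happens after the previous page has been processed.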

3. Rotate Your IP Address Between Requests

Rotating your IP address can help you avoid many issues, although not all of them. When you rotate your IP address, it’s like a whole new user connects to the website.

In other words, you’re telling the server every request is made by a different user, making it harder for it to find patterns to spot your scraper.

To make this work, you’ll need to have a pool of IPs and manage your proxies, which can be time-consuming and complex, but it’ll pay off in the long run.
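At its simplest, a proxy pool can be rotated round-robin, so each request leaves through the next IP address. A sketch with Python’s standard library; the pool uses placeholder documentation addresses (203.0.113.0/24), and a production pool would also retire proxies that get banned or time out, which this sketch doesn’t do:

```python
import itertools
import urllib.request
from typing import Iterator, List

# Placeholder proxy pool; in practice this comes from your proxy provider
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def rotating_openers(pool: List[str]) -> Iterator[urllib.request.OpenerDirector]:
    """Endlessly yield openers, each routing traffic through the next proxy in the pool."""
    for proxy in itertools.cycle(pool):
        yield urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )


# Usage (requires working proxies):
# openers = rotating_openers(PROXY_POOL)
# for url in product_urls:
#     html = next(openers).open(url, timeout=30).read()
```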

4. Make Sure the Data is in the HTML File

Web scrapers send a request to a server, which returns the raw HTML file; the scraper then parses it to extract the data you asked for. However, a lot of websites use JavaScript to inject content into their pages dynamically after the initial HTML loads. Scripts that only fetch the raw HTML never run that JavaScript, so they can’t see this content.

Before pointing a scraper at millions of pages, verify that you can access the data with regular requests; otherwise, you’ll waste a lot of resources scraping empty pages without gaining any data.
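A quick way to run that check: copy a few values you can see on the rendered page (a price, a product title) and test whether they appear in the HTML a plain request returns. A sketch using only Python’s standard library; the helper names and the example URL are hypothetical:

```python
import urllib.request
from typing import List


def fetch_raw_html(url: str) -> str:
    """Plain HTTP GET: no JavaScript runs, so only server-rendered content comes back."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def missing_from_raw_html(html: str, expected: List[str]) -> List[str]:
    """Return the values visible in the browser that are absent from the raw HTML.

    An empty list means a regular request is enough; otherwise the page
    likely renders that data with JavaScript.
    """
    return [value for value in expected if value not in html]


# Usage:
# html = fetch_raw_html("https://www.example.com/product/123")
# print(missing_from_raw_html(html, ["$79.99", "Trail Runner 2"]))
```

Data sometimes sits in the raw HTML inside a `<script>` block as JSON, formatted differently (for example `79.99` instead of `$79.99`), so try a few variants before concluding it’s absent.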

5. Use the Right Combination of Headers

In simple terms, “An HTTP header is a field of an HTTP request or response that passes additional context and metadata about the request or response.” Servers use this information to identify users, track their behavior on the website and, in many cases, differentiate between humans and bots.

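Browsers send a full set of HTTP headers with every request. Your scraper, by default, sends only a minimal set (usually with a user agent that identifies the HTTP library rather than a browser) and no cookies unless you manage them yourself. That alone can be enough for servers to block you.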

If you’ve never worked with headers before, here’s a guide on how to grab and use HTTP headers for beginners.
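For reference, a sketch of sending browser-like headers with Python’s standard `urllib`. The values are an example of what a desktop Chrome browser sends and will go stale as browsers update, so copy current ones from your own browser’s developer tools; the `browser_like_request` name and the cookie value are placeholders:

```python
import urllib.request

# Example browser-like headers. Accept-Encoding is omitted on purpose:
# urllib does not decompress gzip/brotli responses automatically.
BROWSER_HEADERS = {
    "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                   "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
}


def browser_like_request(url: str, cookies: str = "") -> urllib.request.Request:
    """Build a request carrying browser-like headers and, optionally, cookies."""
    headers = dict(BROWSER_HEADERS)
    if cookies:
        headers["Cookie"] = cookies  # e.g. a session cookie captured from an earlier response
    return urllib.request.Request(url, headers=headers)


# Usage:
# req = browser_like_request("https://www.example.com/product/123", cookies="session=abc123")
# html = urllib.request.urlopen(req, timeout=30).read()
```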

Wrapping Up: Make Scraping Easier with ScraperAPI

Scraping eCommerce data is easier said than done. The programming logic of scraping data from websites is actually fairly simple and straightforward, at least for well-formatted websites. The real challenge is handling all the complexities websites will throw your way.

Not only do you need to handle proxies and JavaScript, but there are also techniques like CAPTCHAs designed to stop your scripts from working properly.

That’s exactly why we built ScraperAPI. With just a single line of code, your requests will be sent through ScraperAPI’s servers, handling the hard part for you.

Out of the box, ScraperAPI rotates your IP address between every request, drawing from a pool of residential IP addresses and browser farms. It handles CAPTCHAs by retrying the request from a different IP, and it uses years of statistical analysis and machine learning to determine the best combination of headers for you.

In addition, ScraperAPI can render JavaScript sites, making them accessible without complex solutions like headless browsers, and it can change the geolocation of your scraper when you change a single parameter in the URL.
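As an illustration of the single-line approach, the sketch below builds a request URL for ScraperAPI’s HTTP endpoint. The parameter names (`api_key`, `url`, `render`, `country_code`) follow ScraperAPI’s documented query parameters at the time of writing; check the documentation for the current list. The `scraperapi_url` helper is hypothetical and `YOUR_API_KEY` is a placeholder:

```python
from urllib.parse import urlencode

SCRAPERAPI_ENDPOINT = "https://api.scraperapi.com/"


def scraperapi_url(api_key: str, target_url: str, render: bool = False,
                   country_code: str = "") -> str:
    """Build a ScraperAPI request URL; the target URL is percent-encoded as a parameter."""
    params = {"api_key": api_key, "url": target_url}
    if render:
        params["render"] = "true"  # ask ScraperAPI to execute JavaScript before returning HTML
    if country_code:
        params["country_code"] = country_code  # geotargeting, e.g. "us" or "fr"
    return SCRAPERAPI_ENDPOINT + "?" + urlencode(params)


# Usage (the single line that sends the request):
# html = urllib.request.urlopen(scraperapi_url("YOUR_API_KEY", "https://www.example.com/product/123", country_code="us")).read()
```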

If you’re new to web scraping, check out some of our beginner tutorials:

- Web Scraping Basics [Free PDF Guide]

- How to Web Scrape With JavaScript & Node.js

- Build a Web Scraper With Python & Scrapy in 6 Steps

- Building A Golang Web Scraper: Simple Steps

- Easily Build a PHP Web Scraper With Goutte

- Web Scraping Using Ruby – Build a Powerful Scraper in 7 Steps

- Guide to Web Scraping Using C# Without Getting Blocked

- Web Scraping in R: How to Easily Use rvest for Scraping Data

To learn more about ScraperAPI, create a free account with 5,000 free API credits to test, or check out our documentation for a detailed walkthrough of what ScraperAPI can do for you.

Until next time, happy scraping!